Summary: The massive amount of global construction anticipated to happen in the next 50 years is driving nearly every country to ask how we can positively change the world efficiently and sustainably while meeting societal requirements. Meeting our societal needs will only happen by applying real-world context from GIS with BIM design and construction data to new processes for assessing impact and predicting outcomes.

For the Engineering Summit at the 2019 Esri International User Conference, I was asked to give a plenary talk. Given the high caliber of speakers and presentations I knew we’d be seeing from teams at GHD, HNTB, and many more, this was a tall order. After all, the experts in BIM and CAD integration with GIS are our users and partners. Our development effort and business activities, such as our partnership with Autodesk, are in direct response to user need, customer interest, market trends, and even legislative demand around the world.

The massive amount of global construction anticipated to happen in the next 50 years is driving nearly every country to ask how we can positively change the world efficiently and sustainably while meeting societal requirements. Most of this construction to support urbanization, human habitation requirements, transportation, and climate change mitigation will be driven by Building Information Modeling (BIM) processes. We are seeing increasing legislative demand to enforce the use of environmental and existing asset context in design and construction projects. Our users and the data that they create will provide essential information and analysis about the impact and expected outcomes of all this new construction. Meeting our societal needs will only happen by applying real-world context from GIS with BIM design and construction data to new processes for assessing impact and predicting outcomes.

Drivers for integration

From my early career as a software developer to my current role as a product manager, I have been deeply involved in efforts focused on exchanging information between the design and construction world and the back office. In these projects, the essential drivers for combining BIM and GIS data have nearly always been the same:

- Provisioning of design and construction teams with geospatial information for context that provides an understanding of initial conditions to help achieve reliable outcomes more efficiently and quickly

- Use of design and construction data in geospatial workflows for more accurate and more efficient planning, operation, performance evaluation, maintenance, and emergency response

- Situational awareness within projects or systems of assets to achieve common understanding and communication of status at any point in design, construction, or operation

Patterns for data interchange

Patterns of data interchange between the GIS and BIM worlds have evolved over time, yet they retain fundamental characteristics driven more by business process and need than by technology. All integration patterns have value, and none of them is perfect. The complexity of the business need, coupled with rapid and continuous change in technology, has meant that no single data integration pattern or technique has clearly and permanently overcome all the others.

The basic patterns that we see can be summed up as follows:

- Extract-Translate-Load (ETL)

- BIM as 3D graphics

- Web-to-web client integration

- Extract-Load-Translate (ELT) – Seamless reuse

- Standards-based ETL

- The Standards-based Common Data Environment (CDE)

In this article, I’ll discuss the first four in detail and touch on Standards-based ETL and the CDE pattern briefly, leaving these for future articles.

Extract-Translate-Load (ETL)

ETL is a classic integration pattern used around the world. In ETL, specific data are mapped from one known schema or format into another known schema or format. The process starts with ‘extraction,’ in which an application or system queries the source database or data, finds the specific content that is needed, and stores it outside the source, typically on the same system or possibly on an intermediary system.

After extraction, data are typically ‘translated’ (some, including Safe Software and the Pragmatic Institute, say ‘transformed’ because the step often involves more than dictionary changes) into another schema. Translation may involve removing incomplete records, converting variable formats, or even converting information into a different encoding. Finally, the data are ‘loaded’ into the destination system.
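To make the three steps concrete, here is a minimal sketch in Python. The source records, field names, and unit conversion are hypothetical stand-ins, not any product’s schema. Note how the translate step silently drops a field it has no mapping for and discards an incomplete record, which is the kind of data loss discussed below.

```python
"""A minimal Extract-Translate-Load sketch using hypothetical asset records."""

# Hypothetical source records, standing in for rows queried from a source system.
SOURCE = [
    {"Tag": "D-101", "Type": "Door", "Width_in": 36.0, "Fire_Rating": "20 min"},
    {"Tag": "D-102", "Type": "Door", "Width_in": None, "Fire_Rating": "none"},
    {"Tag": "W-201", "Type": "Window", "Width_in": 48.0, "Fire_Rating": None},
]


def extract(rows, wanted_type):
    """Extract: copy only the records of interest out of the source."""
    return [dict(r) for r in rows if r["Type"] == wanted_type]


def translate(rows):
    """Translate: rename fields, convert inches to meters, drop incomplete rows.

    Any source field not mapped here (e.g. Fire_Rating) is discarded,
    which is one way ETL loses information.
    """
    out = []
    for r in rows:
        if r["Width_in"] is None:
            continue  # incomplete record: removed rather than repaired
        out.append({"asset_id": r["Tag"], "width_m": round(r["Width_in"] * 0.0254, 3)})
    return out


def load(rows, destination):
    """Load: append the translated records to the destination table."""
    destination.extend(rows)
    return destination


gis_table = load(translate(extract(SOURCE, "Door")), [])
print(gis_table)  # [{'asset_id': 'D-101', 'width_m': 0.914}]
```

Each step is a separate function so that, as in real ETL tools, the mapping logic in `translate` can be changed without touching extraction or loading.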

Pros

ETL implementation is accessible to developers and configurators who can apply practical problem solving, data architecture, and programming skills to connect disparate formats and produce valuable reports and analyses. ETL solutions are flexible in that they can be tailored on a case-by-case basis to address specific data types and domain problems.

Cons/Challenges

ETL implementations can require ongoing maintenance as business needs and file formats change, creating technical debt that can be costly to the business and difficult to scale. ETL necessitates changing data from one state to another and often results in data loss. ETL systems can have difficulty maintaining bidirectional relationships, especially in cases where data resolution is significantly different from one system to another.

How Esri supports BIM-GIS workflows with ETL

Many Esri customers use tools such as Safe Software’s FME or the ArcGIS Data Interoperability Extension to bridge between diverse formats and specifications. One common application in the BIM-GIS conversation is exchanging data between buildingSMART International’s Industry Foundation Classes (IFC) and asset data in GIS. ETL processes will be required for years to come, and the ETL pattern is a key enabler of related markets and technologies, such as data science applications. As the BIM market evolves, it’s likely that ETL will become more prevalent over time, not less.

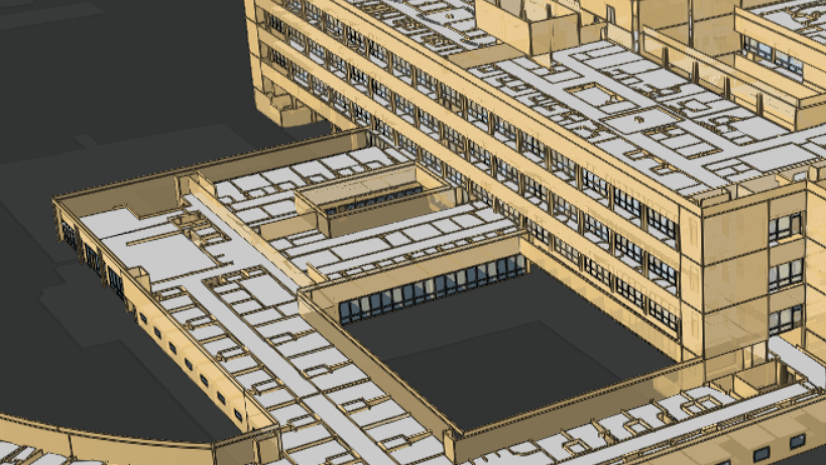
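At the heart of an IFC-to-GIS exchange is usually a crosswalk from IFC entity types to GIS feature classes. The sketch below assumes a hypothetical crosswalk and uses placeholder element IDs (real IFC GlobalIds are 22-character encoded strings); it is not how FME or the Data Interoperability Extension work internally. It routes elements to target classes and counts what the mapping cannot carry.

```python
from collections import Counter

# Hypothetical crosswalk from IFC entity names to GIS feature class names.
IFC_TO_GIS = {
    "IfcDoor": "Doors",
    "IfcWindow": "Windows",
    "IfcSpace": "Rooms",
}


def map_elements(elements, crosswalk):
    """Route each element to a target feature class; report what cannot be mapped."""
    routed = {}
    unmapped = Counter()
    for el in elements:
        target = crosswalk.get(el["ifc_class"])
        if target is None:
            unmapped[el["ifc_class"]] += 1  # data that this ETL will not carry over
            continue
        routed.setdefault(target, []).append(el["global_id"])
    return routed, unmapped


# Placeholder IDs, not real IFC GlobalIds.
elements = [
    {"ifc_class": "IfcDoor", "global_id": "door-01"},
    {"ifc_class": "IfcSpace", "global_id": "space-01"},
    {"ifc_class": "IfcFlowTerminal", "global_id": "term-01"},
]
routed, unmapped = map_elements(elements, IFC_TO_GIS)
print(routed)    # {'Doors': ['door-01'], 'Rooms': ['space-01']}
print(unmapped)  # Counter({'IfcFlowTerminal': 1})
```

Reporting unmapped classes explicitly, rather than skipping them silently, is one way to keep the maintenance burden of an ETL crosswalk visible as schemas change.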

BIM as 3D graphics

High-end 3D visuals are increasingly expected by customers in many GIS-related domains, and this need becomes acute when merging GIS with architectural or civil engineering projects. For years, architects have been able to generate high-quality 3D renderings of projects, producing increasingly realistic images of structures long before they exist in the real world. In today’s market, game engine technology has opened up virtual reality (VR) as a user experience that enables immersive review, approval, and even commissioning and training workflows with realistic digital representations of buildings and structures. Whether for realism or for information, many of these projects require geospatial context as part of the visualization.

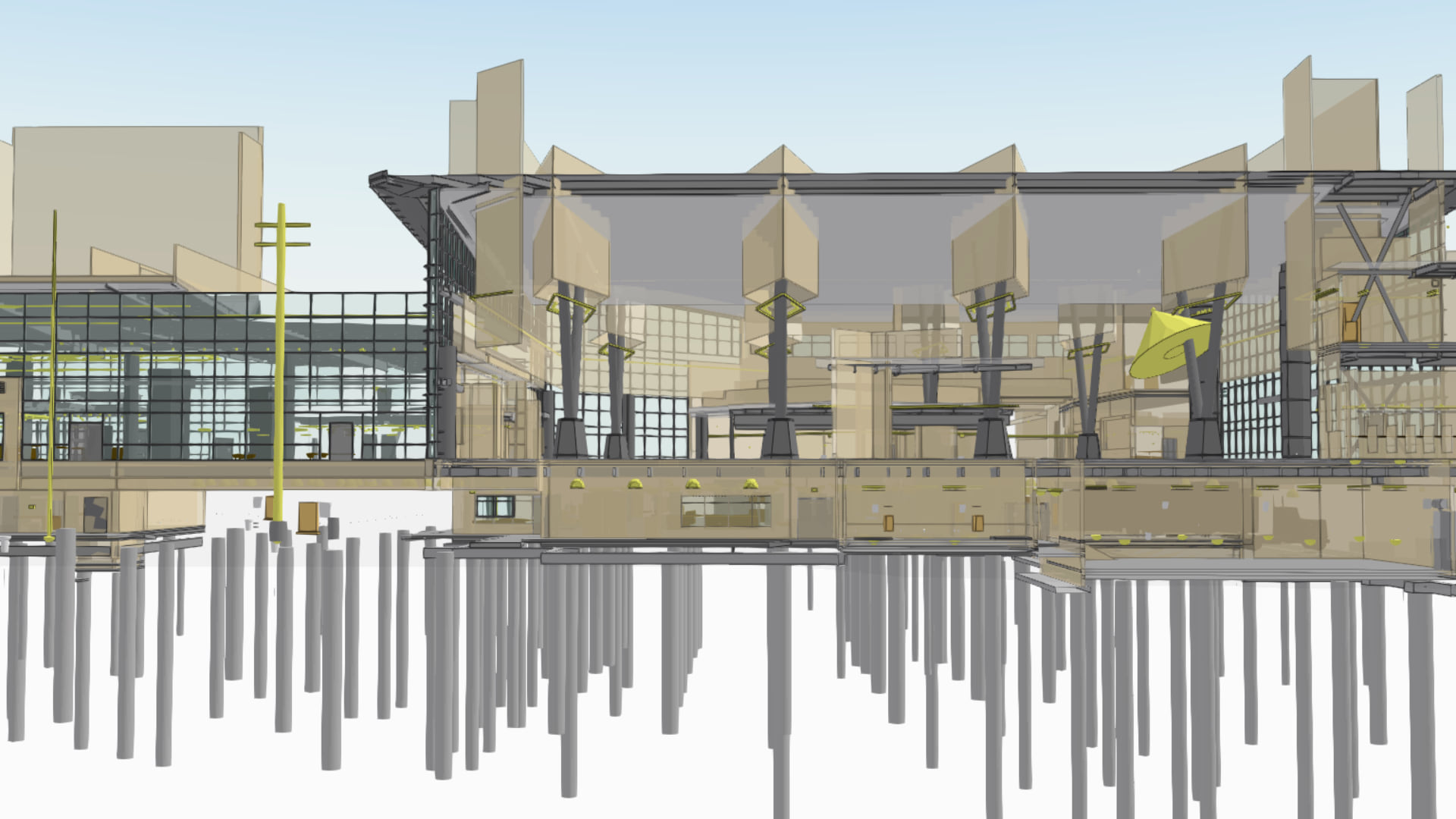

A common emerging workflow that helps bring together 3D BIM content with GIS requires converting the BIM models into common 3D graphics file formats that are consumed in applications such as Trimble SketchUp, ArcGIS Pro, and Unreal Engine. These projects result in compelling visual experiences that help stakeholders understand how a structure will interact with the surrounding environment.

Pros

In many cases, representing BIM as 3D graphics is a quick, effective technique for producing good-quality visualizations for presentations and stakeholder review. As workflows using game engines and VR become more prevalent, there is a greater need to aggregate 3D content in tools that can be used to create user-friendly experiences. We’re also seeing emerging standards for sharing simple 3D models, such as glTF, become more widely adopted across software tools.

Cons/Challenges

Almost without exception, converting an information-rich asset from BIM or GIS into a 3D model format used by game engines and graphics tools results in loss of attribution and metadata. As a result, some customers feel that 3D graphics conversion workflows are one-directional and of limited value. Because these formats typically do not support coordinate systems or geospatial coordinates, georeferencing information is stored outside the model and may be lost during format conversion.
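When georeferencing lives outside the model, placing the model on a map means applying a transform from local model coordinates to projected map coordinates. The sketch below assumes a hypothetical sidecar record holding the map position of the model origin, a rotation, and a scale, and applies a 2D similarity transform (elevation is ignored for brevity). If that sidecar is lost during conversion, every vertex lands at the local origin instead.

```python
import math
from dataclasses import dataclass


@dataclass
class Georef:
    """Hypothetical sidecar georeferencing record kept outside the 3D model file."""
    origin_easting: float   # map coordinate of the model's local origin
    origin_northing: float
    rotation_deg: float     # counterclockwise rotation from model axes to grid axes
    scale: float = 1.0


def local_to_map(x, y, g):
    """Apply a 2D similarity transform (rotate, scale, translate) to one point."""
    t = math.radians(g.rotation_deg)
    e = g.origin_easting + g.scale * (x * math.cos(t) - y * math.sin(t))
    n = g.origin_northing + g.scale * (x * math.sin(t) + y * math.cos(t))
    return e, n


sidecar = Georef(origin_easting=500000.0, origin_northing=4100000.0, rotation_deg=90.0)
print(local_to_map(10.0, 0.0, sidecar))  # (500000.0, 4100010.0)
```

The rotation sign convention here is an assumption; real tools differ on whether they store angles to true north or grid north and in which direction, so the convention must travel with the sidecar.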

Sometimes, we find that a model that was created as separate parts in a BIM application will be fused into a monolithic single 3D model that won’t perform well when displayed in a GIS. Today, many GIS tools will also struggle to display assets with textures or materials that reflect the source content, though this problem is gradually diminishing as GIS vendors, including Esri, increase the visual quality of their application output.

Finally, one of the critical issues with using BIM as generic 3D models is that, for many end users, ‘seeing is believing.’ Just because it is relatively easy to bring a model into GIS and make it look good does not mean it is easy to bring along asset metadata and attributes through that same pathway.

How Esri supports the BIM as 3D Graphics approach

Despite some of the limitations of this approach, Esri enables the use of BIM data as 3D graphics, especially to help customers use data in emerging workflows involving game engines, XR experiences, and other applications that focus more on visuals than analytics. The Esri CityEngine team was one of the first non-Epic Games teams to provide a Datasmith workflow to streamline 3D graphics content into Unreal Engine. The CityEngine team uses this to support a VR template project that is available on the UE4 Marketplace.

Esri 3D teams have also been looking closely at glTF. glTF is now directly supported as a marker symbol in ArcGIS desktop and web applications and we are basing some of our upcoming materials spec around the glTF and Universal Scene Description material implementations.

Beyond these early stage features, we’re also exploring more direct game engine integration with popular engines such as Unity and Unreal Engine.

Web-to-web client integration

One of the highest value, yet most subtly difficult, integration patterns that we encounter is the concept of combining web interfaces across multiple systems to provide a common portal or dashboard into spatial, design, and performance data associated with real world assets. Fundamentally, this pattern holds the promise of merging the power of GIS as a communication tool with design and construction data that can inform project communication, smart city operations, and asset management.

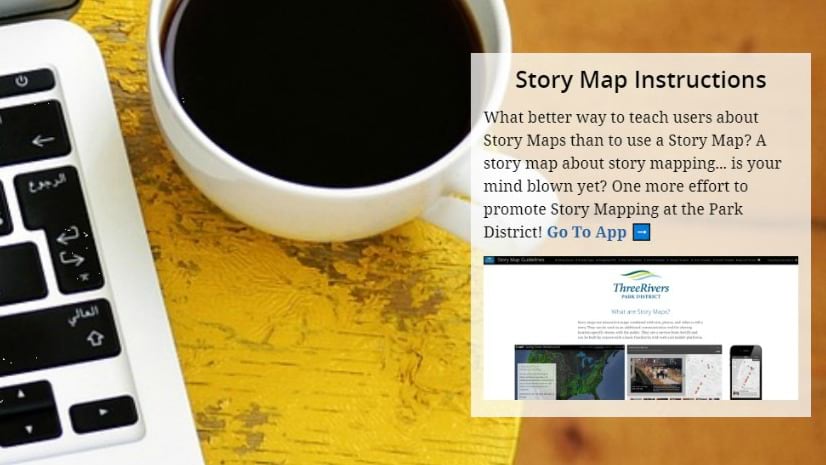

I started putting maps on the internet in the late 1990s and a map view into asset management and design data became a common theme in projects and product efforts that I worked on. We have seen numerous customers and partners use Esri web tools including story maps, Web AppBuilder, and custom JavaScript applications to link to systems such as Autodesk’s BIM 360 and show end users their project or asset data and GIS data in a single experience. Customers ask us repeatedly for a single product that enables this workflow.

The complexity lies in the fact that each version of this type of integration delivers unique value to a different group of end users. BIM program managers, facility managers, and city operations managers may all need to integrate design data with GIS, yet their fundamental business drivers are different. There isn’t one interface to satisfy them all. That said, working with partners, we have built several versions of this integration, and we are working on providing a set of tools that allows anyone to build out the experience they need using ArcGIS and our partners’ web integration tools and capabilities.

Pros

One of the most appealing aspects of the web-based integration experience is that it is typically designed with the end user in mind. Each experience implements a tailored workflow to show diverse information such as project cost and schedule status, live asset performance indicators, or work order status. The user experience is designed to give users in a web browser the information they need when they need it.

Our industry has evolved common techniques and tools that make building web-based integrations accessible to web developers who may not be BIM or GIS experts. Using standard development experiences, developers need only know where the data is, how to link it together, and how to authenticate into different systems.

Perhaps most importantly, the web-to-web client integration pattern respects ‘system of record’ source of content. The user may be exploring a map served by ArcGIS Online and, upon clicking through an asset and investigating attribute data, may link directly to information in a BIM repository, such as BIM 360.

Cons/Challenges

Despite how simple it may seem to bring together GIS and BIM on the web, there are some common hurdles that can stump even sophisticated developers. In the market today, there is no common indexing scheme or standard interface that allows GIS to be automatically indexed to BIM information, requiring per-owner-operator or per-project indexing patterns.
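Without a common indexing scheme, each project ends up maintaining its own crosswalk between GIS asset IDs and BIM element IDs. The sketch below uses a hypothetical per-project index and a hypothetical BIM viewer URL. It links each GIS asset back to its BIM record in the system of record, rather than copying content, and reports assets that have no BIM counterpart.

```python
from urllib.parse import quote

# Hypothetical per-project index from GIS asset IDs to BIM element IDs.
# No common standard exists for this key, so each owner or project defines its own.
PROJECT_INDEX = {
    "HYD-0001": "bim-elem-7f3a",
    "HYD-0002": "bim-elem-9c21",
}

# Hypothetical viewer URL in the BIM repository (the system of record).
BIM_VIEWER_BASE = "https://bim.example.com/items/"


def bim_link(bim_id):
    """Build a link back to the BIM record instead of copying its content."""
    return BIM_VIEWER_BASE + quote(bim_id)


def link_features(gis_asset_ids, index):
    """Return (asset_id, bim_url) pairs plus GIS assets with no BIM counterpart."""
    linked, orphans = [], []
    for asset_id in gis_asset_ids:
        bim_id = index.get(asset_id)
        if bim_id is None:
            orphans.append(asset_id)
        else:
            linked.append((asset_id, bim_link(bim_id)))
    return linked, orphans


linked, orphans = link_features(["HYD-0001", "HYD-0003"], PROJECT_INDEX)
print(linked)   # [('HYD-0001', 'https://bim.example.com/items/bim-elem-7f3a')]
print(orphans)  # ['HYD-0003']
```

In a real integration, the index itself becomes another dataset someone must own and maintain, which is part of why these apps are easy to prototype and hard to sustain.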

Commercially developed content repositories, common in today’s market because of the expense of hosting and providing content management tools for massive quantities of data, are typically not co-developed by multiple vendors. Different vendors will implement different authentication patterns and interface technologies.

At the domain level, patterns of data ownership, update, and maintenance drive inconsistencies that require complex data synchronization and extraction patterns. BIM design data change regularly during project inception and construction. Linking to this constantly changing data from a record-keeping application that has scheduled legal dependencies may be problematic. Often, the data required to solve a specific problem are in neither the BIM nor the GIS and must be sourced from yet another application system with its own idiosyncrasies.
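One building block of the synchronization these integrations need is detecting what changed between two snapshots of design data before a record-keeping system accepts updates. The sketch assumes each snapshot is a hypothetical mapping from element ID to a version number; real systems may use timestamps, hashes, or change feeds instead.

```python
def diff_snapshots(old, new):
    """Compare two {element_id: version} snapshots and classify changes."""
    added = sorted(new.keys() - old.keys())
    removed = sorted(old.keys() - new.keys())
    changed = sorted(k for k in old.keys() & new.keys() if old[k] != new[k])
    return {"added": added, "removed": removed, "changed": changed}


# Hypothetical snapshots of the same design model taken on two days.
monday = {"wall-1": 3, "wall-2": 1, "door-1": 2}
friday = {"wall-1": 4, "door-1": 2, "door-2": 1}
print(diff_snapshots(monday, friday))
# {'added': ['door-2'], 'removed': ['wall-2'], 'changed': ['wall-1']}
```

A diff like this tells the downstream system what to review; deciding whether a removed design element should retire a legal record is still a business rule, not a technical one.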

Just about anyone can create a web page showing project information, but making that web page useful or sustainable can be substantially complex.

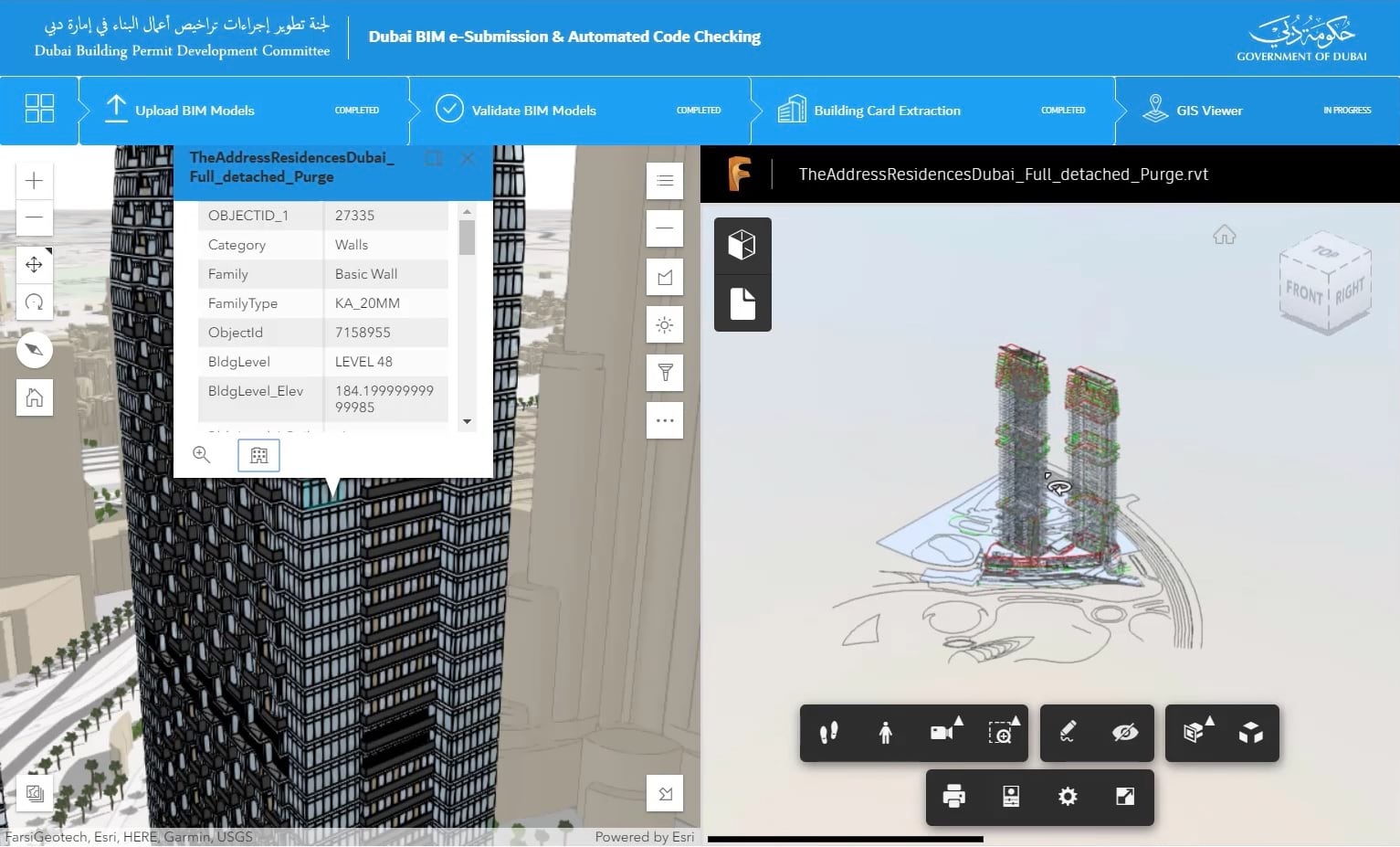

How Esri supports easier web-to-web client integration

ArcGIS has provided tools to build custom web applications, including industry-standard integration tools, for decades. The ArcGIS API for JavaScript enables extensive customization of 2D and 3D web experiences. Tools such as Web AppBuilder and the new ArcGIS Experience Builder, currently in Beta, enable users to create tailored web workflows through configuration.

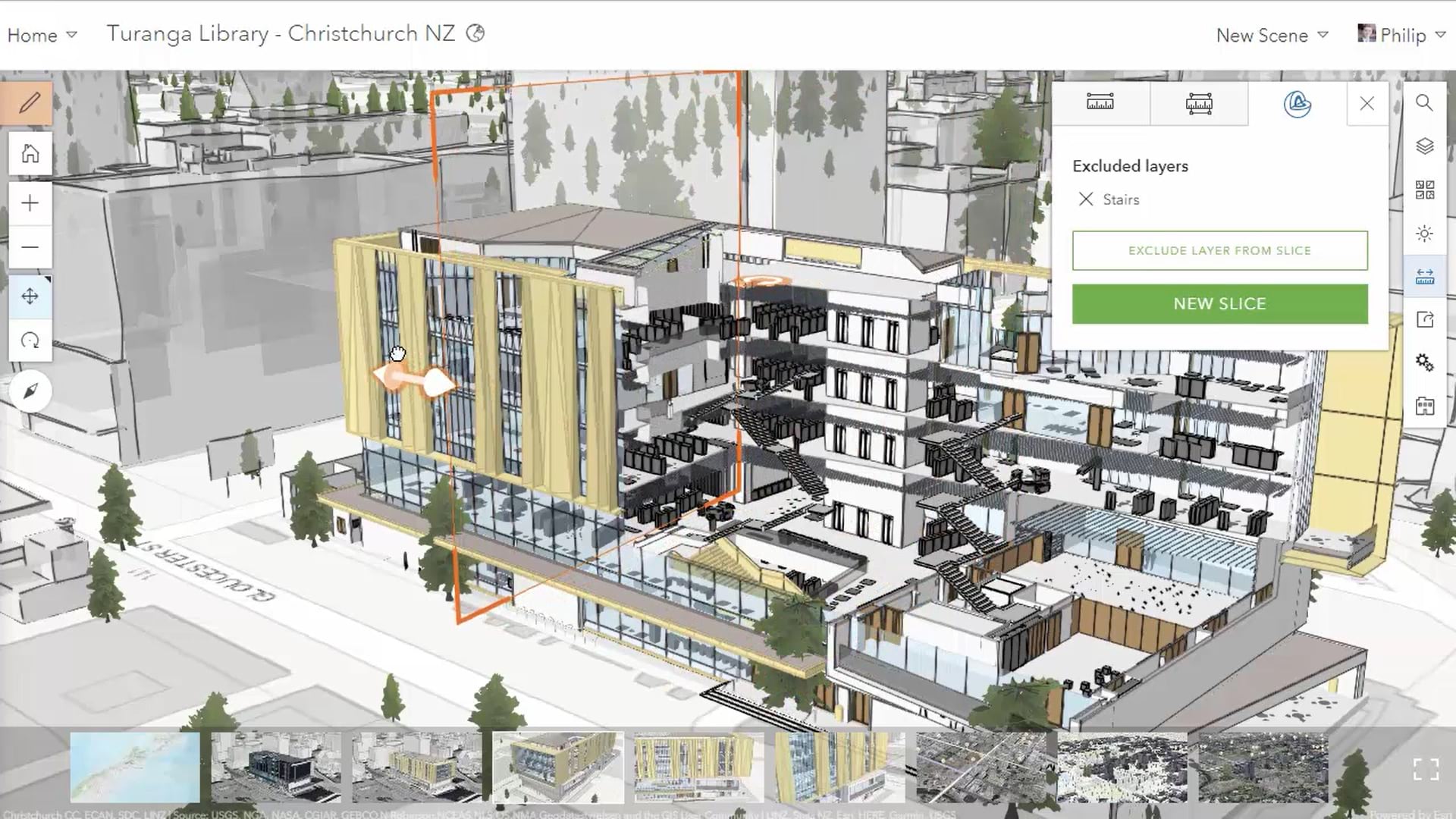

Esri also provides extensive open access to content managed in ArcGIS Online and ArcGIS Enterprise through GeoREST services that encompass data types (layers), analysis (geoprocessing), and content management capability. The new I3S Building Scene Layer is a great example of how we have even introduced new layers and capabilities in ArcGIS specifically for sharing BIM content over the web.
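As an illustration of that open access, a web client can request features from a hosted feature layer through the ArcGIS REST API `query` operation, using parameters such as `where`, `outFields`, `returnGeometry`, and `f`. The sketch below only builds the request URL; the layer URL and the field names `ASSET_ID` and `STATUS` are hypothetical, and token-based authentication for secured services is not shown.

```python
from urllib.parse import urlencode

# Hypothetical feature layer URL; substitute a real ArcGIS Online or Enterprise layer.
LAYER_URL = "https://services.example.com/arcgis/rest/services/Assets/FeatureServer/0"


def build_query_url(layer_url, where="1=1", out_fields=("*",), return_geometry=True):
    """Build a feature-layer query request URL (ArcGIS REST API 'query' operation)."""
    params = {
        "where": where,
        "outFields": ",".join(out_fields),
        "returnGeometry": "true" if return_geometry else "false",
        "f": "json",
    }
    return f"{layer_url}/query?{urlencode(params)}"


url = build_query_url(LAYER_URL, where="ASSET_ID = 'HYD-0001'", out_fields=("ASSET_ID", "STATUS"))
print(url)
```

Fetching the URL (for example with `urllib.request.urlopen`) and parsing the JSON response would return the matching features, which a web app could then join to BIM records by asset ID.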

We also have created several versions of custom web apps that bring together BIM and GIS. Currently, these are bespoke and not productized. We have provided sample code to help some partners create their own integration apps and we are exploring how we can create a solution or product that encapsulates the range of our customers’ needs while also preserving flexibility and configurability.

Extract-Load-Translate (ELT) – Seamless reuse

In the last few years, we’ve seen another integration pattern start to emerge. The ubiquity of storage and the rapidly improving ability to read more and more content through standard APIs have allowed some applications to open and read content from a source and defer translating that data for use as native content in the destination application. Data may be read directly from source files or services in their native location, or they may be copied, then loaded and translated. In general, these ‘Extract-Load-Translate’ patterns are changing integration from perpetual data loss to opportunistic data reuse.

One example of the ELT pattern is the CAD and Revit file reading capability in ArcGIS Pro. Files can stay in a source file share, or be copied to a new location, and be read directly by Pro. Pro reads the source data and dynamically interprets it as if it were a collection of GIS feature services, without changing the source file. From that state, a user may choose to convert the data to another format, such as the I3S Building Scene Layer for dynamic streaming over the web.

At a more sophisticated level, the ELT pattern is a key component of data lakes. If data can be snapshotted (extracted) and then moved to a new curated location (loaded), then at any time it can be read to the best ability of destination software systems (translated). Over time, the expectations are that the quantity of data will grow, the quality of data will improve, and the ability to read and use data for new purposes will improve.
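The essence of ELT is that the raw snapshot is kept intact and translation happens later, at read time, so improved readers can extract more from the same data. In the sketch below, a Python dictionary stands in for the source file (nothing here parses Revit), the in-memory store is a hypothetical curated location, and the two translator versions are illustrative.

```python
import copy
from datetime import datetime, timezone

RAW_ZONE = {}  # hypothetical curated store: snapshot id -> untouched source payload


def extract_and_load(source_id, payload):
    """Extract + Load: snapshot the source as-is; no schema changes yet."""
    snapshot_id = f"{source_id}@{datetime.now(timezone.utc).isoformat()}"
    RAW_ZONE[snapshot_id] = copy.deepcopy(payload)
    return snapshot_id


def translate_v1(raw):
    """An early reader: understands only names and categories."""
    return [{"name": e["name"], "category": e["category"]} for e in raw["elements"]]


def translate_v2(raw):
    """A later, improved reader: also picks up levels from the same raw snapshot."""
    return [
        {"name": e["name"], "category": e["category"], "level": e.get("level")}
        for e in raw["elements"]
    ]


# Placeholder payload standing in for a source model file.
sid = extract_and_load("tower.rvt", {"elements": [
    {"name": "Door 1", "category": "Doors", "level": "L2", "fire_rating": "60 min"},
]})
print(translate_v1(RAW_ZONE[sid]))  # [{'name': 'Door 1', 'category': 'Doors'}]
print(translate_v2(RAW_ZONE[sid]))  # [{'name': 'Door 1', 'category': 'Doors', 'level': 'L2'}]
```

Because the snapshot still holds `fire_rating`, a future reader can use it without re-extracting anything, which is the "opportunistic reuse" that distinguishes ELT from ETL.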

Pros of ELT workflows

ELT workflows are highly desirable to end users because they often hide schema conversion and appear to almost magically allow access to and use of data in new applications or systems. Content may appear native to the destination, as when Revit files appear as GIS feature layers in ArcGIS Pro. ELT enables progressively better use of source information over time: as ArcGIS Pro’s ability to read Revit content improves, more and more use can be made of the source data with minimal additional work (if any) by the end user.

Depending on implementation, ELT has the potential to respect, or at least preserve relationships back to, the system of record for source content. ELT also facilitates bidirectional workflows through conservation of metadata and data semantics.

Cons/Challenges

Bad input data is hard to overcome, and this can be especially painful for users working with ELT technologies because the user experience can appear automatic, until it is not. Missing metadata, bad geometry, and old or nonstandard content can cause ELT workflows to fail or underperform. One standout example with Revit content is the common lack of survey point information, without which it is nearly impossible to geolocate projects automatically.

ELT requires extensive access to source content, which may not be possible with proprietary data sources. ELT also requires extensive knowledge of the source domain and can lead to the misperception that destination applications can operate on content as well as the native applications that created it. This will not always be so, especially where extensive parametric or dynamically derived geometry, or calculated attributes, must be materialized or frozen in destination software tools. ELT is the most costly integration capability to build, partly because it provides the best end-user experience.

When combining BIM and GIS data, ELT requires program-level BIM specifications to be able to consistently reuse BIM content in applications such as GIS. A GIS can’t magically add the right level information or asset IDs that might be needed for a BIM model to be most useful for asset management after construction. The diversity of AEC and BIM content from the many organizations in the market makes it unlikely that Esri will be able to support ELT workflows for every vendor software or open format. We will have to pick market-dominant formats with solid implementation and rely on other integration workflows to meet the needs of some formats and specifications.

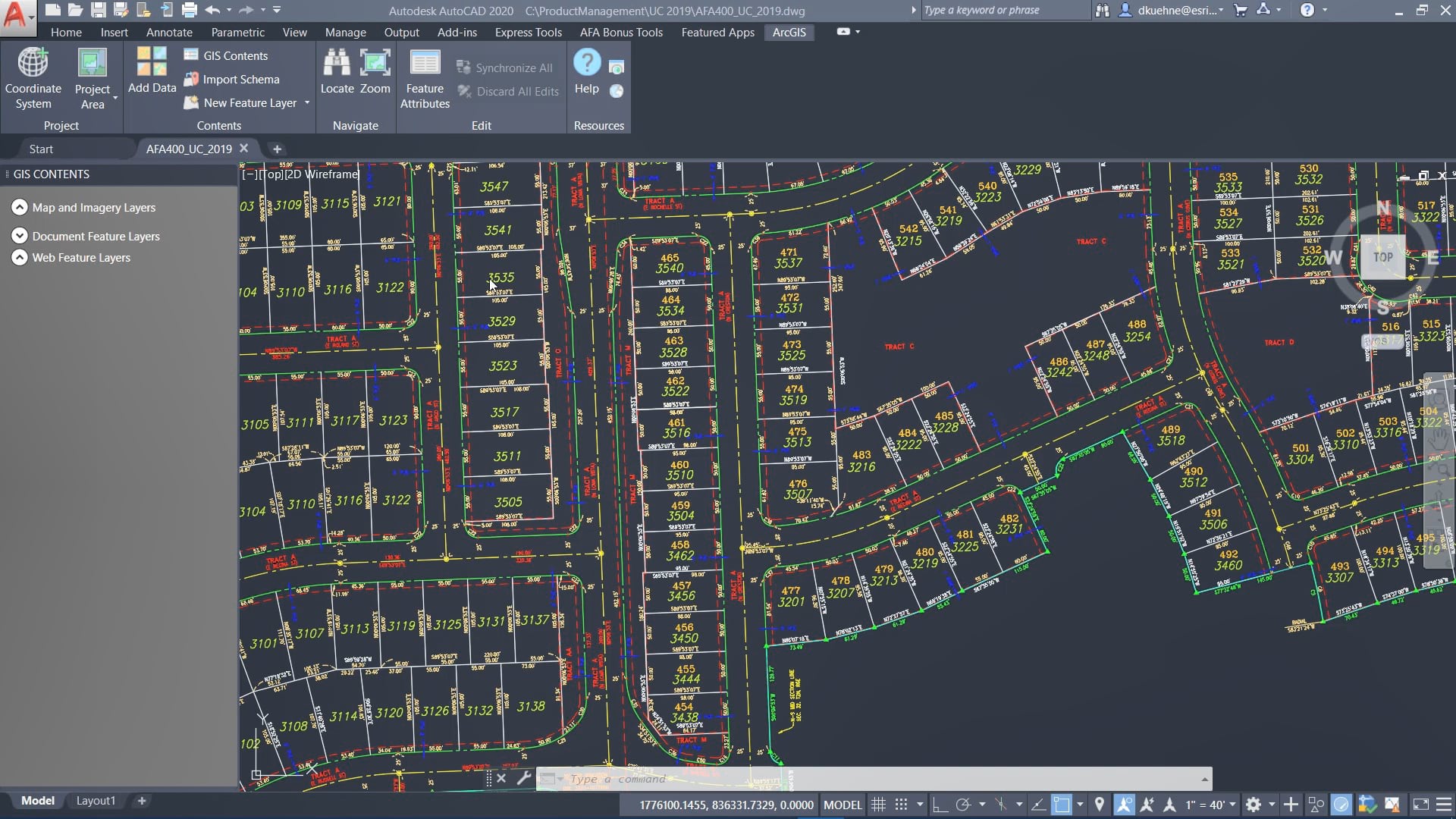
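Because ELT depends on well-formed input, a program-level BIM specification is most useful when it can be checked before models reach the GIS. The sketch below assumes a hypothetical specification requiring a survey point on every model and an asset ID and level on every element; the model is a plain dictionary, not a Revit API object.

```python
# Hypothetical program-level BIM specification: what every model and element must carry.
REQUIRED_MODEL_FIELDS = ("survey_point",)
REQUIRED_ELEMENT_FIELDS = ("asset_id", "level")


def preflight(model):
    """List the problems that would stop an automated ELT workflow for this model."""
    problems = [f"model missing {f}" for f in REQUIRED_MODEL_FIELDS if not model.get(f)]
    for el in model.get("elements", []):
        for f in REQUIRED_ELEMENT_FIELDS:
            if not el.get(f):
                problems.append(f"{el.get('name', '?')}: missing {f}")
    return problems


model = {
    "survey_point": None,  # the model was never georeferenced
    "elements": [
        {"name": "Pump 1", "asset_id": "P-001", "level": "B1"},
        {"name": "Pump 2", "asset_id": "", "level": "B1"},
    ],
}
for p in preflight(model):
    print(p)
# model missing survey_point
# Pump 2: missing asset_id
```

A check like this cannot supply the missing asset IDs or survey point; it only moves the failure earlier, to the point in the project where the people who can fix the model still have it open.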

How we plan to support ELT workflows

Esri has been leading the way with ELT workflows for years. ArcGIS for AutoCAD has enabled direct reading of GIS content in Autodesk AutoCAD since 2007. The Autodesk Connectors for ArcGIS bring a similar direct-read workflow for GIS into Autodesk InfraWorks and Autodesk Civil 3D.

ArcGIS Pro introduced direct read of Autodesk Revit in 2018, and since then we’ve seen remarkable examples of BIM used in GIS workflows, a trend that accelerated when we introduced the Building Scene Layer in 2019. These capabilities show how combining integration patterns can enable entirely new workflows.

For the future, we are planning to implement direct read of additional data types such as Autodesk Civil 3D and IFC. Beyond that we are also working on direct connectivity with content management systems, such as Autodesk’s BIM 360. We will examine support for additional vendor and industry formats based on future availability of APIs or SDKs that facilitate easier access.

And there’s more…

Standards-based ETL

There are other patterns of integration evolving in the industry. One of them is the concept that all information exchange should happen through standards, such as the Open Geospatial Consortium’s CityGML and IFC. This can be challenging when the standards were created for separate industries and workflows and were only later glued together. Converting data from one standard to another has issues similar to those of classic ETL workflows, resulting in data loss because of missing domain or discipline information and mismatches in graphic complexity. Data loss can be magnified when converting out of one vendor format, through multiple open standard formats, and then into another vendor format.
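Why multiple hops magnify loss can be shown with simple set arithmetic: an attribute survives the chain only if every intermediate format can carry it. The formats and attribute sets below are hypothetical, and the sketch assumes attributes map one-to-one by name, which real conversions rarely do.

```python
# Hypothetical attribute sets that each format in a conversion chain can carry.
HOPS = [
    ("vendor BIM", {"id", "name", "level", "material", "fire_rating", "cost"}),
    ("open standard A", {"id", "name", "level", "material", "fire_rating"}),
    ("open standard B", {"id", "name", "level", "material"}),
    ("vendor GIS", {"id", "name", "material", "cost"}),
]


def surviving_attributes(hops):
    """Attributes that make it through every hop: the intersection of all schemas."""
    survivors = set(hops[0][1])
    for fmt, supported in hops[1:]:
        lost = survivors - supported
        survivors &= supported
        print(f"-> {fmt}: lost {sorted(lost)}")
    return survivors


print(sorted(surviving_attributes(HOPS)))
# -> open standard A: lost ['cost']
# -> open standard B: lost ['fire_rating']
# -> vendor GIS: lost ['level']
# ['id', 'material', 'name']
```

Note that `cost` is lost even though both the source and the final destination support it, because an intermediate format could not carry it. Removing hops, as a direct read does, removes those bottlenecks.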

Given the need to evolve software and standards rapidly to meet changing market expectations and technology capabilities, it is hard to see this pattern stabilizing in a way that consistently minimizes data loss for most cross-industry applications of BIM and GIS integration. Esri typically supports these patterns by using third-party libraries and tools to read open standard data. Whenever possible, we hope to reduce the number of hops in data conversion by looking at ELT workflows and tools, such as reading IFC directly into ArcGIS in the future.

The Standards-based Common Data Environment (CDE)

Another trend we see is the attempt to create a single Common Data Environment (CDE) based on one industry’s specifications or open standards. I have personally been involved in data centralization and federation projects going back to the late 1990s. The centralized database approach always seems to struggle with issues of data ownership, the ability to meet organizational change requirements, and legal constraints. As I pointed out in my previous blog post, for example, a geodatabase is an unlikely place for stamped and certified engineering drawings.

Cory Dippold, Vice President at Mott MacDonald, and others in the industry prefer to redefine CDE as a “Connected Data Environment,” in which federated data remain under the control of the appropriate domain owner in an organization and are exposed through standard services for integration and cross-domain access. I strongly support this concept and form of implementation as the most practical way forward for integrating data from multiple domains, agencies, and workflows across our large customers.
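In a connected data environment, no central copy is built; each domain owner keeps its data and answers requests through its own service. The sketch below uses hypothetical in-memory functions as stand-ins for those owners’ web services and a hypothetical asset ID. The aggregator queries every registered owner at request time and tags each answer with its source, so the system of record stays visible.

```python
from typing import Callable, Dict, List

# Each domain owner exposes its own lookup service; data stays with its owner.
# These are hypothetical in-memory stand-ins for real web services.
Service = Callable[[str], dict]


def make_service(records: Dict[str, dict]) -> Service:
    """Wrap an owner's records in a lookup function (a stand-in for a web service)."""
    return lambda asset_id: records.get(asset_id, {})


REGISTRY: Dict[str, Service] = {
    "city_transport": make_service({"BR-12": {"condition": "fair"}}),
    "regional_agency": make_service({"BR-12": {"jurisdiction": "interstate"}}),
}


def federated_view(asset_id: str, registry: Dict[str, Service]) -> List[dict]:
    """Query every owner at request time and tag each answer with its source."""
    results = []
    for owner, service in registry.items():
        record = service(asset_id)
        if record:
            results.append({"source": owner, **record})
    return results


print(federated_view("BR-12", REGISTRY))
# [{'source': 'city_transport', 'condition': 'fair'}, {'source': 'regional_agency', 'jurisdiction': 'interstate'}]
```

A production version would add per-owner authentication, timeouts, and caching, but the design choice is the same: integration happens at query time through services, not by consolidating data into one store.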

Many people naively perceive cities such as New York City, London, or San Francisco as monolithic hierarchical entities that roll all responsibility up into one officer or elected official. Large cities and conurbations are actually highly complex with multiple departments and dependencies, some of which may not be under the purview of the city exclusively. The Port Authority of New York and New Jersey is a great example of this type of organization. As an interstate agency, it exists outside the direct authority of New York City, yet the Port Authority’s influence on the City’s infrastructure, mobility, and quality of life is immense. The only way to combine data from the Port Authority with the City will be through secure, controlled services federated across multiple agencies and departments and exposed to appropriate staff and service providers to effect positive change and provide rapid response to events in the City and its environs.

We do understand that some customers will continue to build toward singular CDEs for both project delivery and asset management at the city scale. ArcGIS tools work in both circumstances, and we will likely discuss this in a later blog article.

Conclusion

The diversity of integration patterns for BIM and GIS data has increased, not decreased, over time. Newer integration patterns, such as ELT, potentially improve user experience and preserve data integrity, but require deeper knowledge of diverse domains such as GIS, architectural design, construction documentation, and asset management. Software vendors are focused on building tools to enable customers to accomplish work and are often not broad experts on the work itself. We are constantly working to improve tools and workflows so that our customers can get more of their work done faster and more cost-effectively. This requires iterative tool development with continuous feedback, and an understanding that complexity isn’t untangled by adding a new button to an interface.

We are also experiencing a time of tremendous change across every technical domain. Ever-increasing volumes of data, the transition to more sophisticated modeling and simulation of reality, and machine learning and artificial intelligence are changing our work processes, and even the quality of our work, faster than ever before. This means that our tools and our ability to access data will have to change even faster. While I don’t expect that we will be able to provide a single magic technology or data format that instantly exposes all the data an organization will ever need, a better understanding of the patterns of data use and integration will help us respond faster to change and even enable change more positively.
