This blog post provides an overview of Synthetic-Aperture Radar (SAR) to RGB image translation using the CycleGAN model recently added to the ArcGIS API for Python.

Motivation

Consider a scenario in which cloud cover prevents the use of optical imagery for Earth observation. Synthetic-Aperture Radar (SAR), an active sensing technique that supplies its own illumination, offers an alternative: its signals penetrate clouds and can still capture the desired ground imagery.

While this capability is clearly useful, SAR is a complex technology, and its imagery is hard to interpret for those unfamiliar with it. Fortunately, deep learning image translation models can convert SAR images into optical RGB images that are much easier to understand.

One such model is CycleGAN, which has recently been added to the arcgis.learn module of the ArcGIS API for Python. The rest of this post walks through the steps for using it.

It is important to note that CycleGAN is trained on unpaired data: it has no information about which RGB pixel corresponds to a given SAR pixel. As a result, it may learn a mapping that looks plausible but is wrong, for example rendering dark pixels in the source image as darker shades in the output, which is not always correct (especially over agricultural land). If results are mismatched because of this kind of wrong mapping, the Pix2Pix model, which is trained on paired data, can be used instead.
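To make the paired versus unpaired distinction concrete, here is a minimal sketch in plain Python, not the arcgis.learn implementation. It uses toy single-band "images" of 8-bit values and hypothetical generator functions `G_*` (SAR to RGB) and `F_*` (RGB to SAR). It shows only CycleGAN's cycle-consistency term (the real model also has adversarial losses, which only ask outputs to look like the target domain as a whole): an intensity-inverting mapping reconstructs its input just as perfectly as a correct one, so the cycle term cannot tell them apart, whereas a Pix2Pix-style paired L1 term can.

```python
def l1(a, b):
    """Mean absolute error between two equal-length sequences."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def cycle_consistency_loss(G, F, sar, rgb):
    """CycleGAN-style cycle term: SAR -> G -> F should give back the SAR input,
    and RGB -> F -> G should give back the RGB input. No pairing is needed."""
    forward = l1([F(G(x)) for x in sar], sar)
    backward = l1([G(F(y)) for y in rgb], rgb)
    return forward + backward


def paired_l1_loss(G, sar, rgb_target):
    """Pix2Pix-style reconstruction term: needs the true RGB value at each SAR pixel."""
    return l1([G(x) for x in sar], rgb_target)


# Toy single-band "images" with 8-bit values (hypothetical data).
sar = [30, 100, 200]
rgb_unpaired = [60, 140, 250]      # RGB samples from elsewhere (unpaired)
rgb_true = [x + 20 for x in sar]   # hypothetical ground truth at the SAR locations

# A "correct" mapping and an intensity-inverting one, each with its exact inverse.
G_good, F_good = (lambda x: x + 20), (lambda y: y - 20)
G_bad, F_bad = (lambda x: 255 - x), (lambda y: 255 - y)

cycle_good = cycle_consistency_loss(G_good, F_good, sar, rgb_unpaired)
cycle_bad = cycle_consistency_loss(G_bad, F_bad, sar, rgb_unpaired)
paired_good = paired_l1_loss(G_good, sar, rgb_true)
paired_bad = paired_l1_loss(G_bad, sar, rgb_true)

print(cycle_good, cycle_bad)    # → 0.0 0.0 (both mappings reconstruct perfectly)
print(paired_good, paired_bad)  # → 0.0 125.0 (only the paired loss penalizes inversion)
```

This is why paired training data, when available, constrains the per-pixel mapping much more tightly than unpaired data.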

Data and preprocessing

The sample data we will be using consists of single-band (HH) simulated SAR imagery from Capella Space, which we received in TIFF format, and optical RGB imagery, covering Rotterdam in the Netherlands. We converted the single-band SAR imagery to an 8-bit unsigned, 3-band raster using the Extract Bands raster function in ArcGIS Pro, which allows us to export 3-band JPEG images for data preparation.
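The post does not say how the SAR values were scaled to 8 bits. As a hedged illustration, assuming a simple linear stretch over a chosen value range, the two steps (rescale to 0-255, then repeat the single band three times so it can be written as a 3-band JPEG chip) can be sketched in plain Python as below. The function names, sample values and clip range are hypothetical; real SAR data is often stretched using percentiles or a dB scale instead.

```python
def stretch_to_uint8(values, lo, hi):
    """Linearly map values in [lo, hi] to integers 0..255, clipping values outside the range."""
    if hi <= lo:
        raise ValueError("hi must be greater than lo")
    out = []
    for v in values:
        v = min(max(v, lo), hi)                        # clip to the chosen range
        out.append(round((v - lo) / (hi - lo) * 255))  # rescale to 0..255
    return out


def replicate_band(band, n_bands=3):
    """Build an n-band raster (list of bands) by repeating one band."""
    return [list(band) for _ in range(n_bands)]       # independent copies per band


# Hypothetical single-band SAR values (one row of pixels).
sar_row = [-10.0, 0.0, 25.0, 100.0, 150.0]
eight_bit = stretch_to_uint8(sar_row, lo=0.0, hi=100.0)
three_band = replicate_band(eight_bit)
print(eight_bit)        # → [0, 0, 64, 255, 255]
print(len(three_band))  # → 3
```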

Exporting training samples

Deep learning models need training data to learn from, so we used the Export Training Data for Deep Learning tool in ArcGIS Pro to export appropriate training samples from our data. ArcGIS Pro has recently added support for exporting data in the Export Tiles format, which we used for this task. This format exports image chips of a defined size without requiring any labels. With this process, we exported 3087 chips, of which approximately 90% (2773 images) were used for training and about 10% (308 images) for validating the model.
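In the ArcGIS API for Python, prepare_data() performs the train/validation split itself, with the validation fraction set by its val_split_pct parameter. Purely to illustrate the idea, a reproducible random split of exported chip names into roughly 90% training and 10% validation can be sketched as follows; the function name, seed and file names are hypothetical, and this is not the library's code.

```python
import random


def split_chips(chip_names, val_fraction=0.1, seed=42):
    """Shuffle chip names reproducibly and split them into (train, validation) lists."""
    names = list(chip_names)
    random.Random(seed).shuffle(names)  # fixed seed -> same split on every run
    n_val = round(len(names) * val_fraction)
    return names[n_val:], names[:n_val]


# Hypothetical chip file names, one per exported chip.
chips = [f"{i:09d}.jpg" for i in range(3087)]
train, val = split_chips(chips, val_fraction=0.1)
print(len(train), len(val))  # → 2778 309
```

Fixing the seed matters when comparing training runs, since a different validation subset can shift the reported validation loss.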

Training the SAR to RGB image translation model

After exporting the training data, we used ArcGIS Notebooks and the arcgis.learn module in the Python API to train the model.

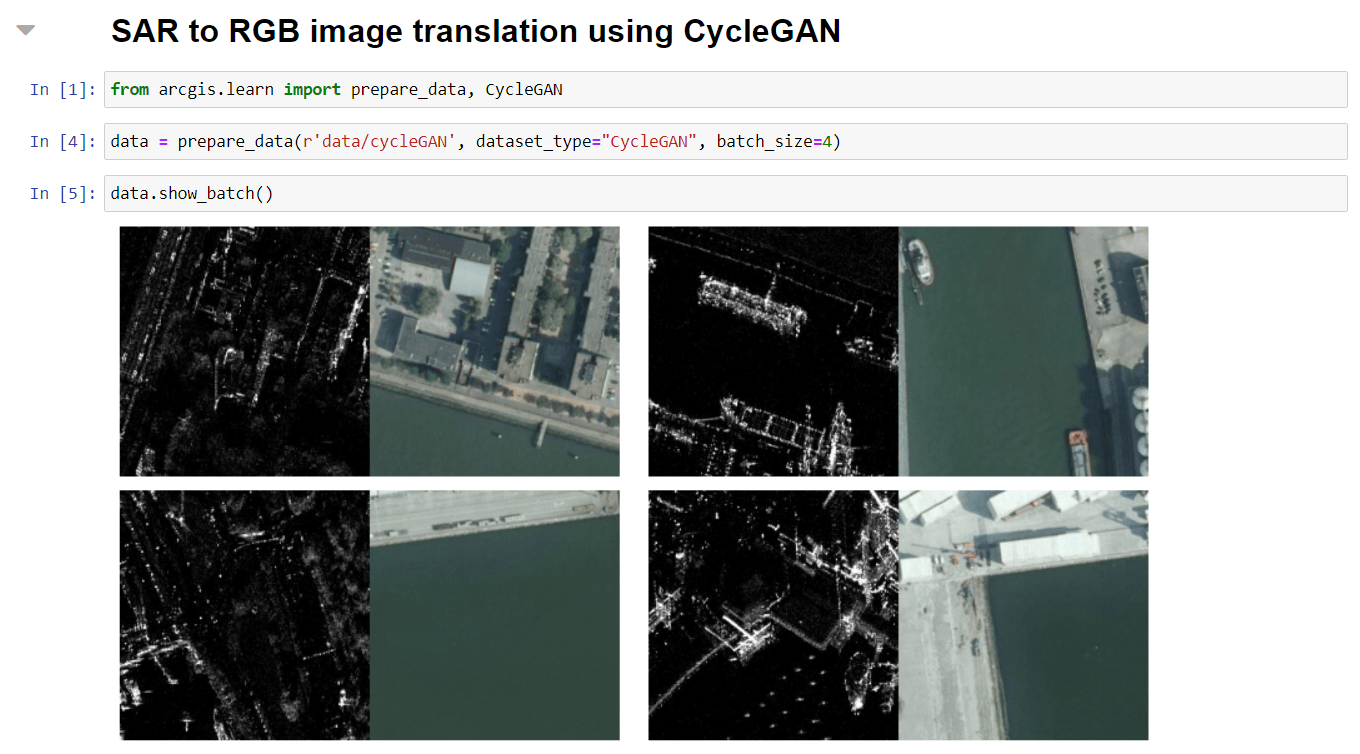

Data preparation

Before we can begin training, we need to prepare the data. To do this, we used the prepare_data() function available in the API, with the dataset_type parameter set to “CycleGAN”. The prepare_data() function creates a data object from the training data exported in the previous step. This data object consists of training and validation data sets with the specified transformations, chip size, batch size, validation split percentage, and so on.

Model Training

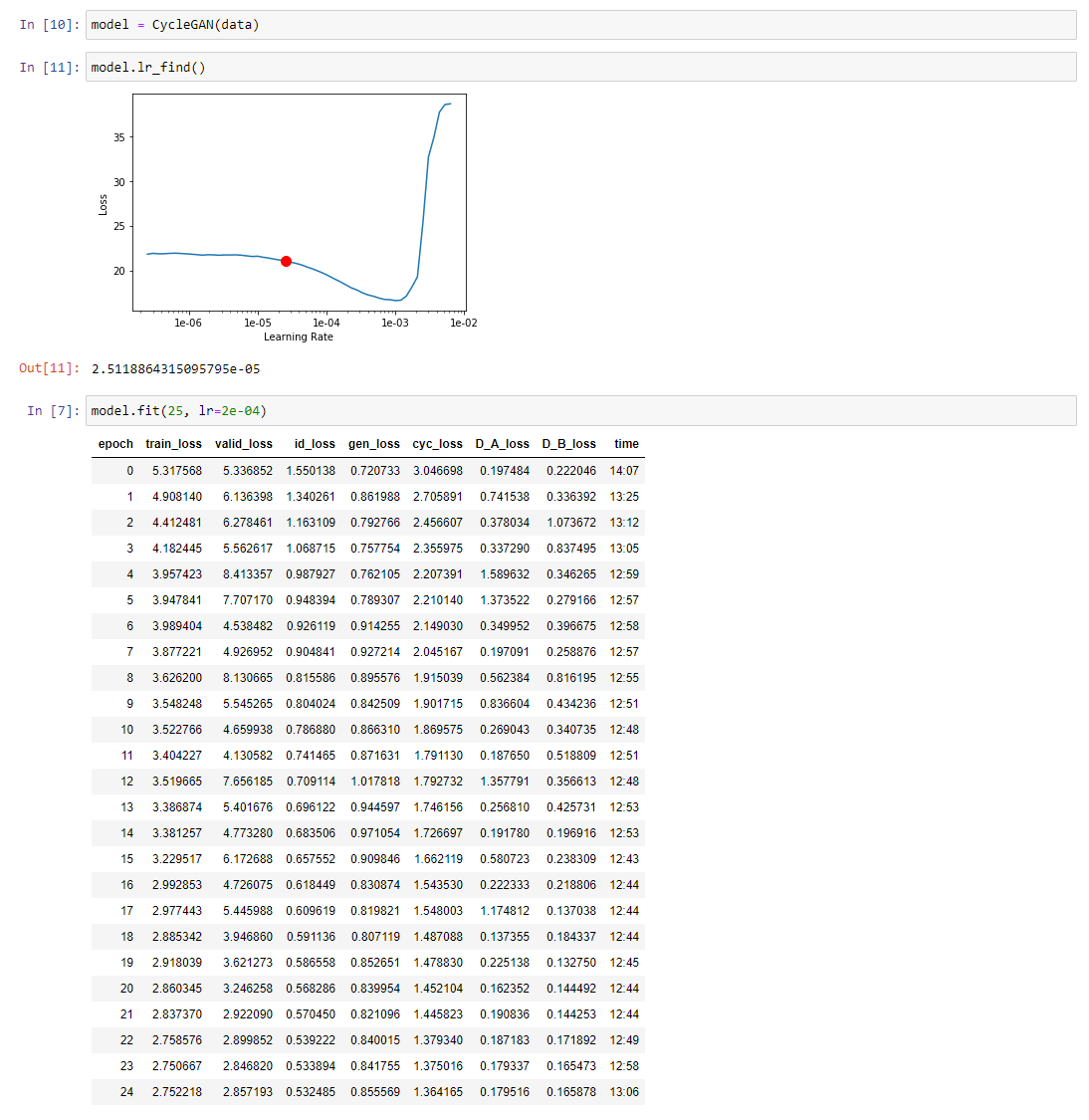

With the data prepared, we trained the model using the fit() method in the API.

From the statistics in the figure above, we can see that the validation loss continued to decrease with each epoch of training. After the initial 25 epochs it was still falling, which suggested room for improvement, so we trained the model for an additional 25 epochs.
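Deciding whether another round of training is worthwhile was a judgment call based on the loss trend. One simple, hypothetical way to make that decision explicit is to compare the mean validation loss over the most recent epochs with the mean over the window before it. The function name, window size, threshold and loss values below are illustrative assumptions, not part of arcgis.learn and not the losses from this training run.

```python
def still_improving(val_losses, window=5, min_rel_drop=0.01):
    """Hypothetical heuristic: True if the mean validation loss over the last `window`
    epochs is at least `min_rel_drop` (relative) below the mean of the window before it."""
    if len(val_losses) < 2 * window:
        return True  # not enough history to judge; keep training
    recent = sum(val_losses[-window:]) / window
    previous = sum(val_losses[-2 * window:-window]) / window
    if previous <= 0:
        return False  # guard against division by zero for degenerate losses
    return (previous - recent) / previous >= min_rel_drop


# Hypothetical per-epoch validation losses for 25 epochs.
decreasing = [1.0 - 0.02 * i for i in range(25)]
plateaued = [0.5] * 25
print(still_improving(decreasing))  # → True
print(still_improving(plateaued))   # → False
```

A relative threshold is used so the same rule works regardless of the absolute scale of the loss.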

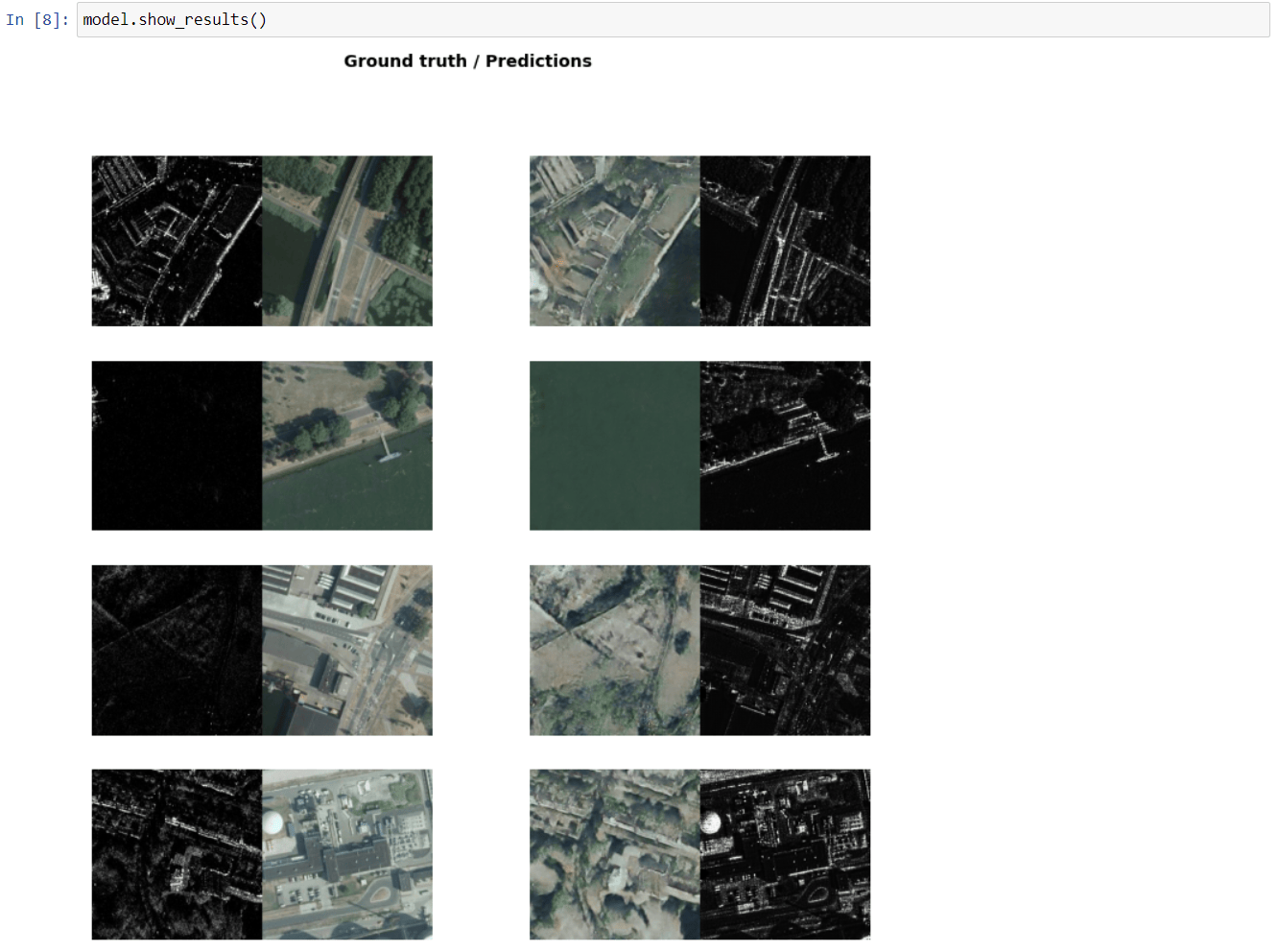

Visualize the results

Next, we validated the model visually by calling the show_results() method in the API, which displays model outputs for a few samples from the validation data set.

After 50 epochs of training, the results in the screenshot above suggest that the model converts SAR images to realistic-looking RGB images, and RGB images to SAR, reasonably well.

Inferencing

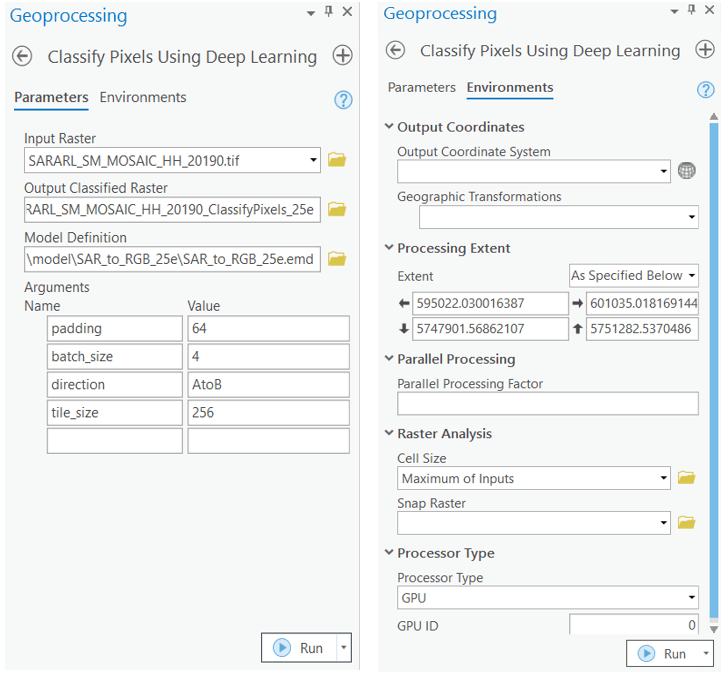

Once we were satisfied with the results of our trained model, we performed inferencing on a larger scale to convert SAR imagery to RGB. We did this by using the Classify Pixels using Deep Learning tool available in ArcGIS Pro.
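Inference on a large raster is performed chip by chip. Conceptually, this kind of large-raster inference covers the image with overlapping chips so that the less reliable pixels near each chip border can be discarded when results are stitched together. The sketch below is a hypothetical illustration, not the tool's implementation: it computes chip origins along one axis for an assumed chip size and padding, then combines them into 2-D windows.

```python
def tile_origins(length, tile, padding):
    """1-D start positions of overlapping chips of size `tile` covering `length` pixels.
    Consecutive chips overlap by 2 * padding pixels."""
    if tile <= 2 * padding:
        raise ValueError("tile must be larger than twice the padding")
    if length <= tile:
        return [0]
    stride = tile - 2 * padding
    origins = list(range(0, length - tile, stride))
    origins.append(length - tile)  # last chip sits flush with the raster edge
    return origins


def tile_windows(width, height, tile, padding):
    """(x, y) top-left corners of all chips covering a width x height raster."""
    xs = tile_origins(width, tile, padding)
    ys = tile_origins(height, tile, padding)
    return [(x, y) for y in ys for x in xs]


print(tile_origins(1000, tile=256, padding=32))  # → [0, 192, 384, 576, 744]
print(tile_windows(300, 200, tile=256, padding=32))  # → [(0, 0), (44, 0)]
```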

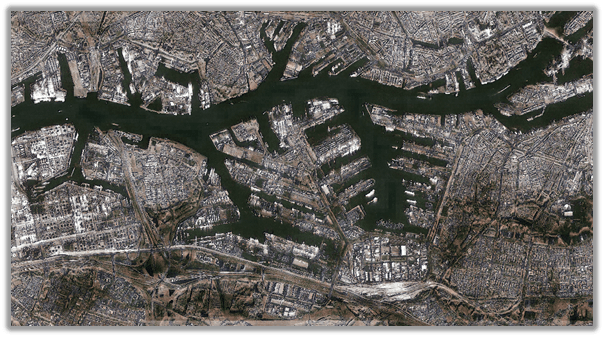

The resulting imagery is presented in the accompanying figure. You can observe that the SAR imagery is more interpretable by humans after being translated to optical imagery using the model.

Conclusion

In this post, we have seen a practical application of generative deep learning: converting Synthetic-Aperture Radar (SAR) imagery to optical RGB imagery. This is made possible by image-to-image translation models such as CycleGAN in the arcgis.learn module of the ArcGIS API for Python.

Earth observation is an important yet challenging task, especially on cloudy days. SAR to RGB image translation with models like CycleGAN can help overcome the limitations of optical imagery, and this exercise shows how generative deep learning lets us reap the benefits of SAR imagery even when clouds block the view.
