Updated 6/13/2023

- Described how to uniquely identify features within the Connectivity Results

- Added note about new functionality added in ArcGIS Enterprise 11.1

- Added a section to describe the Source Mappings element

Introduction

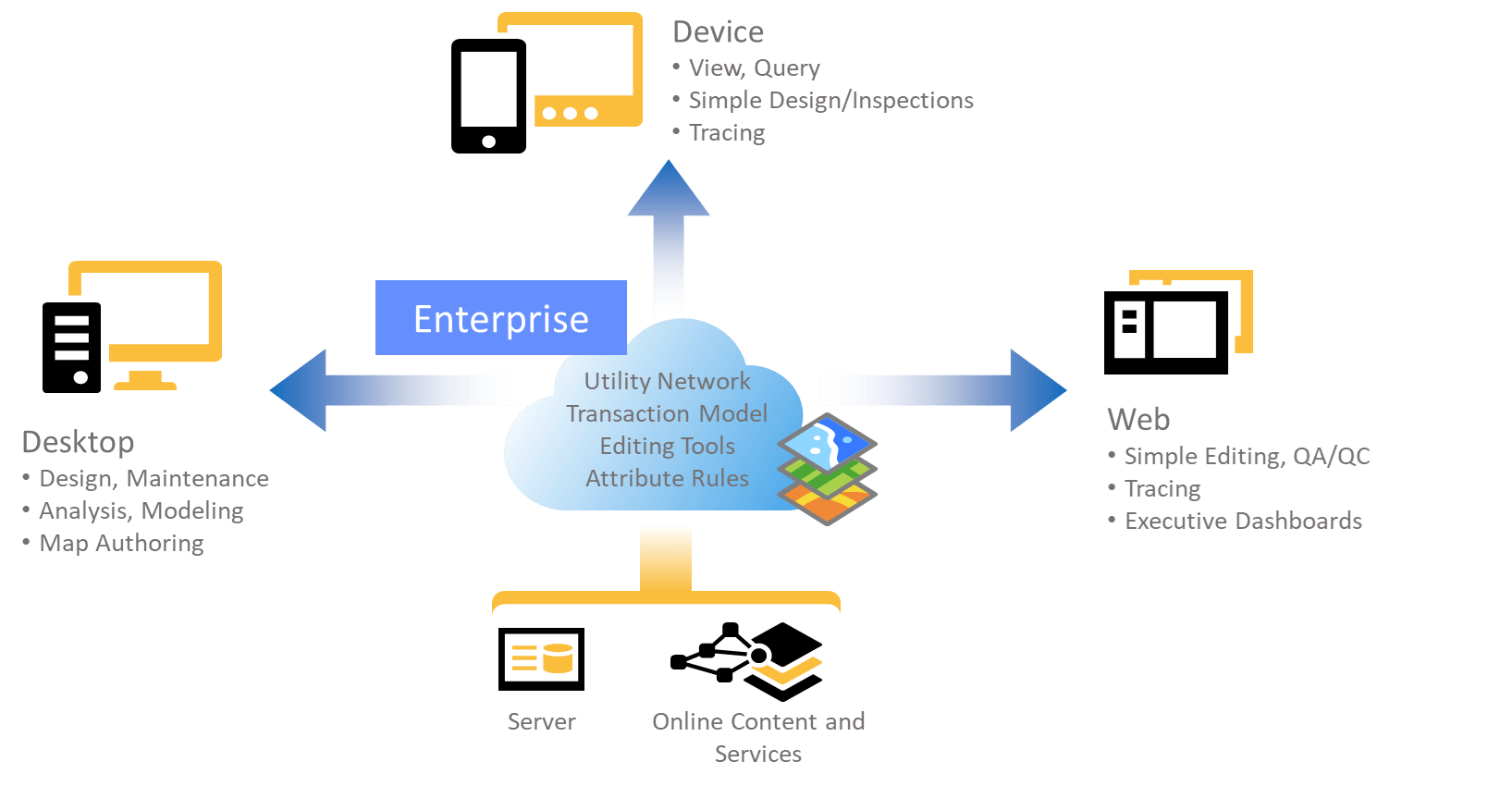

Enterprise GIS is a powerful tool for integration because it allows a company to model the logical, spatial, and topological relationships for all their data. At utility companies this is especially important because the GIS is the system of record for how the network distributes resources to customers. In earlier articles we have provided an overview of the integration strategies, as well as examples of common utility network integrations.

This article will show GIS Analysts and Developers how to extract network information from a utility network for analysis or to provide the topology to other systems by answering the following questions:

- What are the tools used to extract network data from the utility network?

- How can I control and use the output of these tools?

- What are the performance considerations for this approach?

Extracting Network Data

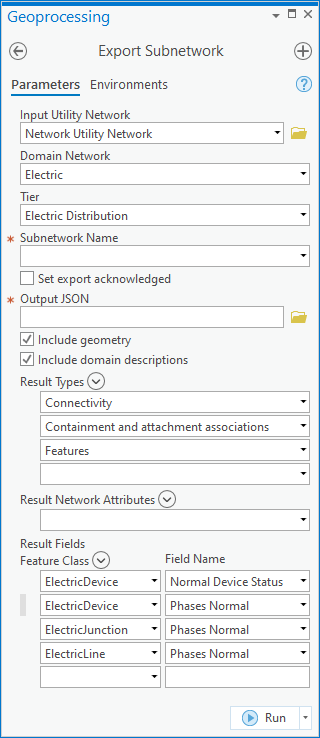

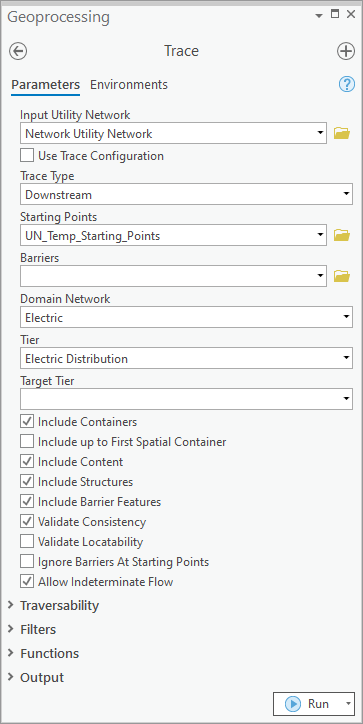

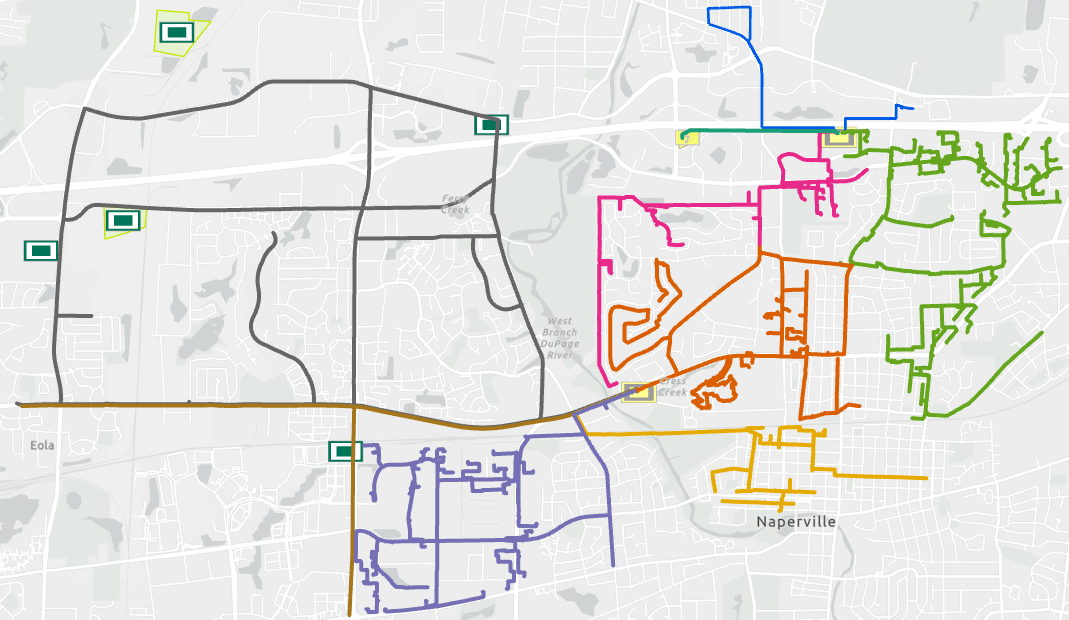

The two recommended techniques for extracting network data are to use the Export Subnetwork and Trace methods of the utility network. You can get familiar with these methods using geoprocessing tools in ArcGIS Pro. Once you’re comfortable with these methods you should review the APIs outlined in our integrations overview article, to see which API best matches the capabilities and performance required by your interface.

Export Subnetwork

The export subnetwork tool allows you to extract all the connectivity and network feature information for a specific network. If you just need to know which features belong to or support a subnetwork it is much easier and faster to use attribute queries to extract this data. The export subnetwork tool shows a fine-grained model of how features connect in the network. Engineering analysis and outage management systems often rely on this tool to import connectivity and features from the GIS.

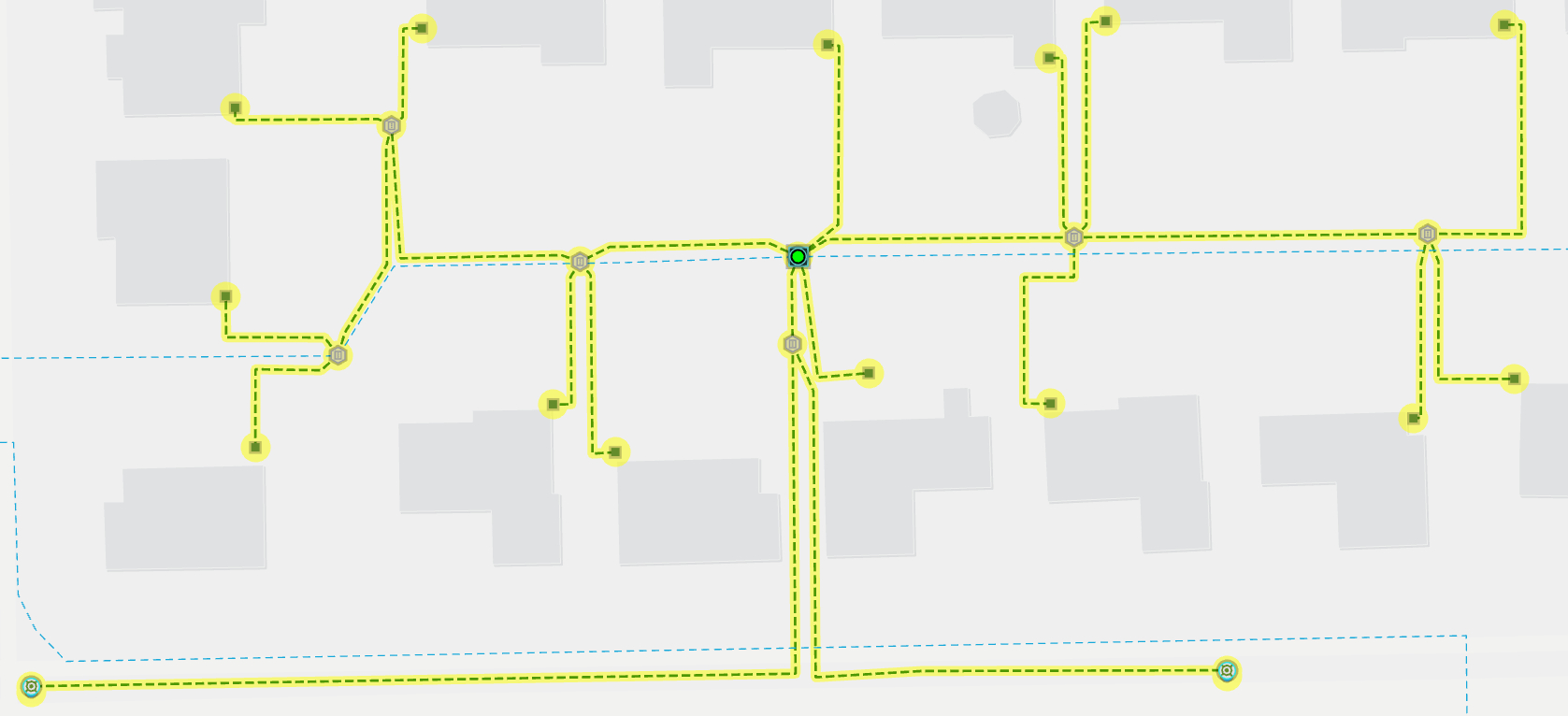

Esri has already published several blogs that go into detail on how to run this tool and consume its output: Exporting Subnetworks Part 1 and Exporting Subnetworks Part 2. We encourage you to read both articles since they provide one of the most comprehensive descriptions of how to use the export subnetwork method. The parameters and output section of this article outlines several improvements made to the APIs since those articles were written. You can experiment with this tool and its parameters using the Export Subnetwork geoprocessing tool pictured below.

Trace

Update: The ArcGIS Enterprise 11.1 release introduced the ability to export connectivity, containment, and feature result types. This brings its capabilities in line with the Export subnetwork functionality with the added benefit that it can be run on anything in the network, including a subnetwork that isn’t clean. This last use case is particularly important for customers who don’t manage the status field on their subnetworks (the Manage IsDirty configuration in their subnetwork definition is set to False) since this is the only way to export JSON for these subnetworks.

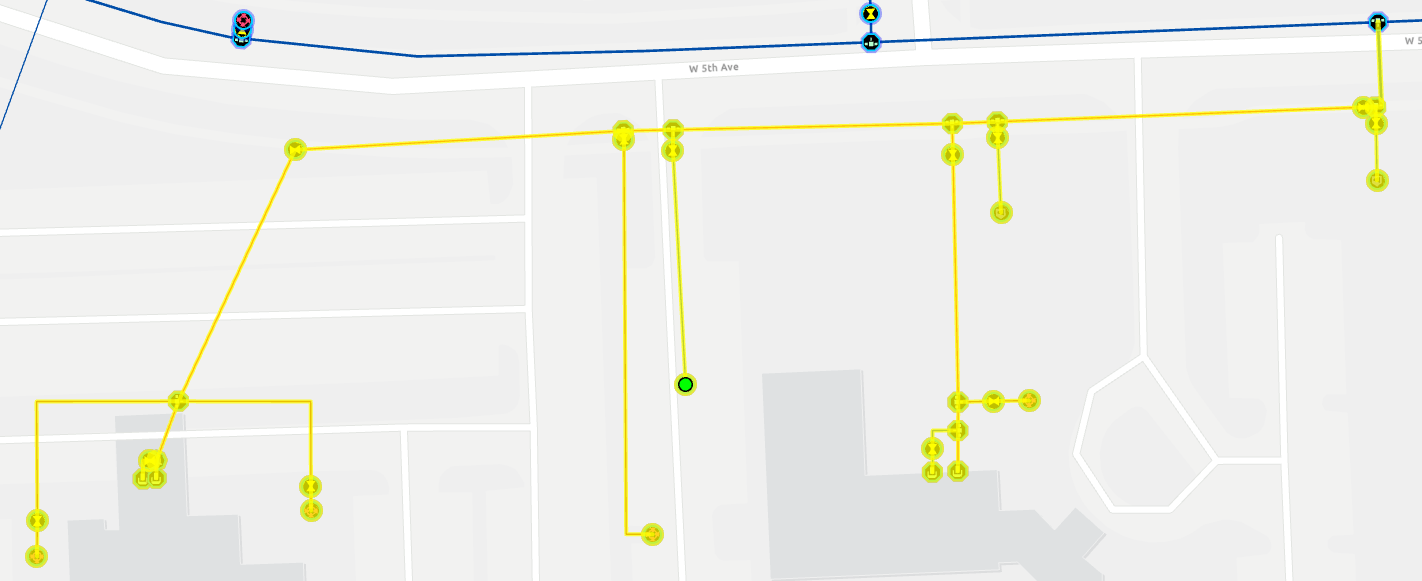

When the data you need to extract is in a single network the export subnetwork tool is the obvious choice for flexibility and ease-of-use. You should use the trace tool when you need to extract or analyze data from your network using other criteria, like everything downstream of a certain device. The trace tool will query network features, perform basic network analysis, and output the results to JSON in memory or to a flat file. This is one of the most powerful and challenging tools you can use to develop an interface because it requires a strong understanding of the network model. You must carefully design interfaces with scalability and performance in mind when using this tool. You can read more about this in the performance section of this article.

Customer Information Systems (CIS) and GIS often share information about how a customer connects to the network. This information often includes information about the location of the meter as well as how and where the customer connects to the network. As the mappers edit the GIS this information will change and the only way to truly compare these two systems is by using traces to confirm the topology in the GIS matches the information in the CIS. Depending on how large your network is this would require tens or hundreds of thousands of traces every night. I describe several techniques below that will help you design your interface to scale and meet these challenges.

Parameters and Output

The trace and export subnetwork tools have many parameters that control their output. We will describe some of the most important parameters here, along with a description of how to use the output of these tools. The export subnetwork tool has historically provided more options for how to output results, but the trace tool continues to be enhanced to provide more options for outputting results. Below we describe how to best use the following parameters when performing network analysis:

- Trace Configurations

- Source Mappings

- Attribute Descriptions

- Containment and Attachment

- Admin Export

- Connectivity Results

- Starting Locations

Trace Configurations

A trace configuration allows you to control how a trace behaves and which features should be returned. Every network has a default configuration that is used when you trace, validate, or export a subnetwork. The trace and export subnetwork tools allow you to pass in your own trace configurations that alter this behavior.

The trace tool requires that you pass in a valid combination of starting locations and a trace configuration. Because these configurations can be quite complicated, we added a feature at 10.9.1 to persist named trace configurations in the database and reference them in the trace tool. When possible, your interfaces should use named trace configurations stored in the database. This gives visibility of the configuration to a GIS SME who can test and maintain the trace configuration if any configuration changes are made to your utility network.

It is possible, but uncommon, to use a different configuration when exporting subnetworks. In our next article we show how you can use a different trace configuration to include proposed or normally de-energized features in your exports.

Source Mappings

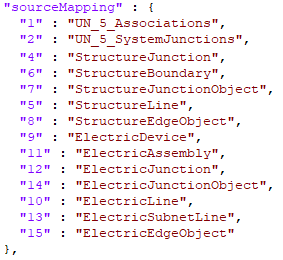

Any time a network feature is referenced in the JSON output it includes the Object ID, Global ID, and Network Source ID of the feature. While the meaning and purpose of the Object ID and Global ID values are well understood by most GIS professionals, the Network Source ID is something unique to the utility network.

The Network Source ID is an integer that uniquely identifies a layer that acts as a network source for a given utility network and is persisted in the geodatabase. This id is useful because it allows you to identify a particular layer (device, junction, etc) without needing to refer to the feature class name, layer id, or some other identifier that could potentially change.

The next question is, of course, how do you translate this network source id into an actual layer name when you do need to understand what layer a particular network feature is associated with? Any time the connectivity, containment, or features result type is selected the JSON output will also include a Source Mappings element. This element is typically located at the bottom of the file and allows you to look up the network source name associated with each network source id.
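The lookup described above can be sketched in a few lines of Python. This is only an illustration: the key name `sourceMapping`, the use of string ids, and the layer names in the sample are assumptions, so check them against the element in your own output file.

```python
import json

# Hypothetical excerpt of an export file. The "sourceMapping" key, the string
# ids, and the layer names are assumptions for illustration only.
export_text = """
{
  "sourceMapping": {
    "4": "StructureJunction",
    "9": "ElectricDevice",
    "10": "ElectricLine"
  }
}
"""

def load_source_names(export: dict, key: str = "sourceMapping") -> dict:
    """Return {network source id (int): network source name}."""
    return {int(source_id): name for source_id, name in export.get(key, {}).items()}

source_names = load_source_names(json.loads(export_text))

def layer_name(network_source_id: int) -> str:
    """Translate a network source id, with a visible fallback for unknown ids."""
    return source_names.get(network_source_id, f"<unknown source {network_source_id}>")

print(layer_name(9))
```

Converting the ids to integers once, up front, keeps later comparisons against the ids found on individual network features simple.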

Outside of using these JSON files, you can also get the network source IDs for a particular utility network by reading the schema for the utility network. This can be done in ArcPy by getting the utility network properties and looking at the ID and name for all the edge and junction sources in your utility network. You can also access this information from the REST endpoint by calling the Query Data Elements operation on the feature service and passing in the layer ID of your utility network.

Attribute Descriptions

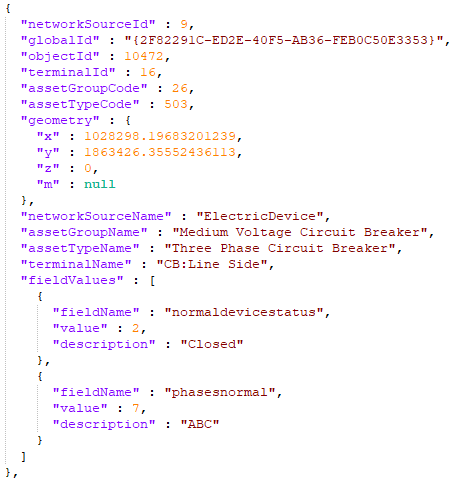

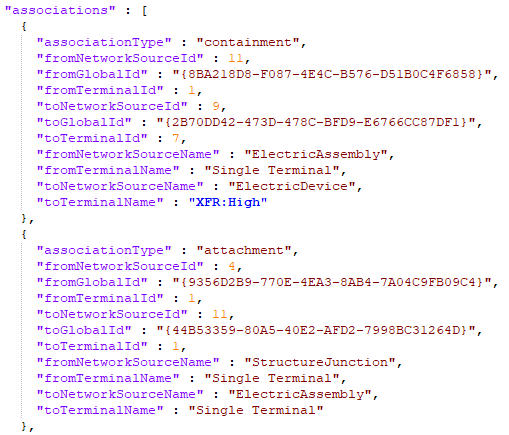

One of the biggest enhancements to the Export Subnetwork tool in 10.9.1 was the ability to include attribute descriptions. This feature is currently not available in the trace tool. When you select this option it will include coded value descriptions for attributes in your exported file. This is an important feature for integrating with non-GIS systems since it provides the underlying database values along with human readable values. This makes the file much easier to consume and understand for users who aren’t familiar with the inner workings of GIS. Here you can see a json sample that includes attribute descriptions.

Containment and Attachment

By default, the export subnetwork tool only extracts the devices, lines, and junctions that move resources in your network. When your trace configuration includes containers and structures, the tool will include the structures and assemblies that contain or are attached to features returned by your analysis. This is important because it will allow you to automatically include important assets like vaults and assemblies that have important attributes about the network features. You can see an example of the output in the included json sample.

Unfortunately, the tool doesn’t extract related non-network data. If you need to include information related to your network features, you will need to use one of the approaches outlined in the earlier articles for extracting GIS information.

Admin Export

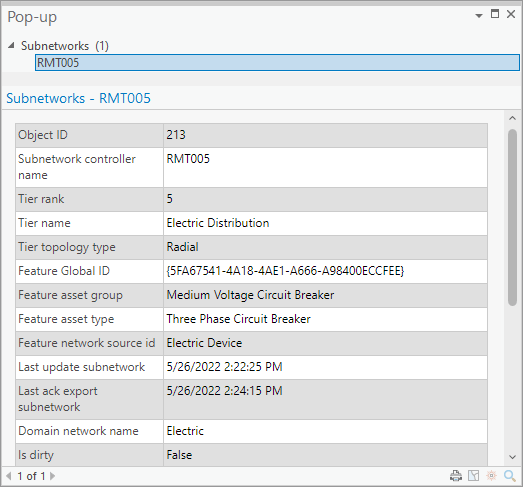

The export subnetwork tool has an option to export with acknowledgement, also known as an admin export. Running the tool in this mode will update the last exported timestamp on the corresponding subnetwork record. This timestamp makes it easy for any integration, even ones that don’t trace, to limit their processing to only the networks that have been updated since they were last exported.

Connectivity Results

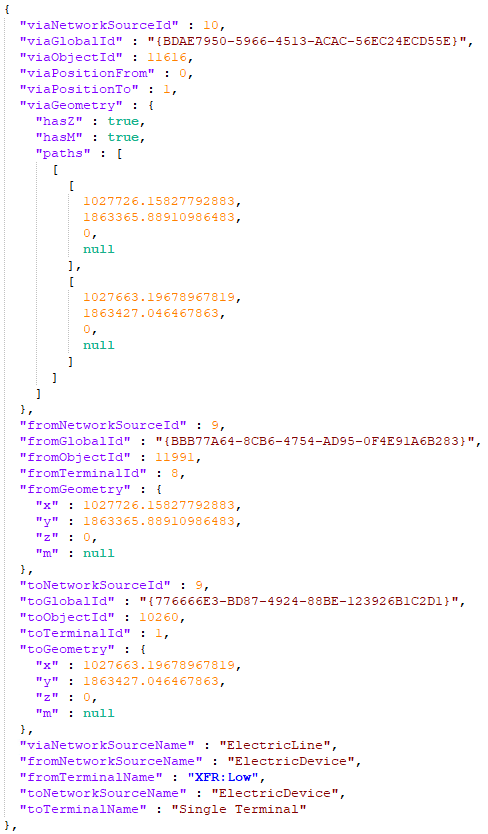

Connectivity is one of the most common, yet most complex result types for trace and export subnetwork. This result type adds an undirected graph of the topology of the network to the trace result. There are plans in future releases to add a result type for a directed graph, but this has not been implemented as of the writing of this article.

A junction that has multiple terminals may be represented multiple times in the JSON output. When analyzing JSON files, you should uniquely identify junctions using the Network Source ID, Global ID, and Terminal ID of the junction of the from/to elements.

An edge element that has a connectivity policy of Any Vertex may have junctions or devices connected midspan. In this case, there may be multiple connectivity elements within the JSON to represent each segment of the edge. When analyzing JSON files, you should uniquely identify edges using the Network Source ID, Global ID, Position From, and Position To of the via element.
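The two identification rules above can be sketched as composite keys in Python. The property names used here (`viaNetworkSourceId`, `fromTerminalId`, and so on) and the sample values are assumptions made for illustration; confirm the exact names in your own connectivity output.

```python
from typing import NamedTuple

class JunctionKey(NamedTuple):
    network_source_id: int
    global_id: str
    terminal_id: int

class EdgeKey(NamedTuple):
    network_source_id: int
    global_id: str
    position_from: float
    position_to: float

def junction_key(row: dict, side: str) -> JunctionKey:
    """Key for the 'from' or 'to' junction of one connectivity element."""
    return JunctionKey(
        row[f"{side}NetworkSourceId"],
        row[f"{side}GlobalId"],
        row[f"{side}TerminalId"],
    )

def edge_key(row: dict) -> EdgeKey:
    """Key for the via element, including the segment positions."""
    return EdgeKey(
        row["viaNetworkSourceId"],
        row["viaGlobalId"],
        row["viaPositionFrom"],
        row["viaPositionTo"],
    )

# Hypothetical sample: one line with a tap connected midspan, so the same
# line appears as two connectivity elements (assumed property names).
connectivity = [
    {"viaNetworkSourceId": 10, "viaGlobalId": "{LINE-1}",
     "viaPositionFrom": 0.0, "viaPositionTo": 0.4,
     "fromNetworkSourceId": 9, "fromGlobalId": "{DEV-A}", "fromTerminalId": 2,
     "toNetworkSourceId": 8, "toGlobalId": "{TAP-1}", "toTerminalId": 1},
    {"viaNetworkSourceId": 10, "viaGlobalId": "{LINE-1}",
     "viaPositionFrom": 0.4, "viaPositionTo": 1.0,
     "fromNetworkSourceId": 8, "fromGlobalId": "{TAP-1}", "fromTerminalId": 1,
     "toNetworkSourceId": 9, "toGlobalId": "{DEV-B}", "toTerminalId": 1},
]

edges = {edge_key(row) for row in connectivity}
junctions = {junction_key(row, side) for row in connectivity for side in ("from", "to")}
print(len(edges), "edge segments,", len(junctions), "junctions")
```

In this sample, keying edges on the Global ID alone would collapse the two segments of the line into one, which is exactly the mistake the composite key avoids.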

The from and to elements included in this export are based on the from/to location on the via element. In the case of a line this will be its first/last vertices and in the case of an association this will be the from/to elements. To determine flow direction (upstream / downstream) using this structure you must manually trace the connectivity while respecting the condition barriers and propagators of the network. You can see an example of the json connectivity elements in the file below.
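As a rough sketch of what manually tracing the connectivity can look like, the Python below walks an undirected graph outward from a known source and records each edge in the direction it was traversed. It is a simplification under stated assumptions: the network is radial, junctions are reduced to plain labels, and barriers are modeled only as a set of junctions where traversal stops. Real condition barriers and propagators depend on attributes and are not modeled here.

```python
from collections import defaultdict, deque

# Hypothetical from/to pairs as they might appear in an export. The stored
# direction follows digitization, not flow, so some pairs point "backwards".
pairs = [("B", "A"), ("B", "C"), ("D", "C")]

def orient_downstream(pairs, source, barriers=frozenset()):
    """Breadth-first walk from the source; returns (upstream, downstream) pairs."""
    adjacency = defaultdict(set)
    for a, b in pairs:
        adjacency[a].add(b)
        adjacency[b].add(a)

    directed = []
    visited = {source}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        for neighbor in sorted(adjacency[node]):
            if neighbor in visited:
                continue
            visited.add(neighbor)
            directed.append((node, neighbor))
            # A barrier junction is reached but not traversed past.
            if neighbor not in barriers:
                queue.append(neighbor)
    return directed

print(orient_downstream(pairs, "A"))
print(orient_downstream(pairs, "A", barriers={"C"}))
```

With the source at "A" every pair is reported in source-to-load order, and treating "C" as a barrier stops the walk before it reaches "D".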

Starting Locations

An important consideration when designing your interface is how you will identify the starting location for your traces. A common request is to analyze customer connections in the network. For network topologies that have a high cardinality of customers to a tap location you will need to execute many more upstream traces to produce the same results as a single downstream trace. I provide examples of this for electric, gas, and water below.

For electric networks we are most often tasked with identifying the transformer that each customer is connected to. If you are doing a system-to-system comparison, or are updating all the information on a specific subnetwork, it is most efficient to perform a downstream trace for each transformer on the circuit to discover its customers. Because it is not uncommon for a single transformer to serve four or more customers you would need to perform four times as many upstream traces to produce the same results.

Water and gas networks face a similar problem to find the service lines for a customer. Pipe networks don’t always have a clear definition of tap locations, and because there may be branch or yard lines between the customer and the main you can’t always trust spatial analysis. Because most taps serve 1 to 2 customers tracing upstream from the customer should be acceptable for most cases. You may want to develop separate logic for handling situations like apartment complexes or complex commercial areas.
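The trade-off between the two starting-location strategies is simple arithmetic, sketched below in Python. The transformer and customer identifiers are made up for illustration, and the tie-breaking rule (prefer upstream when the counts are equal) is an assumption of this sketch rather than a recommendation from the tools.

```python
# Hypothetical circuit: each transformer (tap) and the customers it serves.
customers_by_transformer = {
    "T-100": ["C-1", "C-2", "C-3", "C-4"],
    "T-101": ["C-5", "C-6", "C-7", "C-8", "C-9"],
    "T-102": ["C-10", "C-11", "C-12"],
}

def trace_counts(customers_by_tap):
    """Return (downstream traces, upstream traces) needed to cover everything."""
    downstream = len(customers_by_tap)                       # one per tap
    upstream = sum(len(c) for c in customers_by_tap.values())  # one per customer
    return downstream, upstream

def cheaper_direction(customers_by_tap):
    """Pick the direction that needs fewer traces; ties go to upstream."""
    downstream, upstream = trace_counts(customers_by_tap)
    return "downstream" if downstream < upstream else "upstream"

downstream, upstream = trace_counts(customers_by_transformer)
print(f"{downstream} downstream traces vs {upstream} upstream traces")
print(cheaper_direction(customers_by_transformer))
```

For a pipe network where most taps serve a single customer the two counts are nearly equal, which is why tracing upstream from the customer is usually acceptable there.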

Performance Considerations

When learning a new API, a common exercise is to benchmark several different approaches to see how it performs in different scenarios. In general, simple approaches tend to work well on small and medium sized datasets. Large datasets often require using different techniques that minimize overhead and rely on methods like incremental processing and parallelism to meet performance requirements.

Attribution

Trace and export subnetwork interact with the network index, and because of this there is a lower cost for these operations to return the indexed network information (elements, connectivity, etc). However, there is a higher cost associated with extracting information about features because this information must be retrieved from the other tables which requires additional queries.

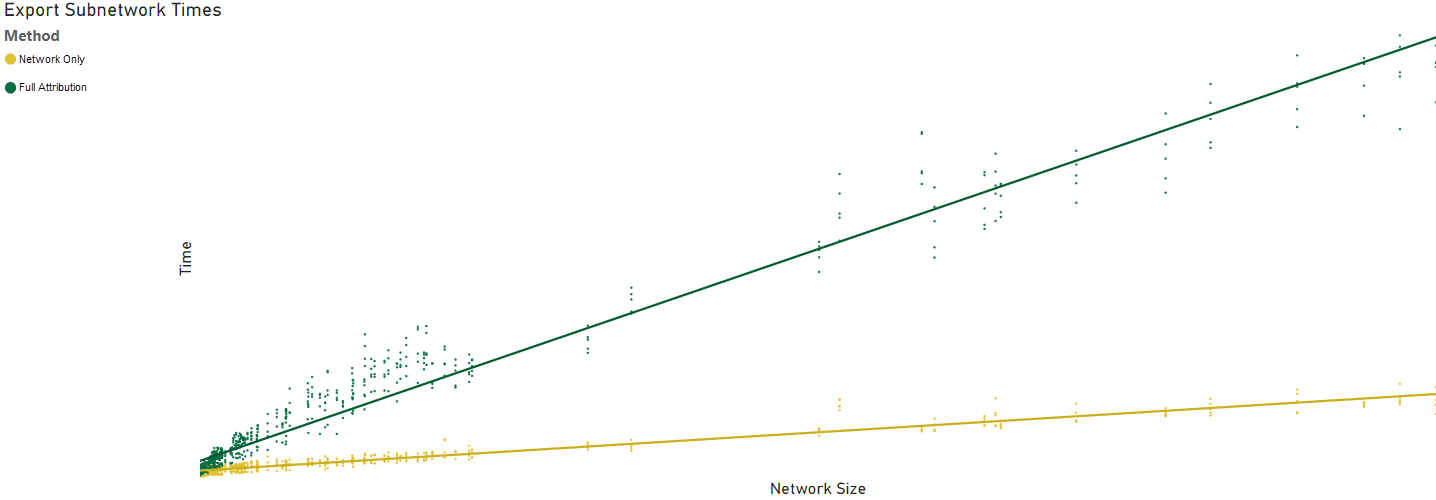

During development, or when dealing with smaller datasets, you shouldn’t be as concerned about the cost of the convenience of including this extra information. However, you will need to examine the cost of these choices when considering scaling. If your interface only needs to know which features are in a network or have a specific topological relationship (upstream / downstream / isolation) then you can improve performance by using result types and trace configurations within each tool to exclude geometry, attribution, and unnecessary features from your results. The following screenshot shows an example of a benchmark between two exports on networks of different sizes: one of these exports only returns indexed network information and the other includes all the feature information.

Disk and I/O

You should also be careful about doing any sort of IO or read/write in a tight loop. If you’ve already designed your interface to be incremental you could do all your IO and queries at the beginning and end of a batch, instead of for each individual trace. This is especially important when you need to query related tables, push updates back to the database, or write files to disk. C#, Python, and JavaScript all have different libraries that they use for interacting with file systems and serializing / de-serializing output and you should exercise caution when selecting your libraries as they can seriously affect performance. You should consider benchmarking your libraries or approaches because you may find that certain libraries or methods can provide significant advantages over others.
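A minimal Python sketch of moving file output out of the loop is shown below. The `run_trace` function is a hypothetical stand-in for whatever API call your interface makes, and the file name is illustrative only; the point is that results accumulate in memory and the file is opened once per batch.

```python
import json
import tempfile
from pathlib import Path

def run_trace(start_id):
    """Hypothetical stand-in for a real trace call; returns a small result."""
    return {"start": start_id, "elements": [start_id * 10, start_id * 10 + 1]}

def process_batch(start_ids, out_path):
    """Run every trace in the batch, then write all results in one operation."""
    results = [run_trace(start_id) for start_id in start_ids]  # no disk access here
    with open(out_path, "w", encoding="utf-8") as handle:
        json.dump(results, handle)  # single write at the end of the batch
    return len(results)

out_file = Path(tempfile.mkdtemp()) / "batch_001.json"
written = process_batch([1, 2, 3], out_file)
print(f"wrote {written} trace results to {out_file.name}")
```

The same pattern applies to database work: gather the keys you need to query or update during the batch, then issue one query or one bulk update when the batch completes.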

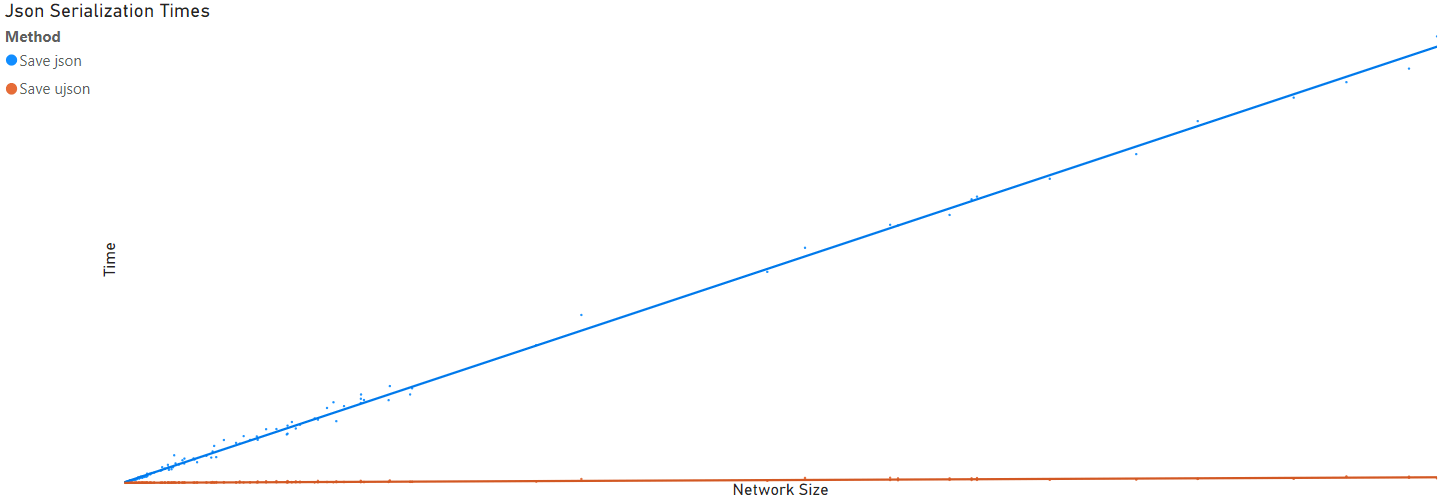

One example of this is the difference between the performance of writing trace results to disk in Python using two of the JSON libraries that are included in the default ArcGIS environment: the json library and the ultrajson library (ujson). The following shows an example benchmark where one of the libraries showed a significant advantage over the other for a particular interface.

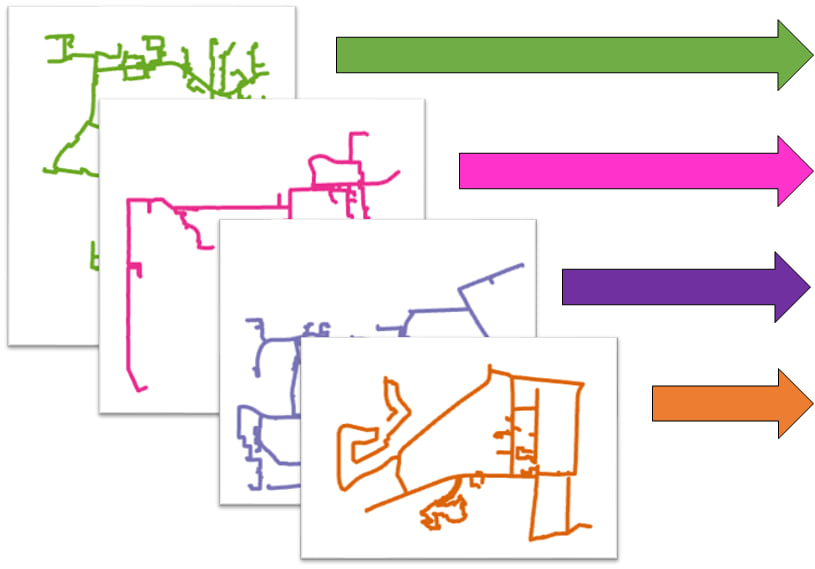
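A comparison like this can be set up with the standard `timeit` module. The sketch below is not the benchmark pictured here: the payload is synthetic, it measures serialization to a string rather than writing to disk, and it falls back to timing only the `json` library when `ujson` is not installed. Timings will vary by machine and payload, so treat the output as relative.

```python
import json
import timeit

try:
    import ujson  # optional; only timed if it is installed
except ImportError:
    ujson = None

# Synthetic payload standing in for a trace result (illustrative structure).
payload = {
    "elements": [{"objectId": i, "globalId": f"{{{i:08d}}}"} for i in range(5000)]
}

def benchmark(repeat=5, number=10):
    """Return {library name: best time in seconds for `number` serializations}."""
    candidates = {"json": json.dumps}
    if ujson is not None:
        candidates["ujson"] = ujson.dumps
    return {
        name: min(timeit.repeat(lambda f=dumps: f(payload), number=number, repeat=repeat))
        for name, dumps in candidates.items()
    }

timings = benchmark()
for name, seconds in sorted(timings.items(), key=lambda item: item[1]):
    print(f"{name}: {seconds:.4f} s for 10 serializations")
```

Taking the minimum of several repeats reduces noise from other processes, which matters when the difference between libraries is small.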

Incremental

Designing an interface to run incrementally is an important technique to make a system scalable. The easiest way to achieve this with a topology is through partitioning. The utility network is designed to partition the topology into subnetworks, which provides an easy way to design your process to run incrementally. The utility network also maintains metadata on the subnetworks that allow you to identify when a subnetwork was last modified. The most basic implementation of incrementalism with subnetworks is to identify and process subnetworks on a regular interval, with consideration given to the last processing interval and the last modified date on the subnetwork.
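The basic selection logic can be sketched in Python as follows. The record structure and field names (`is_dirty`, `last_updated`, `last_exported`) are simplified assumptions for illustration; map them to the actual fields on your subnetworks table. Dirty subnetworks are set aside in this sketch because they need to be updated before they can be exported.

```python
from datetime import datetime

# Hypothetical subnetwork records with simplified, assumed field names.
subnetworks = [
    {"name": "Feeder-101", "is_dirty": False,
     "last_updated": datetime(2023, 6, 12, 22, 0),
     "last_exported": datetime(2023, 6, 11, 23, 0)},
    {"name": "Feeder-102", "is_dirty": False,
     "last_updated": datetime(2023, 6, 1, 8, 0),
     "last_exported": datetime(2023, 6, 11, 23, 0)},
    {"name": "Feeder-103", "is_dirty": True,
     "last_updated": datetime(2023, 6, 10, 9, 0),
     "last_exported": datetime(2023, 6, 11, 23, 0)},
    {"name": "Feeder-104", "is_dirty": False,
     "last_updated": datetime(2023, 6, 5, 14, 0),
     "last_exported": None},
]

def needs_processing(row):
    """True when a clean subnetwork changed since it was last exported."""
    if row["is_dirty"]:
        return False  # set aside: update the subnetwork first
    if row["last_exported"] is None:
        return True   # never exported
    return row["last_updated"] > row["last_exported"]

to_process = [row["name"] for row in subnetworks if needs_processing(row)]
print(to_process)
```

Running this on a schedule means each run only touches the subnetworks that changed, which keeps nightly processing time proportional to the amount of editing rather than the size of the network.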

Another way is to use a polygon boundary or attribute to break your data up, although this makes it harder to use metadata to constrain processing.

When processing data in batches you should be prepared to handle a feature that is returned in multiple traces. A feature belonging to multiple result sets may be ok for certain workflows, but it can often be a sign of data problems in networks that don’t allow loops or parallel networks.
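One way to detect this situation is to index features by the traces that returned them. The Python sketch below assumes each trace result has already been reduced to a list of Global IDs; the trace names and ids are made up for illustration.

```python
from collections import defaultdict

# Hypothetical results: trace starting point -> Global IDs of returned features.
trace_results = {
    "T1": ["{M-1}", "{M-2}"],
    "T2": ["{M-3}", "{M-2}"],
    "T3": ["{M-4}"],
}

def features_in_multiple_traces(results):
    """Return {global id: sorted trace names} for features seen more than once."""
    seen = defaultdict(set)
    for trace_name, global_ids in results.items():
        for global_id in global_ids:
            seen[global_id].add(trace_name)
    return {gid: sorted(names) for gid, names in seen.items() if len(names) > 1}

print(features_in_multiple_traces(trace_results))
```

Whether a reported feature is an error or an expected overlap depends on your network, so it is usually better to log these cases for review than to silently keep the first or last result.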

Parallel

Implementing parallel processing is never an easy task, but it is a powerful final optimization that helps an interface fit in a small operating window. It is most effective when implemented in conjunction with other optimizations. All the APIs outlined in the original article support some form of parallel processing, either through threading or by designing batch processes to run concurrently. If you’ve already designed the interface to be incremental then you are ready to start looking at parallelizing. If not, you will first need to design your interface to run incrementally. After that, all you need to do is design a way to manage executing the different batches and effectively combining the results from the parallel processes, but we’ll leave that as an exercise for the reader.
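As one possible starting point for that exercise, the Python sketch below runs a batch of subnetworks through a thread pool and combines the results into a single dictionary. The `process_subnetwork` function is a hypothetical placeholder for your own export or trace logic, and a thread pool is only one option; whether threads, processes, or separate scheduled jobs fit best depends on the API you are calling.

```python
from concurrent.futures import ThreadPoolExecutor

def process_subnetwork(name):
    """Hypothetical placeholder for exporting or tracing one subnetwork."""
    # A real implementation would call the service and return its result.
    return name, {"feature_count": len(name)}

def run_parallel(names, max_workers=4):
    """Process each subnetwork concurrently and combine results by name."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # map() yields results in input order, regardless of completion order.
        return dict(pool.map(process_subnetwork, names))

combined = run_parallel(["Feeder-101", "Feeder-102", "Feeder-1030"])
print(combined)
```

Keeping each unit of work independent (one subnetwork in, one result out) is what makes the combination step trivial; shared state between workers is where most of the difficulty comes from.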
