Monitoring and Maintaining the US Power Grid Relies on Real-Time Geovisualizations

The North American Grid is an interconnected network that generates and distributes electricity throughout the continental United States and parts of Canada and Mexico.

It evolved in stages. In the 1920s, neighboring generator operators began linking their electricity production capabilities together to share peak load coverage and provide backup power for their respective users. In 1936, the US government enacted the Rural Electrification Act, which provided federal funds to create local generating plants in rural areas that previously had no electrical power. Later, the construction of long-distance transmission lines, coupled with the use of frequency converters to link incompatible generators, allowed the creation of a unified electric system throughout the United States.

Today, the North American Grid consists of a complex network of power plants and transformers that are connected by more than 450,000 miles of high-voltage transmission lines. Although responsibility for monitoring and maintaining the electrical output of different parts of the grid falls to several different entities, they must work together closely to keep all the lights on. This requires taking real-time measurements of electricity output and usage and making split-second decisions based on that large volume of data, which is why one reliability coordinator, Peak Reliability, is beginning to depend heavily on the ArcGIS platform.

A Balancing Act

Because electricity is generally not stored in bulk, it is critical to continually monitor and maintain the balance between demand and generation. Responsibility for that reliability sits at several levels. The North American Grid as a whole is overseen by the North American Electric Reliability Corporation, a nonprofit international regulatory authority that is responsible for the reliability and security of North America’s bulk power system.

Due to the size and complexity of the grid, it is divided into two primary sections, the Western Interconnection and the Eastern Interconnection, as well as three minor ones, the Québec, Texas, and Alaska Interconnections.

The Western Interconnection covers more than 1.8 million square miles and stretches from Western Canada south to Baja California in Mexico and eastward over the Rocky Mountains to the Great Plains to serve more than 80 million customers. It is characterized by long transmission lines connecting remote generation facilities to load centers. The Western Interconnection is managed by the Western Electricity Coordinating Council (WECC).

Serving the electricity coordinating councils are companies known as reliability coordinators that continually monitor the interconnection’s Bulk Electric System (the production resources, transmission lines, interconnections, and all related equipment) to ensure that it remains dependable. Peak Reliability, headquartered in Vancouver, Washington, is the primary reliability coordinator for the Western Interconnection.

“Peak determines the reliability operating limits for transmission operators, balancing authorities, and transmission service providers [working] in the Western Interconnection,” said Dayna Aronson, an enterprise solutions architect for Peak Reliability. “This means that if a company owns and operates transmission facilities, it is required to operate those facilities according to the reliability limits that Peak sets.”

These limits are based on the detailed studies WECC conducts on transmission line capabilities and the network models that Peak continually reviews as it monitors the entire Western Interconnection in real time.

“Since we archive all the real-time data related to the transmission lines throughout the Western Interconnection, we can determine what will happen to any one of the lines if it exceeds our load recommendations, by examining historical data,” said Aronson.

Big Data Runs the Show

While individual power companies model their own transmission networks and the points of interconnection with adjacent power companies, Peak Reliability is the only company in the Western Interconnection that models the entire network. Peak receives network models from all the power companies within the interconnection and then assembles more extensive models using its Energy Management System (EMS), which monitors and optimizes the performance of the transmission system in real time.

“We receive the SCADA [supervisory control and data acquisition] measurements via the Inter-Control Center Communications Protocol (ICCP) from all the individual balancing authorities and transmission operators in the Western Interconnection into our EMS and PI System,” explained Aronson. The PI System is one component of the software suite from Esri partner OSIsoft.

Peak has about 440,000 tags (specific electrical load measurements taken at distinct times) in the system, and more than 160,000 of them are SCADA points, or pieces of information—like an open or closed breaker—that are read by a device on the power system.

“We get input from [these] every 10 seconds,” said Aronson. “So the EMS PI archives increase about 5 gigabytes per day.”

In addition, Peak has deployed a synchrophasor network in the Western Interconnection. The phasor measurement unit devices in this network take electrical measurements that make Peak more aware of conditions throughout the grid and help the company respond quickly to anything unusual so it can reduce potential power outages.

“Through this network, we are collecting another 4,000 measurements that are updated 30 times per second, and the phasor archive grows about 64 gigabytes daily,” said Aronson. “In addition, there are another 150,000 elements in our PI Asset Framework that provides data that we are continually monitoring and then archiving.”
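As a rough cross-check of the figures Aronson quotes, the sampling rates can be turned into daily sample counts and then compared with the stated archive growth. The sketch below is only back-of-the-envelope arithmetic: it assumes decimal gigabytes, assumes that every point reports on every cycle, and attributes the 5 gigabytes per day to the 160,000 SCADA points alone, none of which the article confirms. The function names are illustrative.

```python
SECONDS_PER_DAY = 24 * 60 * 60
BYTES_PER_GB = 10**9  # assumption: decimal gigabytes


def samples_per_day(points: int, updates: int, per_seconds: int) -> int:
    """Daily sample count when each point reports `updates` times every `per_seconds` seconds."""
    return points * updates * SECONDS_PER_DAY // per_seconds


def bytes_per_sample(growth_gb_per_day: float, samples: int) -> float:
    """Average archived bytes per sample implied by a daily archive growth figure."""
    return growth_gb_per_day * BYTES_PER_GB / samples


# Figures quoted in the article; how they map to archive growth is an assumption.
scada_samples = samples_per_day(160_000, updates=1, per_seconds=10)
phasor_samples = samples_per_day(4_000, updates=30, per_seconds=1)

print(f"SCADA:  {scada_samples:,} samples/day, "
      f"{bytes_per_sample(5, scada_samples):.1f} bytes/sample")
print(f"Phasor: {phasor_samples:,} samples/day, "
      f"{bytes_per_sample(64, phasor_samples):.1f} bytes/sample")
```

Under these assumptions, the synchrophasor network produces several times more samples per day than the SCADA feed despite having far fewer measurement points, which is consistent with its archive growing much faster.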

All the data generated by these real-time measurements and the 8,000 substations that Peak monitors gets fed into the company’s control room. There, operators continually review more than 13,000 displays of primarily tabular data to look for system anomalies that could lead to interruptions in the grid. Beyond the sheer number of displays, Peak also faced compatibility issues among several of the systems it had developed or purchased over the years.

Because of the scale of its operations and the need to provide a method for its operators to more easily spot and resolve problems, Peak set out to develop a system based on technologies from Esri and OSIsoft that would allow it to both monitor and visualize its sensor data.

“We began the development of the Peak Visualization Platform (PVP) in late 2015, and it took us about a year and a half to complete,” said Aronson. “The PI Integrator for Esri ArcGIS was implemented to connect OSIsoft’s PI System with the ArcGIS platform to allow the visualization of SCADA and other sensor data within a geospatial context.”

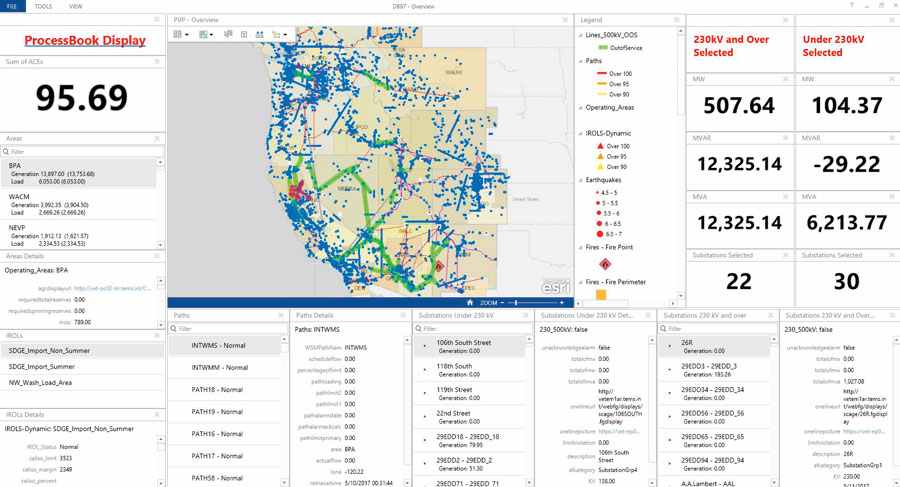

Using its network model and overlaying it with sensor and other grid data, Peak Reliability is employing Operations Dashboard for ArcGIS to visually monitor the grid.

“Essentially, we use the ICCP Protocol to transmit this data to both our EMS and our PI Data Archive application,” said Aronson. “[ArcGIS] GeoEvent Server then pulls that information from the PI archives and hands it off to ArcGIS for mapping purposes, which is how we are getting the real-time sensor data to drive the visualization of the maps in Operations Dashboard. This provides critical situational awareness for our operators when they have to make rapid decisions.”
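The core idea in this data flow, attaching a location to each sensor reading so that it can be drawn on a map, can be illustrated with a small, self-contained sketch. It does not use or imitate the PI System, GeoEvent Server, or ArcGIS APIs; instead it joins hypothetical readings to a hypothetical substation lookup and emits standard GeoJSON point features. The tag names, substation IDs, values, and coordinates are all made up for illustration.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class Reading:
    tag: str         # hypothetical tag name
    substation: str  # hypothetical substation ID
    value: float
    timestamp: str   # ISO 8601 string


# Hypothetical lookup of substation ID -> (longitude, latitude); placeholder values.
SUBSTATION_COORDS = {
    "SUB_A": (-122.67, 45.63),
    "SUB_B": (-117.29, 34.11),
}


def to_feature(reading: Reading, coords: dict) -> dict:
    """Wrap one reading as a GeoJSON point feature (GeoJSON order is lon, lat)."""
    lon, lat = coords[reading.substation]
    return {
        "type": "Feature",
        "geometry": {"type": "Point", "coordinates": [lon, lat]},
        "properties": {
            "tag": reading.tag,
            "value": reading.value,
            "timestamp": reading.timestamp,
        },
    }


def to_feature_collection(readings: list, coords: dict) -> dict:
    """Georeference every reading whose substation has known coordinates."""
    features = [to_feature(r, coords) for r in readings if r.substation in coords]
    return {"type": "FeatureCollection", "features": features}


readings = [
    Reading("SUB_A.LINE1.MW", "SUB_A", 412.5, "2016-08-16T18:00:00Z"),
    Reading("SUB_B.BRK7.STATUS", "SUB_B", 1.0, "2016-08-16T18:00:00Z"),
    Reading("SUB_X.LINE9.MW", "SUB_X", 88.0, "2016-08-16T18:00:00Z"),  # no coordinates
]
collection = to_feature_collection(readings, SUBSTATION_COORDS)
print(json.dumps(collection, indent=2))
```

Readings without a known location are dropped here for simplicity; a production system would more likely flag them for follow-up.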

Learning Lessons in a Fire

While the PVP was still under development, the Blue Cut Fire erupted in Southern California’s San Bernardino County in August 2016. The fire short-circuited a 500-kV transmission line, an outcome that Peak’s control room operators had anticipated. Beyond that, however, they did not know what to expect.

“They began to call the fire captains out in the field because they needed answers to critical questions about the fire to minimize the risk to the electrical grid,” recalled Aronson. “Some of the questions included: Where was the exact location of the fire? What direction was it moving? What transmission facilities were at risk and should be placed out of service over the coming hours?”

It took more than an hour for the operators to get a response from the field. And once they received the information, the analytics available to them could not provide the necessary geographic context. As a result, 175,000 homes and businesses in the Los Angeles area were at risk of suffering an electricity outage.

Though an outage was actually avoided by re-dispatching power generation to bypass the damaged facilities, that re-dispatch was less than optimal due to the lack of situational awareness. As a result, the local power company paid more money for the power that was redirected to Los Angeles consumers because of the tariffs involved in using electricity from outside the balancing authority.

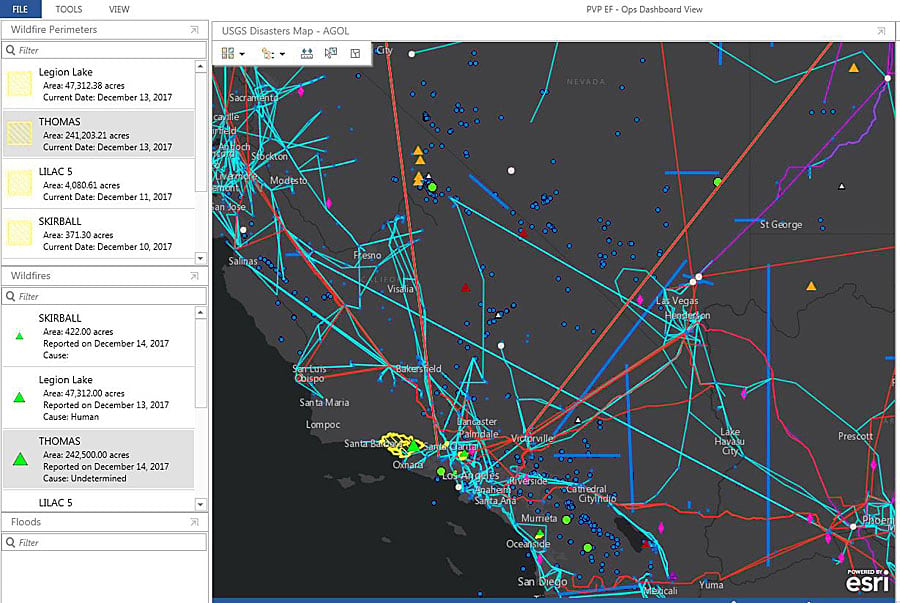

“Now with our PVP, we have real-time fire visualization—including location, size, and boundary data—that is updated every five minutes from the US Geological Survey,” said Aronson. “We can also pull down other information from the Internet that can affect electrical transmission, such as weather forecasts, impending storms, [and] natural disasters.”
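One of the questions operators asked during the Blue Cut Fire, namely which transmission facilities were at risk, is at heart a proximity query. The sketch below shows one simple way such a query could be approximated, using the haversine great-circle distance from a single fire location to each facility. It is not Peak’s method: a real fire has a perimeter rather than a single point, and the facility names, coordinates, and 25 km radius are hypothetical placeholders.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in kilometers between two (lat, lon) points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def facilities_near(fire: tuple, facilities: dict, radius_km: float) -> list:
    """Return (name, distance_km) pairs within radius_km of the fire, nearest first."""
    hits = []
    for name, (lat, lon) in facilities.items():
        distance = haversine_km(fire[0], fire[1], lat, lon)
        if distance <= radius_km:
            hits.append((name, distance))
    return sorted(hits, key=lambda hit: hit[1])


# Placeholder coordinates, not real fire or facility locations.
fire_location = (34.30, -117.50)
facilities = {
    "LINE_A_TOWER_12": (34.35, -117.45),
    "SUBSTATION_B": (35.30, -117.50),
}

for name, distance in facilities_near(fire_location, facilities, radius_km=25):
    print(f"{name}: {distance:.1f} km from fire")
```

A fuller treatment would test transmission line geometries against the fire boundary polygon and factor in the direction of spread, which is the kind of spatial analysis a GIS platform is designed to handle.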

Future Visualization Capabilities

“We have only just begun to explore the potential of the Peak Visualization [Platform],” said Aronson. “Originally, we defined about 30 use cases and have so far delivered roughly a third of them for the platform.”

One thing Peak is interested in including in the system is a geospatial context for its Real Time Contingency Analysis (RTCA) displays, which provide a five-minutes-ahead, what-if scenario analysis of possible grid conditions.

“The RTCA application simulates more than 8,000 contingencies, which it prioritizes based on their potential negative impact on the operation of the grid,” explained Aronson. “We are going to georeference the RTCA results and superimpose them over the topology of the network to give the control room operators the locational intelligence needed to make quick and well-considered decisions in the event of a facility failure, negative contingency, or other event that threatens the reliability of the grid.”
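The two steps Aronson describes, prioritizing contingencies by impact and then georeferencing the results, can be sketched in a few lines. This is a minimal illustration rather than a description of the RTCA application: the `severity` score, the contingency and facility names, and the coordinates are all hypothetical.

```python
import heapq
from dataclasses import dataclass


@dataclass(frozen=True)
class Contingency:
    name: str
    facility: str    # hypothetical facility ID
    severity: float  # hypothetical impact score; higher means worse


def top_contingencies(results: list, coords: dict, n: int) -> list:
    """Pick the n most severe contingencies and attach map coordinates to each."""
    located = [c for c in results if c.facility in coords]
    worst = heapq.nlargest(n, located, key=lambda c: c.severity)
    return [
        {
            "name": c.name,
            "severity": c.severity,
            "lon": coords[c.facility][0],
            "lat": coords[c.facility][1],
        }
        for c in worst
    ]


# Placeholder data for illustration only.
facility_coords = {"SUB_A": (-122.67, 45.63), "SUB_B": (-117.29, 34.11)}
results = [
    Contingency("Loss of line 1", "SUB_A", 0.42),
    Contingency("Loss of transformer 3", "SUB_B", 0.91),
    Contingency("Loss of line 7", "SUB_A", 0.15),
]

for row in top_contingencies(results, facility_coords, n=2):
    print(row)
```

Using `heapq.nlargest` avoids sorting the full result set when only the few highest-impact items need to be displayed, which matters more as the number of simulated contingencies grows into the thousands.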