This blog describes a few ways in which Object Tracking can be used to get the most out of Full Motion Video (FMV) data. It outlines the upcoming Object Tracking capabilities in ArcGIS Pro 2.8 and the ArcGIS API for Python.

Problem Statement

Full Motion Video captured by aerial cameras can be used to track a specific object and extract useful information, such as its location. Analysts often do this manually. Much of this work can be automated with the upcoming Object Tracking capability in ArcGIS Pro 2.8 and the ArcGIS API for Python.

Object Tracking in ArcGIS Pro

First, let's look at how the Full Motion Video extension in ArcGIS Pro can be used to analyze videos and capture critical information that can be saved directly to a geodatabase.

In this example, our goal is to track the truck as it moves along the road.

We can use the Annotate Points tool to drop points on the truck by hand. The map on the right shows the geographic coordinates of these points along with the trajectory of the camera.

Using a Deep Learning Model

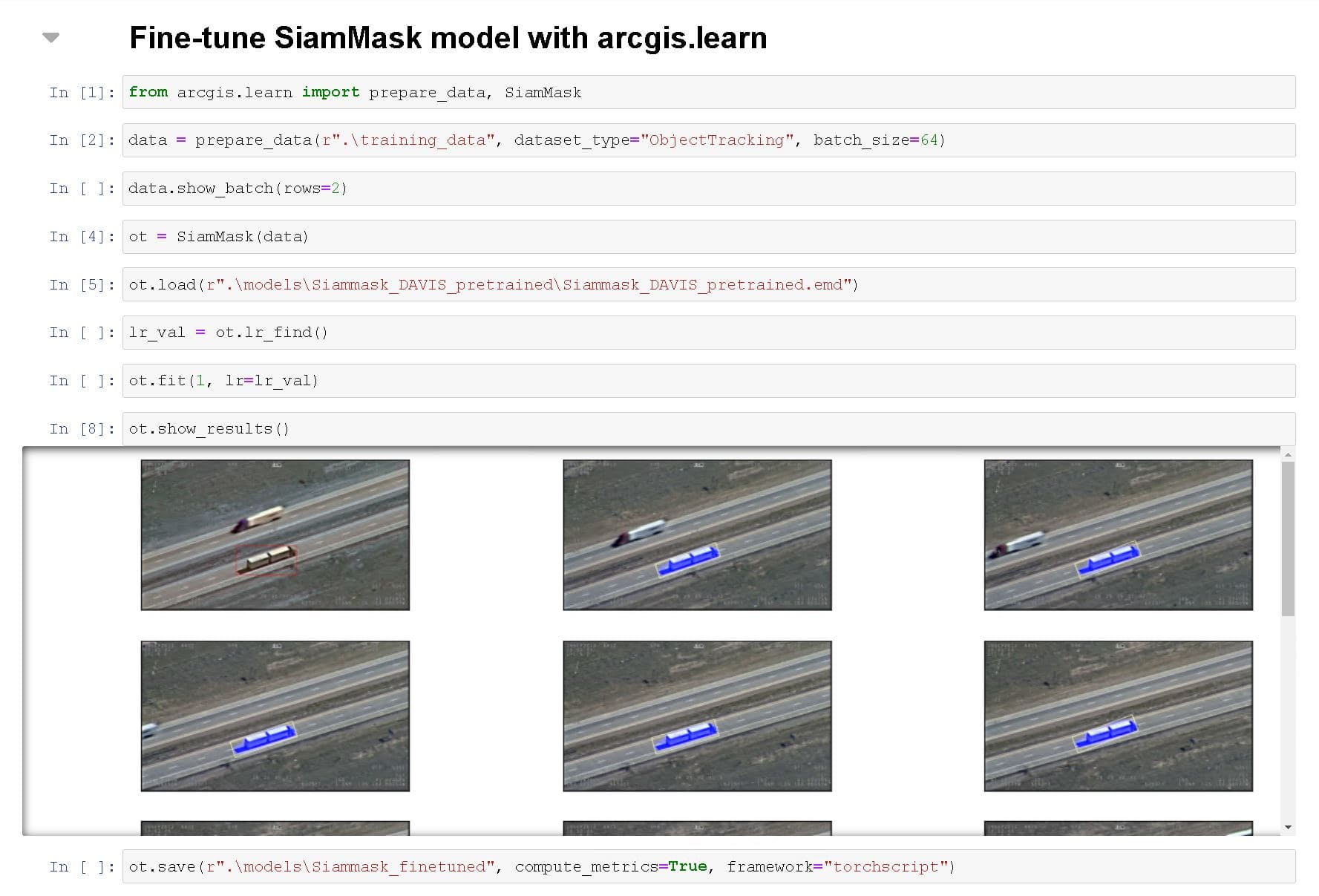

ArcGIS Pro 2.8 supports deep learning-based object tracking models, which can automate much of the workflow described above. One such model is SiamMask, which was recently added to the arcgis.learn module of the ArcGIS API for Python. The rest of this blog walks through how the model can be used.

We can either pick a pretrained object tracking model from the Living Atlas or use arcgis.learn to fine-tune a pretrained model on data specific to our use case.

The arcgis.learn module has APIs to prepare training data, load an existing pretrained model, and fit (fine-tune) that model. Once the model has been trained, it can be converted to a format that the Object Tracking tool in ArcGIS Pro can use.

Using the Object Tracking Tool

To load a pretrained or fine-tuned model, we can use the Tracking pane under the Tracking tab.

To select the object to track, we use the Add Object tool. The Object to Feature tool then automatically drops points on the map as the object moves, saving the data to a geodatabase.

The tool can also detect track failures caused by challenging conditions. When a track fails, a built-in mechanism attempts to recover the lost object.

In a few cases, the object may still need to be repositioned even after it has been recovered. We can do this with the Reposition Object tool and then continue tracking the object.
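This blog does not document how the tool decides that a track has failed or how it recovers. As a rough illustration of the general idea only, the sketch below assumes the tracker reports a confidence score every frame, declares a failure once that score stays below a threshold for a few consecutive frames, and then attempts recovery by picking the detection closest to the last confident position. The names `TrackState`, `step`, `score_threshold`, and `patience` are hypothetical and are not part of ArcGIS Pro or arcgis.learn.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

# Hypothetical box format for this sketch: (x, y, width, height) in pixels.
Box = Tuple[float, float, float, float]


@dataclass
class TrackState:
    box: Box                   # last confident position of the object
    lost: bool = False         # True once a track failure has been declared
    low_score_frames: int = 0  # consecutive frames with a low tracker score


def center(box: Box) -> Tuple[float, float]:
    x, y, w, h = box
    return (x + w / 2, y + h / 2)


def step(
    state: TrackState,
    tracker_output: Tuple[Box, float],
    detect: Callable[[], List[Box]],
    score_threshold: float = 0.5,
    patience: int = 3,
) -> TrackState:
    """Hypothetical per-frame update: accept, declare failure, or recover."""
    box, score = tracker_output
    if not state.lost:
        if score >= score_threshold:
            # Confident prediction: accept it and reset the low-score counter.
            return TrackState(box=box)
        low = state.low_score_frames + 1
        if low < patience:
            # Briefly low score: keep the last confident box and wait.
            return TrackState(box=state.box, low_score_frames=low)
        # Score stayed low for `patience` frames: declare a track failure.
        state = TrackState(box=state.box, lost=True)
    # Recovery attempt: choose the detection nearest the last confident center.
    candidates = detect()
    if not candidates:
        return state  # still lost; try again on the next frame
    cx, cy = center(state.box)
    best = min(
        candidates,
        key=lambda b: (center(b)[0] - cx) ** 2 + (center(b)[1] - cy) ** 2,
    )
    return TrackState(box=best)
```

A nearest-center rule is only one simple recovery heuristic; it can latch onto the wrong object when similar objects are close together, which is one reason a manual tool such as Reposition Object remains useful.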

Object Tracking in ArcGIS API for Python

So far, we have seen how to track an object of interest. Let us now look at another capability, which uses an object detector and an object tracker together for certain applications.

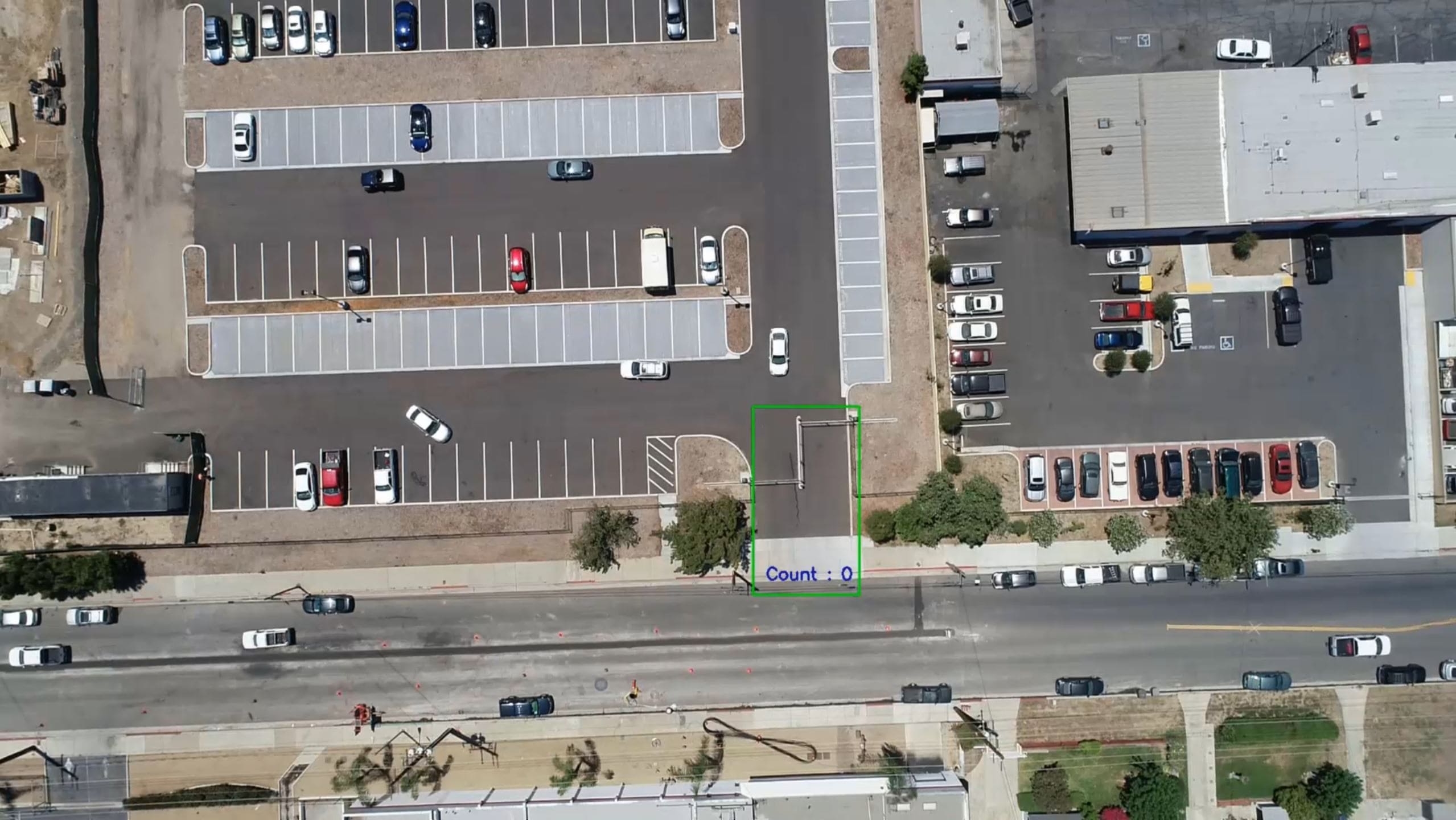

For example, the green box in the image represents a region of interest near the entry-exit gate of a parking lot. Our goal is to estimate the number of cars passing through this region.

The ObjectTracker class in arcgis.learn pairs an object detection model, such as RetinaNet, with an object tracking model, such as SiamMask. After reading frames from the video with OpenCV, we detect cars in the region of interest and track them across frames. The total number of tracks gives us the final count of cars.
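The counting idea can be sketched in plain Python. This is not the ObjectTracker API: to keep the example self-contained, it assumes per-frame car detections are already available as bounding boxes (for instance, from a detector such as RetinaNet) and replaces the SiamMask tracker with simple greedy intersection-over-union (IoU) matching between consecutive frames. A detection is used only if its center falls inside the region of interest, and the number of distinct track IDs created is the car count. The functions `iou`, `in_roi`, `count_tracks` and the `min_iou` parameter are hypothetical.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical box format for this sketch: (x1, y1, x2, y2) in pixels.
Box = Tuple[float, float, float, float]


def area(box: Box) -> float:
    return max(0.0, box[2] - box[0]) * max(0.0, box[3] - box[1])


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter = area((max(a[0], b[0]), max(a[1], b[1]), min(a[2], b[2]), min(a[3], b[3])))
    union = area(a) + area(b) - inter
    return inter / union if union > 0 else 0.0


def in_roi(box: Box, roi: Box) -> bool:
    """True if the box center lies inside the region of interest."""
    cx, cy = (box[0] + box[2]) / 2, (box[1] + box[3]) / 2
    return roi[0] <= cx <= roi[2] and roi[1] <= cy <= roi[3]


def count_tracks(frames: List[List[Box]], roi: Box, min_iou: float = 0.3) -> int:
    """Count distinct tracks formed by detections inside the ROI (greedy IoU matching)."""
    tracks: Dict[int, Box] = {}  # active track id -> box in the previous frame
    next_id = 0
    for detections in frames:
        updated: Dict[int, Box] = {}
        for det in (d for d in detections if in_roi(d, roi)):
            best_id: Optional[int] = None
            best_score = min_iou
            for tid, box in tracks.items():
                if tid in updated:
                    continue  # each track takes at most one detection per frame
                score = iou(det, box)
                if score >= best_score:
                    best_id, best_score = tid, score
            if best_id is None:
                # No overlapping track: this detection starts a new track.
                best_id = next_id
                next_id += 1
            updated[best_id] = det
        tracks = updated  # tracks with no match in this frame are dropped
    return next_id
```

Because unmatched tracks are dropped immediately, a car missed by the detector for a single frame would be counted twice; a learned tracker that follows the object's appearance between frames is one way to reduce that kind of double counting.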

Conclusion

The two capabilities described in this blog demonstrate how Object Tracking can automate critical business workflows. To delve deeper into these capabilities and get started, please refer to the links below:

- Object tracking in motion imagery—ArcGIS Pro | Documentation

- Full Motion Video player—ArcGIS Pro | Documentation

- Introduction to Full Motion Video—ArcGIS Pro | Documentation

- Install deep learning frameworks for ArcGIS—ArcGIS Pro | Documentation

- Deep learning in ArcGIS Pro—ArcGIS Pro | Documentation

- ArcGIS API For Python | ArcGIS for Developers

- learn module — arcgis 1.8.5 documentation
