Satellite imagery can contain a wealth of information, from the number of buildings in a city to the type of crops being grown in fields across the world. But extracting this data from an image is more complicated than working with vector datasets. Historically, to extract the buildings, or swimming pools, or palm trees in an image you would have needed to manually digitize each feature, a process that could take weeks or years depending on the size of the image. But with improvements in computing power and new, accessible tools for deep learning in ArcGIS Pro, anyone can train a computer to do the work of identifying and extracting features from imagery.

At the highest level, deep learning, which is a type of machine learning, is a process where the user creates training samples, for example by drawing polygons over rooftops, and the computer model learns from these training samples and scans the rest of the image to identify similar features. This blog post will be the first in a three-part series diving deeper into the process, starting with the software and hardware requirements to run a deep learning model in ArcGIS Pro.

Deep learning prerequisites

Deep learning is a type of machine learning that relies on multiple layers of nonlinear processing for feature identification and pattern recognition, described in a model. Deep learning models can be integrated with ArcGIS Pro for object detection, object classification, and image classification.

There will be many acronyms used in this blog, so we have defined them here for your reference:

GPU: graphics processing unit

CPU: central processing unit

RAM: random access memory

CUDA: Compute Unified Device Architecture

DLPK: ArcGIS Pro deep learning model package

Inferencing is the process in which information learned during deep learning training is put to work detecting similar features in other datasets. ArcGIS Pro uses an external third-party framework and a model definition file to run the inference geoprocessing tools. Model definition files and deep learning packages (.dlpk) can be used multiple times as inputs to the geoprocessing tools, allowing you to assess multiple images over different locations and time periods using the same trained model.

Pretrained deep learning packages (dlpks) are becoming more readily available as the trend of deploying deep learning workflows shifts from complex Python scripts to out-of-the-box tools. In this blog series, we will cover the prerequisite steps and some tips and tricks to run a seamless deep learning inferencing process. We will also dive into configuring the key parameters for those who are interested in understanding best practices in deploying deep learning tools.

This blog series will cover a step-by-step workflow that will help you run a deep learning exercise in ArcGIS Pro. We will also go over some essential checkpoints to ensure that you can run the tools without facing any unexpected errors.

Before we can train or run any models, we need to ensure that your environment is set up to perform deep learning. This post will walk you through the following checklist:

- GPU availability, specifications, and compatibility

- CUDA installation and CUDA prerequisites

- ArcGIS Pro availability and version

- Deep learning framework for ArcGIS Pro installation

- Communicating with the GPU

After we have ensured the prerequisites above are satisfied, we will dive into the best practices when running the out-of-the-box deep learning tools.

GPU availability, specifications, and compatibility

A graphics processing unit (GPU) is a specialized electronic circuit designed to rapidly manipulate and alter memory to accelerate the creation of images in a frame buffer intended for output to a display device. GPUs are used in embedded systems, mobile phones, personal computers, workstations, and game consoles. Modern GPUs are very efficient at manipulating computer graphics and image processing. Their highly parallel structure makes them more efficient than general-purpose central processing units (CPUs) for algorithms that process large blocks of data in parallel. (Source: Denny Atkin, "The Right GPU for You," Computer Shopper. Archived from the original on 2007-05-06. Retrieved 2007-05-15.)

CUDA (Compute Unified Device Architecture) is a parallel computing platform and application programming interface (API) model created by Nvidia (source: Nvidia CUDA home page). It allows software developers and software engineers to use a CUDA-enabled GPU for general-purpose processing, an approach termed GPGPU (general-purpose computing on graphics processing units). The CUDA platform is a software layer that gives direct access to the GPU’s virtual instruction set and parallel computational elements for the execution of compute kernels. (Source: Fedy Abi-Chahla, "Nvidia’s CUDA: The End of the CPU?," Tom’s Hardware, June 18, 2008. Retrieved May 17, 2015.)

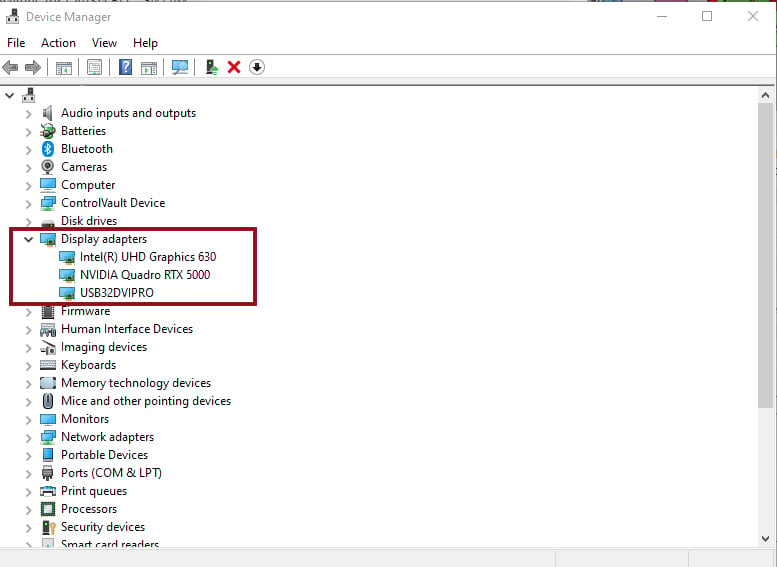

The most essential prerequisite for successfully executing deep learning is having the right GPU. (You can run this workflow on your machine’s CPU, but the process will be significantly slower and will place a heavy load on the CPU.) To check which GPU is available on your machine, follow these steps:

- Open the start menu on your machine, type Device Manager, and press Enter.

- Locate the Display adapters option, and click the drop-down arrow to expand it.

- Look for an NVIDIA adapter in the expanded list.

This is the first checkpoint for a successful deep learning environment setup. If you have an NVIDIA GPU on your machine, the next question is whether that GPU is CUDA compatible. If you don’t have an NVIDIA GPU, you can run this workflow using your CPU, but this is not recommended: the tools will take longer to run and the workflow is CPU-intensive. The best solution is to locate a machine with an NVIDIA card.

Many machines come with a built-in GPU, but to work with the deep learning tools in ArcGIS Pro, you need a CUDA-compatible NVIDIA GPU. This is essential before proceeding to the next steps of setting up your environment. To find out whether your GPU supports CUDA, check the list of supported GPUs on the CUDA Wikipedia page. For this workflow, we recommend NVIDIA GPUs with a CUDA compute capability of 6.0 (in other words, Pascal architecture) or newer, with 8 GB or more of dedicated GPU memory. Note that certain models and architectures require much more GPU memory. See GPU processing with Spatial Analyst for more information on GPUs and ArcGIS Pro.
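The recommendation above boils down to two thresholds, and it can help to write them out as a check. The following is a minimal sketch, not an Esri tool: the `GpuSpec` class and `check_gpu` function are hypothetical names, and the example cards and their specifications are typed in by hand for illustration, so verify any values for your own card against the vendor's published table.

```python
from dataclasses import dataclass

# Thresholds taken from the recommendation in this post.
MIN_COMPUTE_CAPABILITY = (6, 0)  # Pascal architecture or newer
MIN_MEMORY_GB = 8                # dedicated GPU memory


@dataclass
class GpuSpec:
    """Hypothetical container for hand-entered GPU specifications."""
    name: str
    compute_capability: tuple  # (major, minor), for example (6, 1)
    memory_gb: float


def check_gpu(spec):
    """Return a list of shortfalls; an empty list means both thresholds are met."""
    problems = []
    if spec.compute_capability < MIN_COMPUTE_CAPABILITY:
        problems.append("compute capability below 6.0")
    if spec.memory_gb < MIN_MEMORY_GB:
        problems.append("less than 8 GB of dedicated memory")
    return problems


# Illustrative values only; confirm them for your own hardware.
cards = [
    GpuSpec("GeForce GTX 1080", (6, 1), 8),
    GpuSpec("GeForce GTX 1060", (6, 1), 6),
    GpuSpec("GeForce GTX 980", (5, 2), 4),
]

for card in cards:
    problems = check_gpu(card)
    status = "meets the recommendation" if not problems else "; ".join(problems)
    print(f"{card.name}: {status}")
```

A card that falls short on memory alone may still run smaller models, so treat the result as guidance rather than a hard limit.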

CUDA installation and CUDA prerequisites

Next, you need to install the required CUDA libraries and dependencies. As mentioned earlier, CUDA allows a CUDA-enabled GPU to be used for general-purpose processing. In the case of deep learning, it enables the geoprocessing tools to communicate with the GPU. The Nvidia website provides a detailed guide on how to install CUDA. Below are the system requirements for CUDA as listed in the Nvidia documentation.

To use CUDA on your system, you will need the following installed:

- A CUDA-capable GPU

- A supported version of Microsoft Windows

- A supported version of Microsoft Visual Studio

- The NVIDIA CUDA Toolkit

Don't worry about installing the right Nvidia driver on your machine. As you go through the steps of installing CUDA, the installer will add any missing drivers automatically.

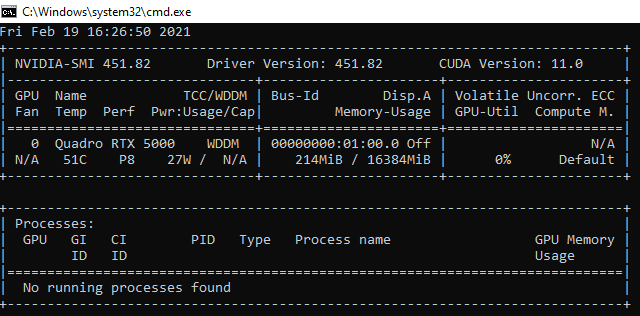

Once the steps above are completed, open a command line prompt and type nvidia-smi. You should see a response similar to the following screen capture:
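The same check can be scripted. The sketch below is one possible way to call nvidia-smi from Python and read back the GPU name, driver version, and total memory. The function names `parse_gpu_csv` and `query_gpus` are hypothetical, and the sketch assumes nvidia-smi is on your PATH; if it is not found, the function simply returns an empty list.

```python
import shutil
import subprocess

# Fields requested from nvidia-smi; memory.total is reported in MiB.
QUERY_FIELDS = ["name", "driver_version", "memory.total"]


def parse_gpu_csv(text):
    """Turn nvidia-smi CSV output (no header, no units) into a list of dicts."""
    gpus = []
    for line in text.strip().splitlines():
        parts = [part.strip() for part in line.split(",")]
        if len(parts) != len(QUERY_FIELDS):
            continue  # skip lines that do not match the expected layout
        gpus.append(dict(zip(QUERY_FIELDS, parts)))
    return gpus


def query_gpus():
    """Ask nvidia-smi for GPU details; return [] if it is missing or fails."""
    exe = shutil.which("nvidia-smi")
    if exe is None:
        return []
    try:
        result = subprocess.run(
            [exe,
             "--query-gpu=" + ",".join(QUERY_FIELDS),
             "--format=csv,noheader,nounits"],
            capture_output=True,
            text=True,
        )
    except OSError:
        return []
    if result.returncode != 0:
        return []
    return parse_gpu_csv(result.stdout)


gpus = query_gpus()
if not gpus:
    print("No GPU reported. Check that the NVIDIA driver and CUDA are installed.")
for gpu in gpus:
    print(f"{gpu['name']}: driver {gpu['driver_version']}, {gpu['memory.total']} MiB")
```

An empty result here usually points back to the earlier checkpoints: either there is no NVIDIA GPU, or the driver installation did not complete.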

ArcGIS Pro availability and version

At this stage, you should have a GPU that is ready to use. You will now move on to setting up your ArcGIS Pro environment. First, we need to determine which ArcGIS Pro version and extensions you have:

- If you have ArcGIS Pro installed, skip to step 4. If not, sign in to your My Esri account.

- Browse to My Organizations > Download, select ArcGIS Pro, and download it.

- Browse to Licensing and download your ArcGIS Pro license with the Image Analyst license.

- Open a new instance of ArcGIS Pro.

- Click Settings.

- Browse to About in the menu on the right side.

- Under Product Information, make sure you are running the latest ArcGIS Pro version. At the time of writing, the current version is 2.7.

- Close ArcGIS Pro when done.

Note that the deep learning workflow documented here is also supported with ArcGIS Pro 2.6.
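If you prefer to confirm the version from Python, a small comparison like the one below may help. This is a sketch under assumptions: `parse_version`, `meets_minimum`, and `installed_pro_version` are hypothetical helper names, the 2.6 minimum comes from the note above, and the lookup relies on `arcpy.GetInstallInfo()`, which is only available inside an ArcGIS Pro Python environment.

```python
def parse_version(text):
    """Convert a dotted version string such as '2.7' into a tuple of ints."""
    return tuple(int(part) for part in text.split("."))


def meets_minimum(installed, minimum="2.6"):
    """Return True if the installed version is at least the minimum."""
    return parse_version(installed) >= parse_version(minimum)


def installed_pro_version():
    """Return the ArcGIS Pro version string, or None if arcpy is unavailable."""
    try:
        import arcpy  # only importable in an ArcGIS Pro Python environment
    except (ImportError, RuntimeError):
        return None
    return arcpy.GetInstallInfo()["Version"]


version = installed_pro_version()
if version is None:
    print("arcpy is not available in this Python environment.")
elif meets_minimum(version):
    print(f"ArcGIS Pro {version} supports this workflow.")
else:
    print(f"ArcGIS Pro {version} is older than 2.6; consider updating.")
```

Comparing tuples of integers rather than raw strings avoids the pitfall where "2.10" would sort before "2.6" alphabetically.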

Deep learning framework for ArcGIS Pro installation

Once you are done installing or updating ArcGIS Pro, you are ready to install the dependency libraries within the Python environment. Browse to the deep learning libraries installers for ArcGIS page. If you want to install the deep learning libraries in your default Python environment, complete the first set of steps below. If you want to preserve your default arcgispro-py3 environment and run the deep learning tools in a dedicated new environment, skip to the section ‘Installing the dependencies on a cloned Python environment’.

Installing the dependencies on the default Python environment

- Browse to the Download section and click the Deep Learning Libraries Installer that matches your installed ArcGIS Pro version (for example, Deep Learning Libraries Installer for ArcGIS Pro 3.1).

- Locate the downloaded zip file on your local machine and extract it to a location of your choice.

- Run the installer.
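After the installer finishes, a quick way to see whether the libraries landed in the active Python environment is to look for them without importing them. The sketch below is only an illustration: `find_installed` is a hypothetical helper, and the package names in `EXPECTED_PACKAGES` are an assumption about what the installer provides, so adjust the list to match the installer's own documentation.

```python
import importlib.util

# Assumed package names; check the installer's documentation for the real list.
EXPECTED_PACKAGES = ["torch", "fastai", "arcgis"]


def find_installed(names):
    """Map each top-level package name to True if it can be located."""
    return {name: importlib.util.find_spec(name) is not None for name in names}


for name, found in find_installed(EXPECTED_PACKAGES).items():
    print(f"{name}: {'found' if found else 'missing'}")
```

Using `find_spec` only locates a package on disk, so it is fast and avoids the side effects of a full import; it does not prove that the package loads correctly.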

Testing CUDA in DL Environment

The last step in getting your deep learning environment ready is testing what you deployed in the previous steps.

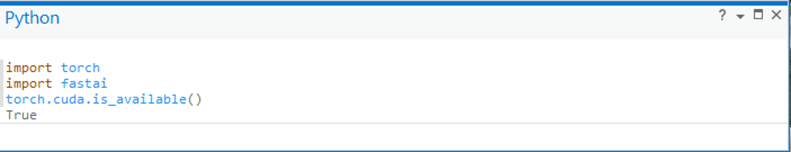

- Start ArcGIS Pro and create a project.

- Click Analysis on the main ribbon > under the Geoprocessing section, click the drop-down arrow next to Python > click Python Window.

- In the opened Python window, run the below code snippet:
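A minimal check along these lines, assuming the deep learning libraries installed PyTorch into the active environment, might look like the following. The `cuda_status` function is a hypothetical wrapper added here so the check also fails gracefully when PyTorch is missing; the calls it relies on are `torch.cuda.is_available()`, `torch.cuda.device_count()`, and `torch.cuda.get_device_name()`.

```python
def cuda_status():
    """Report whether PyTorch is installed and whether it can see a CUDA GPU."""
    try:
        import torch
    except ImportError:
        return {"torch_installed": False, "cuda_available": False, "devices": []}

    available = bool(torch.cuda.is_available())
    devices = []
    if available:
        # List every GPU that PyTorch can communicate with.
        devices = [torch.cuda.get_device_name(i)
                   for i in range(torch.cuda.device_count())]
    return {"torch_installed": True, "cuda_available": available, "devices": devices}


status = cuda_status()
print("PyTorch installed:", status["torch_installed"])
print("CUDA available:", status["cuda_available"])
for name in status["devices"]:
    print("GPU:", name)
```

If "CUDA available" prints False even though nvidia-smi reports a GPU, the mismatch is usually between the installed driver and the libraries in the Python environment.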

After completing the steps above and verifying that torch.cuda.is_available() returns True, your deep learning environment is ready and you can move on to the first step: inferencing.

The second blog post in this series (Deep Learning with ArcGIS Pro Tips & Tricks: Part 2) covers the different types of deep learning models and provides a step-by-step guide to running deep learning in your new environment.
