A constant challenge for many administrators of ArcGIS Server sites is to balance available machine resources with the demands of site traffic. When you host many services, whether they are consumed publicly or within your organization, the resource usage from service requests can grow and grow.

At the same time, the services in your ArcGIS Server site likely don’t all receive equal levels of requests – some services are constantly working, while others sit unused for long periods.

At 10.7, released last week, ArcGIS Server introduced a new solution to these resource issues. It’s called the shared instance pool.

We think it will have positive benefits for ArcGIS Server administrators and users, and we plan to expand this capability’s scope in future releases.

Instances in an instant

Service instances can be difficult to explain in words alone, so this blog will use animated diagrams to illustrate what we’re talking about. We’ll start with the humble gym sock.

ArcGIS Server performs all of its work through the use of active service instances. An instance is executed by a computer process called an ArcSOC – hence, the sock.

When a client app makes a request to any service running on your ArcGIS Server site, such as to draw a map, geocode a data set, or run a geoprocessing job, that request is passed to an ArcSOC process on one of the machines in the site. The process will execute the request and return the result.

ArcSOCs are designed to be fast, powerful, and versatile. Users should not notice them – they just expect that when they drag and pan a map or search for an address, the system will promptly respond.

But each ArcSOC process can only handle a single request at a time. This presents a problem when a service receives multiple simultaneous requests: if an ArcSOC process is still busy with one request when another is assigned to it, the second request must wait its turn.

This manifests to the user as a performance delay – a hanging geoprocessing job or a map that’s slow to zoom.

For this reason, service instances and services are not one-to-one. Instead, each service has its own dedicated instance pool, with a defined minimum number and maximum number of service instances. The ArcGIS Server site will scale the number of active ArcSOC processes up or down within this defined range, depending on current traffic.

The default range for map services is a minimum of one and a maximum of two instances. If I leave this range as is for my two services below, and the first service is receiving two simultaneous requests, a second ArcSOC process will be powered up for that service.
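To make this scaling behavior concrete, here is a minimal Python sketch of a single dedicated pool. It assumes a simplified model in which each ArcSOC handles exactly one request and requests beyond the maximum simply queue. The `DedicatedPool` class and its methods are hypothetical illustrations, not part of any ArcGIS API; the defaults mirror the map service range of one to two instances.

```python
from dataclasses import dataclass


@dataclass
class DedicatedPool:
    """Hypothetical model of one service's dedicated instance pool.

    Each instance (ArcSOC) handles one request at a time. The pool starts
    with `min_instances` running and scales up to `max_instances` under
    load; requests beyond that wait in a queue.
    """
    min_instances: int = 1
    max_instances: int = 2

    def __post_init__(self) -> None:
        self.running = self.min_instances  # ArcSOC processes currently up
        self.busy = 0                      # instances handling a request
        self.queued = 0                    # requests waiting for an instance

    def request_arrives(self) -> str:
        if self.busy < self.running:
            self.busy += 1
            return "handled by an idle instance"
        if self.running < self.max_instances:
            self.running += 1  # power up another ArcSOC within the range
            self.busy += 1
            return "started a new instance"
        self.queued += 1
        return "queued"

    def request_completes(self) -> None:
        """Free an instance; a queued request, if any, takes it over at once."""
        if self.queued:
            self.queued -= 1
        else:
            self.busy -= 1
```

With the default range, three simultaneous requests play out as described above: the first uses the running instance, the second causes a second ArcSOC to be powered up, and the third has to wait its turn.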

Finding a balance

There’s an important reason that ArcGIS Server doesn’t just provide infinite service instances to handle all possible traffic: Each instance takes up a measurable portion of the computing resources on its machine.

Specifically, the instance takes up a certain amount of memory, often on the order of 100-200 MB of RAM.

This may not present an issue if your site runs a small number of services. But when a site serves out dozens or hundreds of services, that begins to present a resource usage problem – particularly if many of those services receive relatively little traffic.

You don’t want to restrict the number of active service instances so severely that you throttle the performance of your site. But you also don’t want to tax your system with a high number of instances if many of them are going unused.

Let’s say for simplicity’s sake that you have two services, and that you’ve determined your ArcGIS Server machine can only handle six ArcSOC processes at any given time, based on its available memory.

If the first service is constantly receiving simultaneous requests, but the second is sitting all alone without any traffic (poor sock!), you would want to free up the memory being consumed by the second service’s ArcSOC process and reallocate it. The first service could then use an additional instance for its purposes.
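The six-process limit in this example is simple division, and reallocating the idle service's instance is simple subtraction. The sketch below assumes a hypothetical 1,200 MB of memory available for ArcSOCs and 200 MB per instance (the top of the range quoted above); both numbers and both helper names are illustrative assumptions.

```python
def instance_budget(available_mb: int, per_instance_mb: int) -> int:
    """How many ArcSOC processes fit in the memory set aside for them."""
    return available_mb // per_instance_mb


def headroom(budget: int, other_minimums: list[int]) -> int:
    """Instances left for a busy service after other pools' minimums are reserved."""
    return budget - sum(other_minimums)


budget = instance_budget(available_mb=1200, per_instance_mb=200)
print(budget)                 # 6
print(headroom(budget, [1]))  # 5: the idle second service still holds one instance
print(headroom(budget, [0]))  # 6: with its minimum at zero, that memory is freed
```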

The old way

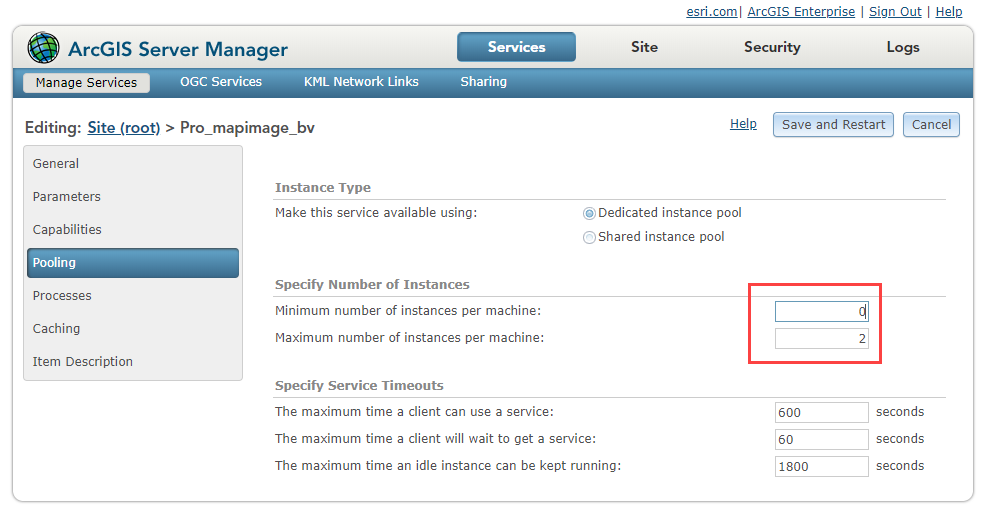

Prior to 10.7, the solution was to set the minimum number of instances in that second service’s dedicated pool to zero, as shown in the ArcGIS Server Manager screenshot above. Doing so allows ArcGIS Server to stop running ArcSOCs for the service if it hasn’t received any requests in a while.

(The amount of time that must elapse with an instance not handling any requests before it’s powered down is governed by the Idle Timeout property, which can also be specified in Manager.)

This “min-zero” solution eliminates the resource usage for services that are going unused. Because you can still set the maximum number of instances, you can accommodate services that receive infrequent bursts of traffic (such as a winter-weather map, which has little utility during the summer).

The next time the service receives a request, an ArcSOC will be powered up to handle it.

Meanwhile, your high-traffic services can continue to function with multiple ArcSOC processes.

That’s the beauty of having dedicated instance pools – each service can be configured to provide as many instances as are needed to handle its traffic, without affecting other services’ pools.

There’s a problem with this approach, though. ArcSOCs take some time to warm up when they are called into action. The unlucky user who makes the first request to a service that’s been running zero instances will experience a performance delay.

This might mean their map takes ten seconds to load a new area – certainly an inconvenience, and sometimes an unacceptable impediment. We call this the “cold start problem.”

In addition, if the service just receives that one burst of activity, the ArcSOC process that’s been powered up to handle it will then sit – taking up its portion of the machine’s memory – until the Idle Timeout property kicks in.

Even if you set half of your services to the “min-zero” setting, the instances left idling after each burst of traffic can still add up to a lot of memory on your machine, and every one of those services still poses the “cold start” problem to its users.
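The min-zero lifecycle, including both drawbacks, can be modeled in a few lines. This is a hypothetical sketch, not ArcGIS Server's implementation: it assumes a single instance, measures time in seconds, and treats the cold start as a fixed `startup_delay_s` penalty on whichever request finds no instance running. The class and attribute names are illustrative.

```python
from dataclasses import dataclass


@dataclass
class MinZeroService:
    """Hypothetical model of a dedicated pool with a minimum of zero instances."""
    idle_timeout_s: float   # plays the role of the Idle Timeout property
    startup_delay_s: float  # assumed cost of powering up a cold ArcSOC
    running: bool = False
    last_request_at: float = 0.0

    def tick(self, now: float) -> None:
        """Power the instance down once it has idled past the timeout."""
        if self.running and now - self.last_request_at > self.idle_timeout_s:
            self.running = False

    def handle(self, now: float) -> float:
        """Serve a request at time `now`; return the extra latency it sees."""
        self.tick(now)
        delay = 0.0 if self.running else self.startup_delay_s
        self.running = True
        self.last_request_at = now
        return delay


svc = MinZeroService(idle_timeout_s=1800, startup_delay_s=10)
print(svc.handle(0))   # 10: the first request pays the cold start
print(svc.handle(60))  # 0.0: the instance is still warm
svc.tick(1900)
print(svc.running)     # False: idle for 1,840 s, past the timeout
```

Between the last request at 60 s and the shutdown, the instance keeps its memory while doing nothing; multiply that by every min-zero service on the site to see how idle usage accumulates.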

Therefore, we want to have a configuration where we can isolate our high-traffic services with the resources they need, while facilitating the infrequent traffic of our other services in a way that doesn’t idly consume memory or present a performance lag.

A new option to optimize instances

That’s where shared instances come in.

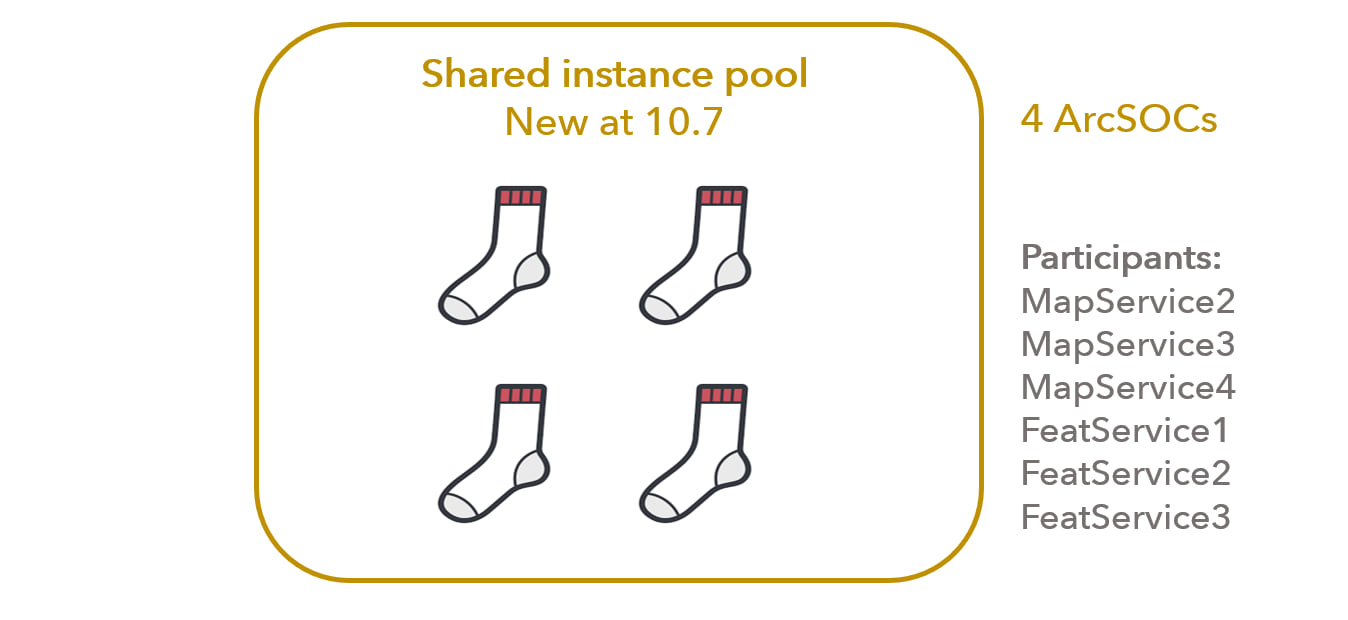

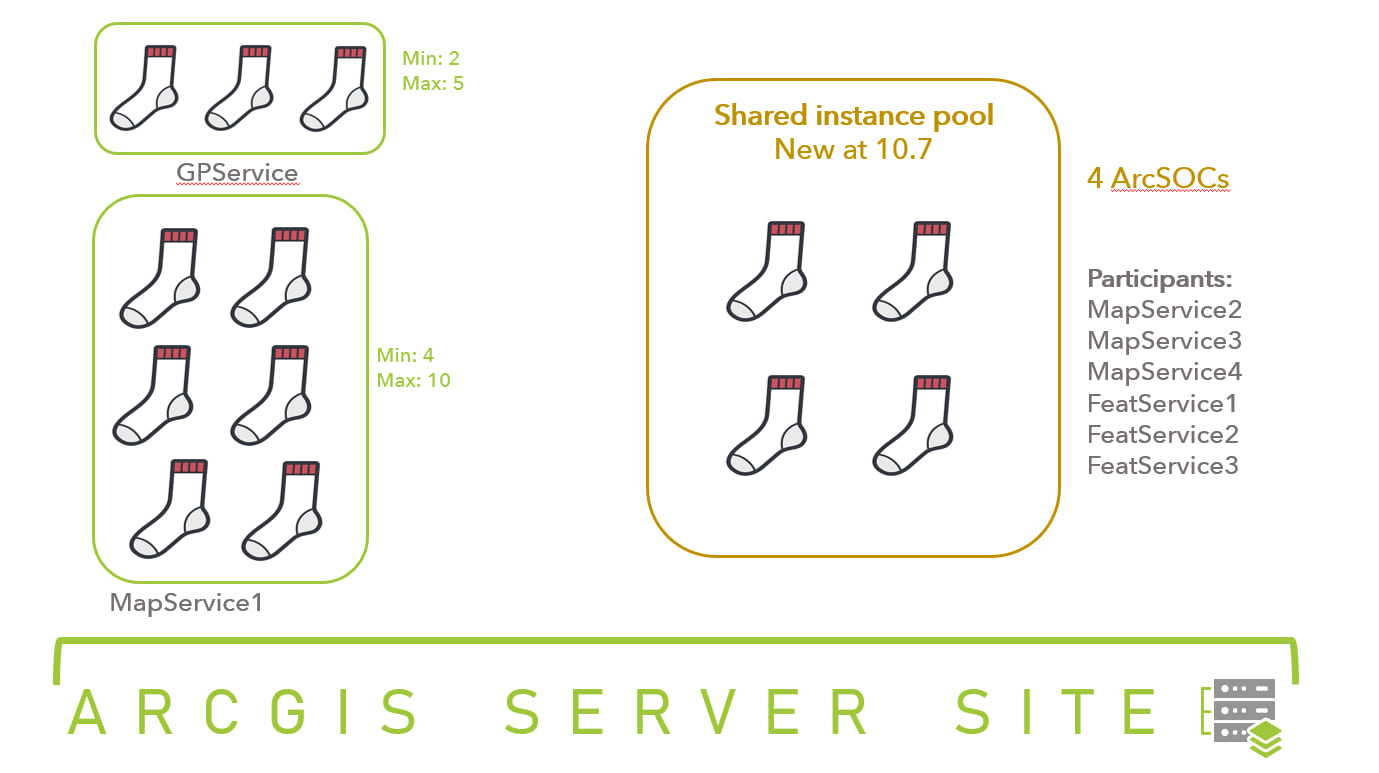

At 10.7 and later, every ArcGIS Server site now comes with a shared instance pool, containing four ArcSOC processes by default. Once a compatible map service has been published to your ArcGIS Server site, you can designate it to use the shared pool.

The service will no longer have its own dedicated pool; it will instead dip into the shared pool and use an ArcSOC or two as needed. Once it’s done handling a request, that ArcSOC is free to be used by any other service in the shared pool.

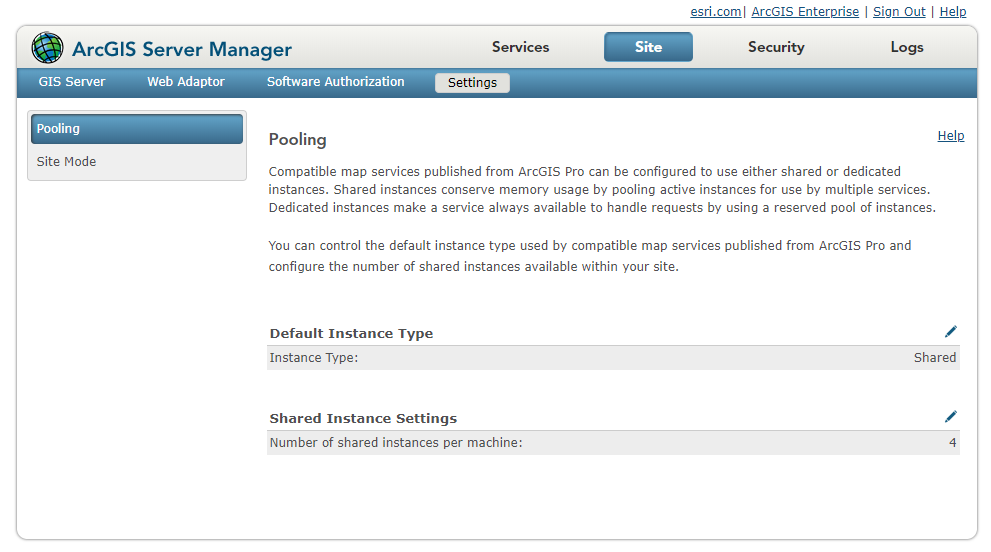

As with dedicated pools, this capability can be customized for your needs. Using the Pooling page in ArcGIS Server Manager, you can specify the default instance type for compatible services to be either dedicated or shared; if it’s the latter, all compatible services will use the shared pool by default.

You can also change the number of ArcSOCs running in the shared pool at any time. Unlike the minimum-to-maximum range you set for dedicated pools, the shared pool runs a fixed number of instances.
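A rough model of the shared pool is a fixed number of instances that any participating service can borrow and return. The `SharedPool` class below is a hypothetical illustration under simplifying assumptions (one request per instance, no particular queueing policy), using the default size of four; the service names are made up.

```python
class SharedPool:
    """Hypothetical fixed-size pool of ArcSOCs shared by many services."""

    def __init__(self, size: int = 4) -> None:
        self.size = size                  # fixed: no min/max scaling
        self.in_use: dict[str, int] = {}  # service name -> instances borrowed

    def free(self) -> int:
        return self.size - sum(self.in_use.values())

    def acquire(self, service: str) -> bool:
        """Borrow an instance for one request; False means the request must wait."""
        if self.free() == 0:
            return False
        self.in_use[service] = self.in_use.get(service, 0) + 1
        return True

    def release(self, service: str) -> None:
        """Return the instance so any other shared service can use it."""
        self.in_use[service] -= 1
        if self.in_use[service] == 0:
            del self.in_use[service]


pool = SharedPool()
for name in ["parcels", "parcels", "schools", "hydrants"]:
    pool.acquire(name)
print(pool.acquire("snow_routes"))  # False: all four instances are busy
pool.release("parcels")
print(pool.acquire("snow_routes"))  # True: the freed instance is reused
```

The key difference from the dedicated model is that no instance belongs to a particular service, so a quiet service costs nothing while it is quiet and never has to wait for an ArcSOC to start.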

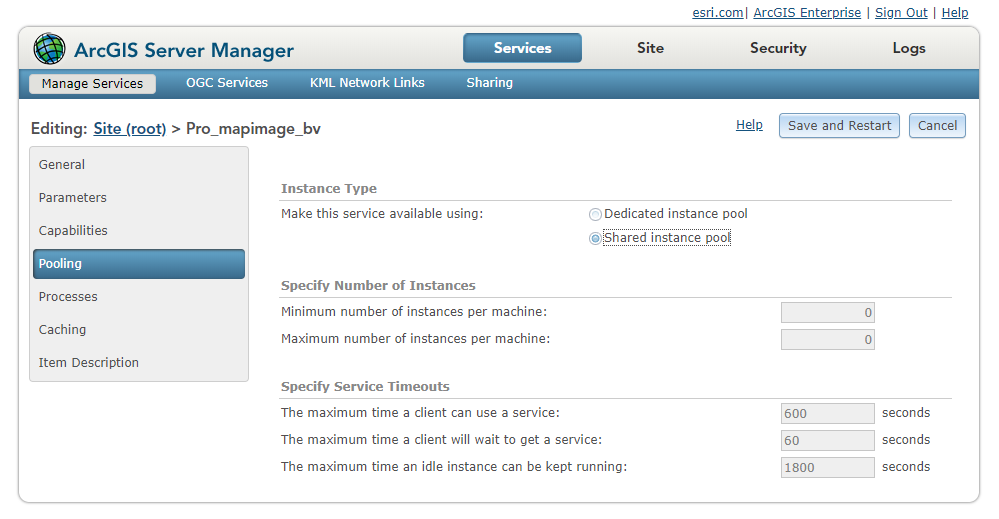

To designate an individual service to use the shared pool, open the service’s Edit page in ArcGIS Server Manager. Navigate to the Pooling tab, and under Make this service available using, select Shared instance pool. Click Save and Restart to apply your changes. You can change a service’s instance type at any time.

A service must meet certain criteria in order to use the shared instance pool. If it doesn’t, the option will be greyed out in ArcGIS Server Manager. These criteria apply:

- Only map services, including feature services, can use the shared pool.

- Only certain map service capabilities can be enabled: feature access, WFS, WMS, and KML. You can use the Capabilities tab to turn off any incompatible capabilities.

- Services published from ArcMap cannot use shared instances.

- Cached map services published from ArcGIS Pro that meet the above requirements can use shared instances.
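These criteria can be expressed as a simple check. The `ServiceInfo` type and `can_use_shared_pool` function are hypothetical stand-ins for whatever service metadata you inspect; they encode only the rules listed in this post for 10.7, so consult the documentation for your release before relying on them.

```python
from dataclasses import dataclass, field

# Capabilities listed in this post as compatible with the shared pool at 10.7.
COMPATIBLE_CAPABILITIES = {"feature access", "WFS", "WMS", "KML"}


@dataclass
class ServiceInfo:
    """Hypothetical summary of a published service; not an ArcGIS API type."""
    service_type: str    # e.g. "map", "geoprocessing"
    published_from: str  # "ArcGIS Pro" or "ArcMap"
    capabilities: set[str] = field(default_factory=set)  # extra capabilities enabled
    cached: bool = False  # caching alone does not affect eligibility


def can_use_shared_pool(svc: ServiceInfo) -> tuple[bool, list[str]]:
    """Return (eligible, reasons) according to the criteria listed above."""
    reasons: list[str] = []
    if svc.service_type != "map":
        reasons.append("only map services (including feature services) qualify")
    if svc.published_from == "ArcMap":
        reasons.append("services published from ArcMap cannot use shared instances")
    unsupported = svc.capabilities - COMPATIBLE_CAPABILITIES
    if unsupported:
        reasons.append("turn off capabilities: " + ", ".join(sorted(unsupported)))
    return not reasons, reasons


print(can_use_shared_pool(ServiceInfo("map", "ArcGIS Pro", {"WMS"}, cached=True)))
# (True, [])
```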

Why the shared pool helps

Shared instances provide a dual solution: They resolve the issue of unused services consuming unnecessary memory resources on your machine, without presenting a “cold start” to the user who makes the first request to an unused service.

But they aren’t just a replacement for the “min-zero” configuration – they are a cost-effective option for any compatible service that does not need to constantly handle multiple requests. (The definition of “constant traffic” depends on your site and its available resources – we often consider fewer than one request per minute to be “infrequent.”)

And while you configure those “infrequent traffic” services to use the shared pool, you can continue to give your other services their own dedicated pools with as many instances as they need. You’ll still be able to guarantee high availability for your services that are always in demand or are under service-level agreements.
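One way to apply this guidance is a small helper that recommends an instance type from a service's recent request count. The function name, its parameters, and the one-request-per-minute default threshold are assumptions drawn from this post's rule of thumb, not a built-in ArcGIS Server feature.

```python
def suggest_instance_type(requests_last_hour: int, shared_compatible: bool,
                          under_sla: bool = False,
                          threshold_per_minute: float = 1.0) -> str:
    """Recommend 'shared' or 'dedicated' using a requests-per-minute rule of thumb."""
    if under_sla or not shared_compatible:
        # Guarantee capacity, or the shared pool simply isn't an option.
        return "dedicated"
    rate_per_minute = requests_last_hour / 60
    return "shared" if rate_per_minute < threshold_per_minute else "dedicated"


print(suggest_instance_type(30, shared_compatible=True))                  # shared
print(suggest_instance_type(600, shared_compatible=True))                 # dedicated
print(suggest_instance_type(30, shared_compatible=True, under_sla=True))  # dedicated
```

The threshold is a parameter because, as noted above, what counts as infrequent depends on your site and its resources.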

Let’s see those socks one more time, now with our shared pool alongside the high-traffic dedicated pools.

Hop in the pool

The shared pool can drastically reduce the overall number of instances you need to run in order to meet your services’ traffic needs. That makes it easier to strike the optimal balance between site performance and memory usage, without as much administrative overhead.
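A back-of-the-envelope comparison shows where the savings come from. The site below is entirely hypothetical: 10 busy services running 3 instances each, 90 quiet services that would otherwise keep a minimum of one dedicated instance, the default shared pool of four, and an assumed 150 MB per instance. Real numbers will depend on your services and machines.

```python
def all_dedicated(busy: int, per_busy: int, quiet: int, quiet_min: int = 1) -> int:
    """Instances running when every service keeps its own dedicated pool."""
    return busy * per_busy + quiet * quiet_min


def with_shared_pool(busy: int, per_busy: int, pool_size: int = 4) -> int:
    """Instances running when the quiet services move to the shared pool."""
    return busy * per_busy + pool_size


MB_PER_INSTANCE = 150  # assumed, within the 100-200 MB range quoted earlier

dedicated = all_dedicated(busy=10, per_busy=3, quiet=90)
shared = with_shared_pool(busy=10, per_busy=3)
print(dedicated, dedicated * MB_PER_INSTANCE)  # 120 18000
print(shared, shared * MB_PER_INSTANCE)        # 34 5100
```

With these assumed numbers the site drops from 120 to 34 running instances. Setting the quiet services to min-zero would also shrink the first figure, but at the cost of the cold starts and lingering idle instances described earlier.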

Everything you need to know about service instances and the new shared pool is available in the 10.7 documentation: Configure service instance settings. You’ll find more best practices there on how to optimize your site’s deployment of service instances.

We hope this post has helped transfer some of our enthusiasm for shared instances and ArcSOCs to you! Enjoy ArcGIS Enterprise, and keep on sockin’.
