The ArcGIS Enterprise team is hard at work finishing up our latest software release and newest product offering: ArcGIS Enterprise on Kubernetes.

Designed on a new microservices architecture, this entirely new implementation of ArcGIS Enterprise, the industry-leading geospatial platform, will be available in the coming weeks with the 10.9 release.

From the outset of development, our guiding principles have been to deliver a system that provides world-class geospatial services, serves as a content authoring and collaboration environment, and is highly scalable and easy to maintain.

We’re thrilled to share and showcase many aspects of this new architecture and design, from deployment to configuration and ongoing maintenance.

Here, we’ve provided an overview of today’s demos, accompanied by a sneak peek behind the scenes. We also wanted to give you background on some of our design decisions for this exciting new option for ArcGIS Enterprise. Enjoy!

Deploy, configure, and use

Throughout the development cycle and certainly while designing the architecture, we put ourselves in the shoes of an IT administrator who’s been tasked to deploy and maintain ArcGIS Enterprise on Kubernetes.

Our aim was to keep things simple, so our mantra became: deploy, configure, and use.

In our first demo, Markus provides an overview of the steps to deploy, configure, and use ArcGIS Enterprise on Kubernetes. Markus shows how to deploy a production-ready and highly available geospatial environment for his organization.

You may have noted that before getting started, Markus had already provisioned his Kubernetes cluster, a prerequisite to deployment.

While we’ve carefully designed this offering to be agnostic of a specific Kubernetes provider, we also wanted to ensure we’re delivering an experience that we can fully test and support. For our initial release, ArcGIS Enterprise on Kubernetes is supported on three Kubernetes cluster providers, though this list will grow over time:

- On-premises data center
  - Red Hat OpenShift Container Platform

- Managed Kubernetes services on the cloud
  - Microsoft Azure Kubernetes Service (AKS)
  - Amazon Elastic Kubernetes Service (EKS)

Once you’ve provisioned a cluster in one of these environments, you’re ready to deploy.

When using the Esri-provided deployment script (./deploy.sh), you can run it interactively (recommended the first time) or silently using a file-based configuration. Silent mode is recommended for subsequent deployments, because the configuration file can be version controlled and reused to create replicas.

While the script runs, it communicates with the cluster and creates an initial set of foundational pods in a namespace of your choice, including an ingress controller, the ArcGIS Enterprise Administrator API, ArcGIS Enterprise Manager, and help documentation.
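Before moving on to configuration, you may want to confirm that the foundational pods are actually up. One way is to inspect `kubectl get pods -n <namespace> -o json`. The following minimal sketch, which is not part of Esri’s tooling, parses that JSON and lists pods that are not yet running with every container ready. The pod names in the sample are hypothetical.

```python
import json
from typing import List


def unready_pods(kubectl_json: str) -> List[str]:
    """Return names of pods that are not Running with every container ready.

    Expects the output of `kubectl get pods -n <namespace> -o json`.
    """
    pods = json.loads(kubectl_json).get("items", [])
    not_ready = []
    for pod in pods:
        name = pod["metadata"]["name"]
        status = pod.get("status", {})
        statuses = status.get("containerStatuses", [])
        # A pod counts as ready only if it has containers and all report ready.
        all_ready = bool(statuses) and all(c.get("ready", False) for c in statuses)
        if status.get("phase") != "Running" or not all_ready:
            not_ready.append(name)
    return not_ready


if __name__ == "__main__":
    # Hypothetical sample; real pod names depend on your deployment.
    sample = json.dumps({
        "items": [
            {"metadata": {"name": "ingress-controller-0"},
             "status": {"phase": "Running", "containerStatuses": [{"ready": True}]}},
            {"metadata": {"name": "admin-api-0"},
             "status": {"phase": "Pending", "containerStatuses": []}},
        ]
    })
    print(unready_pods(sample))  # ['admin-api-0']
```

An empty list means every pod in the namespace reports ready.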

Once the deployment script has finished and the initial set of pods is in place, the next step is to configure the organization. In this step, the ArcGIS Admin API orchestrates the creation of the remaining pods, from hosted data stores to apps.

You can initiate configuration from a script (./configure.sh) or, if you prefer a browser-based interface, from a setup wizard. Configuration is asynchronous, and the API returns status messages until configuration is complete. The organization is then ready to use, and the administrator can populate it with members and content.
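Because configuration is asynchronous, a script that drives it has to poll for status until the work finishes. The sketch below shows a generic polling loop with a timeout. `fetch_status` is a hypothetical callable standing in for whatever request returns the configuration status, and the terminal status strings `"COMPLETED"` and `"FAILED"` are assumptions, not documented Admin API values.

```python
import time
from typing import Callable


def wait_for_configuration(
    fetch_status: Callable[[], str],
    interval_s: float = 10.0,
    timeout_s: float = 3600.0,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Poll a status source until it reports a terminal state or times out.

    `fetch_status` is hypothetical: wire it to the status request you use.
    The terminal state names below are assumptions, not documented values.
    """
    waited = 0.0
    while True:
        status = fetch_status()
        if status in ("COMPLETED", "FAILED"):
            return status
        if waited >= timeout_s:
            raise TimeoutError(f"configuration still '{status}' after {waited:.0f}s")
        sleep(interval_s)
        waited += interval_s
```

Injecting `sleep` keeps the loop easy to test without real delays.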

The deployment and configuration steps require minimal input and provide recommended system defaults. You can provide input to these steps using a properties file that can then be version controlled and easily replicated.
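Keeping inputs in a properties file makes them easy to review and diff in version control. As a minimal sketch, the following parses simple `KEY=VALUE` lines (skipping blanks and `#` comments) and checks that required keys are present and non-empty before a silent run. The key names are hypothetical placeholders; the properties file shipped with the scripts defines the real ones.

```python
from typing import Dict, Iterable, List


def parse_properties(text: str) -> Dict[str, str]:
    """Parse KEY=VALUE lines; skip blank lines and '#' comments."""
    props: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if not sep:
            raise ValueError(f"malformed line: {raw!r}")
        # Strip surrounding whitespace and optional double quotes.
        props[key.strip()] = value.strip().strip('"')
    return props


def missing_keys(props: Dict[str, str], required: Iterable[str]) -> List[str]:
    """Return required keys that are absent or empty."""
    return [k for k in required if not props.get(k)]


# Hypothetical key names, for illustration only.
sample = """
# deployment inputs
K8S_NAMESPACE="arcgis"
REGISTRY_HOST=
"""
props = parse_properties(sample)
print(missing_keys(props, ["K8S_NAMESPACE", "REGISTRY_HOST", "ENCRYPTION_KEYFILE"]))
# ['REGISTRY_HOST', 'ENCRYPTION_KEYFILE']
```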

These shell scripts work on any client machine that supports Bash. We gave careful consideration to the credentials needed to operate ArcGIS Enterprise on Kubernetes, using Kubernetes role-based access control (RBAC) to grant just the right set of privileges.
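To illustrate the least-privilege idea, the sketch below builds a namespaced Kubernetes `Role` manifest (API version `rbac.authorization.k8s.io/v1`) as a Python dictionary and prints it as JSON, which `kubectl apply -f` also accepts. The role name and the specific resources and verbs are hypothetical examples, not the permission set Esri’s scripts actually require.

```python
import json
from typing import Dict, List


def namespaced_role(name: str, namespace: str, rules: List[Dict]) -> Dict:
    """Build a Role that grants only the listed rules within one namespace."""
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "Role",
        "metadata": {"name": name, "namespace": namespace},
        "rules": rules,
    }


# Hypothetical rules: read pods and logs, manage workloads, no wildcards.
role = namespaced_role(
    "arcgis-operator",  # hypothetical role name
    "arcgis",           # hypothetical namespace
    [
        {"apiGroups": [""], "resources": ["pods", "pods/log"],
         "verbs": ["get", "list", "watch"]},
        {"apiGroups": ["apps"], "resources": ["deployments", "statefulsets"],
         "verbs": ["get", "list", "create", "update", "patch"]},
    ],
)
print(json.dumps(role, indent=2))
```

A `Role`, unlike a `ClusterRole`, is scoped to a single namespace; it takes effect once bound to a user or service account with a `RoleBinding`.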

Perform updates and upgrades

In this demo, Shreyas walks us through the process to apply an update in ArcGIS Enterprise on Kubernetes. Here, the term covers both upgrades (a new software release) and updates (a new patch), which give administrators a seamless way to acquire new features and enhancements as soon as they’re available.

During development, our primary focus was to greatly reduce the time and effort IT administrators spend maintaining and upgrading the system.

To that end, we’re delivering the software as a set of containers with a software-driven update process that streamlines this essential workflow and saves time. We’ve also designed this process with automation in mind.

In ArcGIS Enterprise on Kubernetes, all software, including updates and upgrades, is container-based and delivered via a container registry. With each new update or software release, Esri’s CI/CD pipelines build new container images and push them to the container registry. Alongside the container images, Esri hosts a manifest that describes the update and lists the containers it requires.
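To make the manifest idea concrete, here is a minimal sketch that compares the images a manifest requires against those currently running and lists the components that need new images. The manifest layout (a `containers` list of `name`/`image` entries), the version, and the image references are hypothetical; Esri’s actual manifest format is not described in this post.

```python
from typing import Dict, List


def components_to_update(manifest: Dict, running: Dict[str, str]) -> List[str]:
    """Return component names whose running image differs from the manifest.

    `manifest` uses a hypothetical layout: {"containers": [{"name", "image"}]}.
    `running` maps component name -> image reference currently deployed.
    Components missing from `running` are reported too (new in this release).
    """
    return [
        c["name"]
        for c in manifest.get("containers", [])
        if running.get(c["name"]) != c["image"]
    ]


# Hypothetical manifest and running state.
manifest = {
    "version": "10.9.1",
    "containers": [
        {"name": "admin-api", "image": "registry.example.com/admin-api:10.9.1"},
        {"name": "map-service", "image": "registry.example.com/map-service:10.9.0"},
    ],
}
running = {
    "admin-api": "registry.example.com/admin-api:10.9.0",
    "map-service": "registry.example.com/map-service:10.9.0",
}
print(components_to_update(manifest, running))  # ['admin-api']
```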

The Admin API connects to the Esri-hosted manifest to determine when new updates and releases are available. An administrator can query the Admin API programmatically to detect new updates.
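Detecting a new release programmatically usually comes down to comparing the installed version with the latest one advertised. A minimal sketch, assuming dotted numeric version strings; how you obtain the two values (for example, from an Admin API response) is left abstract because the exact request and response fields are not covered here.

```python
from typing import Tuple


def parse_version(v: str) -> Tuple[int, ...]:
    """Turn '10.9.1' into (10, 9, 1) so versions compare numerically."""
    return tuple(int(part) for part in v.split("."))


def update_available(installed: str, latest: str) -> bool:
    """True when `latest` is strictly newer than `installed`.

    Shorter versions are padded with zeros, so '10.9' equals '10.9.0'.
    """
    a, b = parse_version(installed), parse_version(latest)
    width = max(len(a), len(b))
    a += (0,) * (width - len(a))
    b += (0,) * (width - len(b))
    return b > a


print(update_available("10.9", "10.9.1"))    # True
print(update_available("10.9.1", "10.9.0"))  # False
```

Comparing numerically matters: as plain strings, "10.10" sorts before "10.9".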

Alternatively, administrators using ArcGIS Enterprise Manager are alerted to new updates directly in the app. Applying an update is as simple as pressing a button in ArcGIS Enterprise Manager or invoking the Admin API.

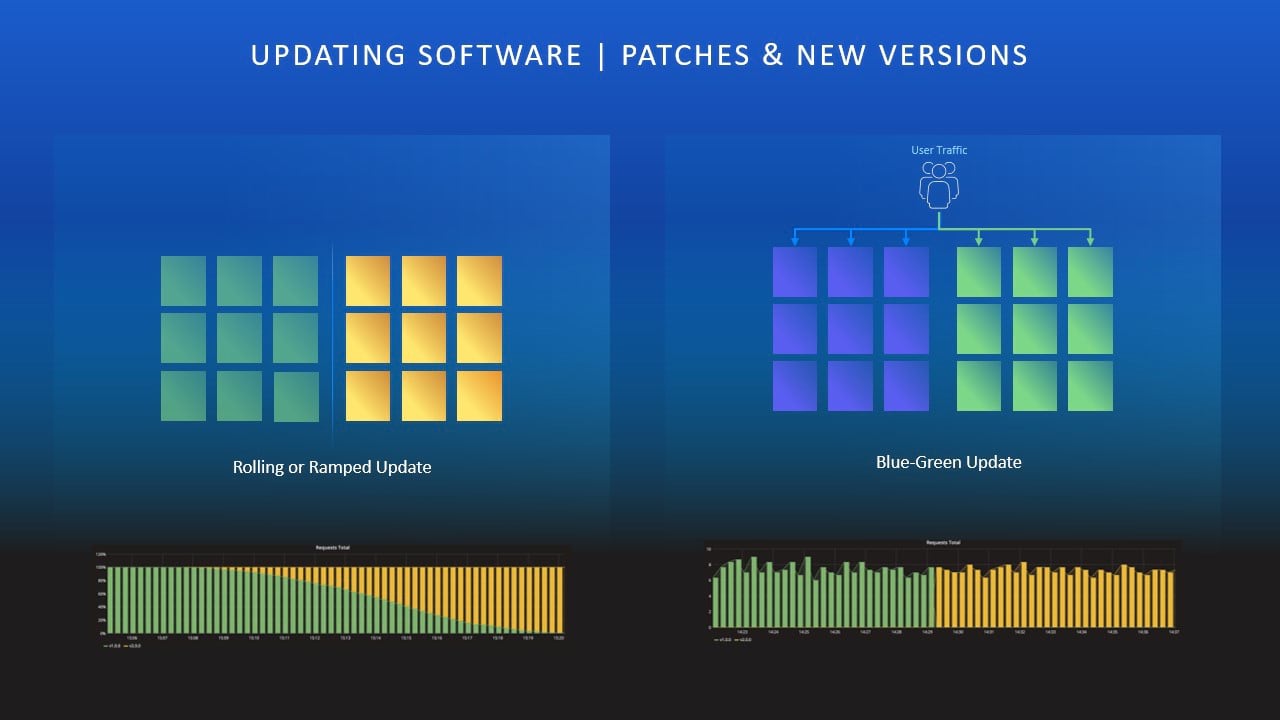

An update engine is built into the administrative tools. Once an update is available, the Admin API reads the manifest to determine which pods need to be updated. Depending upon the pod type, an appropriate update strategy is applied. For example:

- Blue-green: This strategy is applied to StatefulSets, such as those implemented by hosted data stores. Where applicable, new Kubernetes deployments are started using the new container images to upgrade and migrate the underlying data. Secondary data store instances or other replicas are then added to the data store. Once the new data store is deemed healthy, the old data store is shut down, leaving only a healthy instance of the new data store.

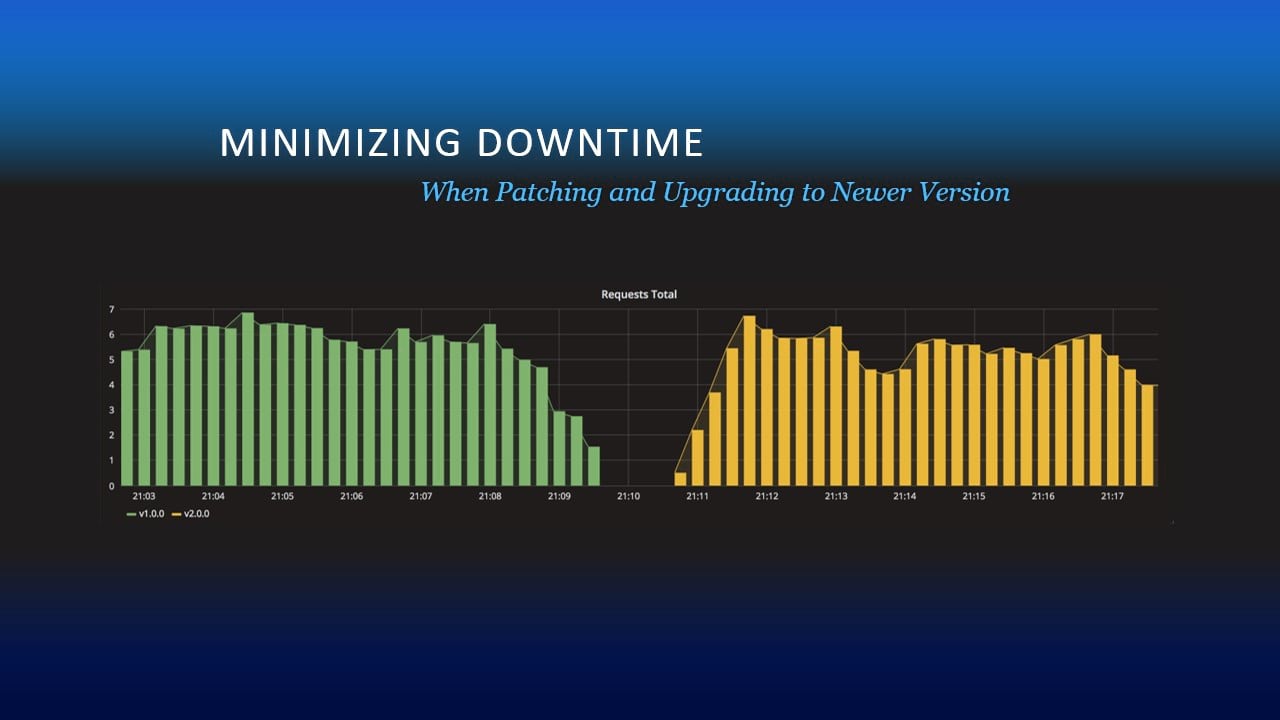

- Rolling: This strategy is used for stateless Kubernetes Deployment objects, such as GIS services. As new pods running the new container images are deployed, the old pods are shut down in a rolling manner. This approach aims to minimize downtime during updates.
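The rule in the list above, blue-green for StatefulSets such as hosted data stores and rolling updates for Deployments such as GIS services, can be expressed as a small dispatch. This sketch is illustrative only: the `Workload` type and strategy labels are hypothetical, and the update engine’s actual logic is not published here.

```python
from dataclasses import dataclass
from enum import Enum


class Strategy(Enum):
    BLUE_GREEN = "blue-green"
    ROLLING = "rolling"


@dataclass
class Workload:
    name: str
    kind: str  # Kubernetes object kind, e.g. "StatefulSet" or "Deployment"


def choose_strategy(w: Workload) -> Strategy:
    """Pick an update strategy by workload kind (illustrative rule only)."""
    if w.kind == "StatefulSet":
        # Stand up new instances on new images, migrate data, then retire the old ones.
        return Strategy.BLUE_GREEN
    if w.kind == "Deployment":
        # Replace pods incrementally so some capacity stays online throughout.
        return Strategy.ROLLING
    raise ValueError(f"no update strategy defined for kind {w.kind!r}")
```

For plain Kubernetes Deployments, the pace of a rolling update is governed by `spec.strategy.rollingUpdate.maxUnavailable` and `maxSurge`.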

Scale services

GIS services and their data are the foundation of an enterprise geospatial platform. They power mission-critical apps and workflows and help decision makers plan the best course of action.

We understand that every service request matters, and it’s imperative for administrators to know when systems are healthy and meeting their service level agreements (SLAs) and, perhaps more importantly, when they’re not.

Next, we’ll describe how administrators can monitor and manage services in their organizations using ArcGIS Enterprise on Kubernetes.

To provide monitoring tools out of the box, ArcGIS Enterprise on Kubernetes includes Prometheus, a popular tool for collecting metrics, and Grafana, a metrics visualizer for viewing the results.

As requests arrive and flow through the system, key metrics are collected from the various pods and scraped by Prometheus. The metrics can be queried using the Prometheus query language (PromQL).
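Prometheus exposes PromQL through its HTTP API at `/api/v1/query`. The sketch below builds such a request with the Python standard library and extracts values from an instant-vector response. The Prometheus address and the metric names in the example query are hypothetical; check which metrics your deployment actually exports before relying on a query like this.

```python
import json
import urllib.parse
import urllib.request
from typing import Dict, List, Tuple


def query_url(base_url: str, promql: str) -> str:
    """Build an instant-query URL for the Prometheus HTTP API."""
    return f"{base_url.rstrip('/')}/api/v1/query?" + urllib.parse.urlencode({"query": promql})


def parse_vector(body: str) -> List[Tuple[Dict[str, str], float]]:
    """Extract (labels, value) pairs from an instant-vector response."""
    payload = json.loads(body)
    if payload.get("status") != "success":
        raise RuntimeError(payload.get("error", "query failed"))
    # Each sample's "value" is [unix_timestamp, "value_as_string"].
    return [(r["metric"], float(r["value"][1])) for r in payload["data"]["result"]]


def run_query(base_url: str, promql: str) -> List[Tuple[Dict[str, str], float]]:
    """Execute the query over HTTP (requires network access to Prometheus)."""
    with urllib.request.urlopen(query_url(base_url, promql), timeout=10) as resp:
        return parse_vector(resp.read().decode("utf-8"))


# Hypothetical metric names: average request duration per service over 5 minutes.
PROMQL = (
    "sum by (service) (rate(request_duration_seconds_sum[5m]))"
    " / sum by (service) (rate(request_duration_seconds_count[5m]))"
)
```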

Raw data is powerful, but you can also use Grafana to author sophisticated dashboards that let you slice, dice, and visualize the data to understand the health of your system and services.

GIS services in ArcGIS Enterprise on Kubernetes can be configured to run in two modes:

- Shared: the service shares resources (CPU and memory) with other services running in this mode.

- Dedicated: the service runs using its own dedicated resources.

At 10.9, map services and hosted feature services can be configured to run in shared mode. Dedicated mode is supported for dynamic map services, feature services, and system geoprocessing services, and is ideal for services that require specific resource limits or have SLAs.

Administrators can use ArcGIS Enterprise Manager or the Admin API to control the number of pods for a service as well as the CPU and memory allocated to each pod.
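Because a service’s total footprint is its pod count multiplied by the per-pod CPU and memory, it can help to compute the total before changing either value. A minimal sketch, assuming the per-pod settings are expressed as Kubernetes resource quantities such as `500m` CPU or `1Gi` memory. It handles only a common subset of quantity suffixes, and `ServiceSizing` is a hypothetical helper, not an Esri type.

```python
from dataclasses import dataclass

# Subset of Kubernetes quantity suffixes: binary (Ki, Mi, ...) and decimal (k, M, ...).
_MEMORY_SUFFIXES = {
    "Ki": 1024, "Mi": 1024**2, "Gi": 1024**3, "Ti": 1024**4,
    "k": 1000, "M": 1000**2, "G": 1000**3, "T": 1000**4,
}


def cpu_cores(q: str) -> float:
    """'500m' -> 0.5 cores, '2' -> 2.0 cores."""
    return float(q[:-1]) / 1000 if q.endswith("m") else float(q)


def memory_bytes(q: str) -> int:
    """'512Mi' -> 536870912 bytes; a bare number is taken as bytes."""
    for suffix, factor in _MEMORY_SUFFIXES.items():
        if q.endswith(suffix):
            return int(float(q[: -len(suffix)]) * factor)
    return int(q)


@dataclass
class ServiceSizing:
    """Hypothetical helper: pod count plus per-pod resource quantities."""
    pods: int
    cpu_per_pod: str     # e.g. "500m"
    memory_per_pod: str  # e.g. "1Gi"

    def total_cpu(self) -> float:
        return self.pods * cpu_cores(self.cpu_per_pod)

    def total_memory_gib(self) -> float:
        return self.pods * memory_bytes(self.memory_per_pod) / 1024**3


sizing = ServiceSizing(pods=3, cpu_per_pod="500m", memory_per_pod="1Gi")
print(sizing.total_cpu(), sizing.total_memory_gib())  # 1.5 3.0
```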

Our goal is that such fine-grained resource management will allow administrators greater control of their overall system and service health.

By combining service usage metrics with fine-grained resource control on pods, administrators can build powerful autoscaling workflows that help ensure a service meets its SLA and adapts quickly to changing workloads.

While Shreyas’ demo depicts a manual workflow, it screams automation. You can use a script to poll average service response times and feed those values into simple routines that determine trends.
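The trend-detection part of that workflow can be sketched with a sliding window. The example keeps the most recent average response times and flags a rising trend when the newer half of the window is slower than the older half by a chosen ratio. The window size and ratio are hypothetical tuning values, and how you obtain each sample (for example, from a Prometheus query) is up to you.

```python
from collections import deque
from typing import Deque


class ResponseTimeTrend:
    """Track recent average response times and detect a sustained rise."""

    def __init__(self, window: int = 6, rise_ratio: float = 1.25) -> None:
        # Hypothetical defaults; tune them for your own services.
        if window < 2 or window % 2:
            raise ValueError("window must be an even number >= 2")
        self.samples: Deque[float] = deque(maxlen=window)
        self.rise_ratio = rise_ratio

    def add(self, seconds: float) -> None:
        """Record one polled average response time; the oldest drops off."""
        self.samples.append(seconds)

    def rising(self) -> bool:
        """True once the window is full and the newer half is slower by rise_ratio."""
        if len(self.samples) < self.samples.maxlen:
            return False
        values = list(self.samples)
        half = len(values) // 2
        older = sum(values[:half]) / half
        newer = sum(values[half:]) / half
        return newer > older * self.rise_ratio
```

Comparing half-window averages, rather than consecutive samples, keeps a single slow poll from triggering a scale-out.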

If average response times rise over a sustained period, indicating that the service cannot keep up with incoming load, a trigger can adjust the number of pods using the Admin API.
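The trigger itself can be a small, bounded decision function. In this sketch, the input is the average response time over the polling period compared against an SLA target: exceeding the target adds a pod, staying well below it (under half the target by default) removes one, and the result is clamped to minimum and maximum bounds. `scale_service` is a hypothetical callback standing in for the Admin API request that sets the pod count; its name, the SLA target, and the thresholds are assumptions for illustration.

```python
from typing import Callable


def desired_pods(current: int, avg_response_s: float, sla_s: float,
                 min_pods: int = 1, max_pods: int = 10,
                 scale_down_below: float = 0.5) -> int:
    """Return the pod count to request, moving at most one step at a time.

    Above the SLA target: add a pod.
    Below scale_down_below * SLA target: remove a pod.
    Otherwise: keep the current count.
    """
    if avg_response_s > sla_s:
        target = current + 1
    elif avg_response_s < sla_s * scale_down_below:
        target = current - 1
    else:
        target = current
    return max(min_pods, min(max_pods, target))


def maybe_scale(service: str, current: int, avg_response_s: float, sla_s: float,
                scale_service: Callable[[str, int], None]) -> int:
    """Call the hypothetical scale_service callback only when the count changes."""
    target = desired_pods(current, avg_response_s, sla_s)
    if target != current:
        scale_service(service, target)
    return target
```

Moving one pod per decision, and giving new pods time to warm up before the next decision, helps avoid oscillating between scale-out and scale-in.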

In this way, by combining automation with the built-in service usage metrics, administrators can scale their GIS services up or down to meet their SLAs.

In closing, we hope it’s clear that we’ve designed and built ArcGIS Enterprise on Kubernetes with administrators in mind. We hope to empower you to monitor and maintain your geospatial infrastructure with minimal effort and, in turn, open endless possibilities for your organization.
