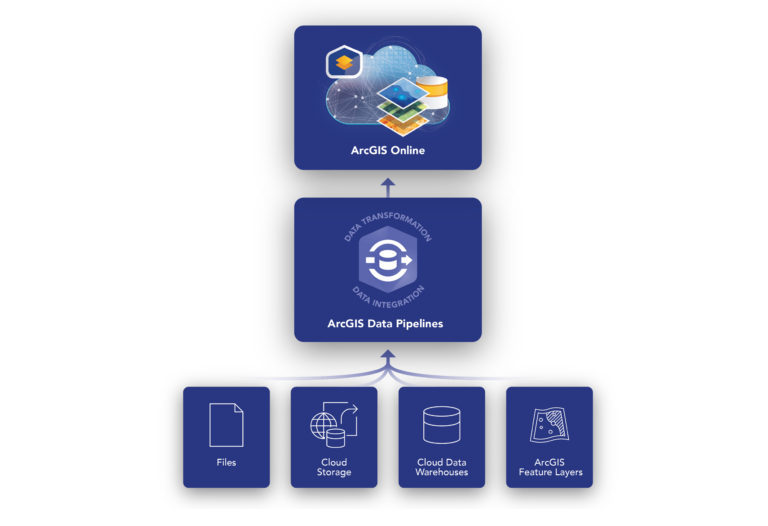

Effective geospatial analysis relies heavily on accessing, integrating, and preparing data from a variety of sources. This can be a time-consuming and tedious task, made even more complex when the source data updates on a regular basis. ArcGIS Data Pipelines provides a comprehensive solution to this challenge, and we are excited to announce that it is officially out of beta and available for general use in ArcGIS Online.

As a native data integration capability, Data Pipelines offers a fast and efficient way to ingest, engineer, and maintain data from a variety of locations, including multiple cloud-based data stores. The drag-and-drop interface minimizes the need for coding skills and simplifies the data integration and preparation process. In addition, the scheduling functionality automates data updates to ensure that your data remains current as the source data evolves.

In this blog post, we will explore the enhancements and new tools in the recent release and how they streamline data integration workflows. Keep reading to learn how Data Pipelines can unlock the full potential of your geospatial data!

What’s New in ArcGIS Data Pipelines (February 2024)

In the February 2024 update, Data Pipelines has all the features you may know from beta, and more! To learn more about the beta releases of Data Pipelines, see the Introducing Data Pipelines in ArcGIS Online (beta release) and the What’s New in Data Pipelines (October 2023) blog posts.

New features in this update include support for JSON files, new data engineering tools, plus new experiences and dialogs in the application. Check out the video demo and details below for more information.

Guided tour of the Data Pipelines editor

Get started with Data Pipelines by taking a quick guided tour of the editor. The tour helps you learn the key concepts and features of Data Pipelines before you begin building your data integration workflows. The tour appears automatically the first time you log in. After that, you can access it at any time from the editor toolbar.

JSON files and complex field support

Data Pipelines now supports JSON files from Amazon S3, Microsoft Azure Storage, and Public URL inputs. Note that JSON files are not yet supported for uploading directly to your content and therefore cannot be used as a File input.

There are also new tools and enhancements for working with the complex field types commonly found in JSON, GeoJSON, and Parquet files, such as array, map, and struct fields. These field types contain lists and dictionaries of information that can be difficult to work with without the right tools. To better leverage the information stored in these field types, we’ve added the following new tools and enhancements:

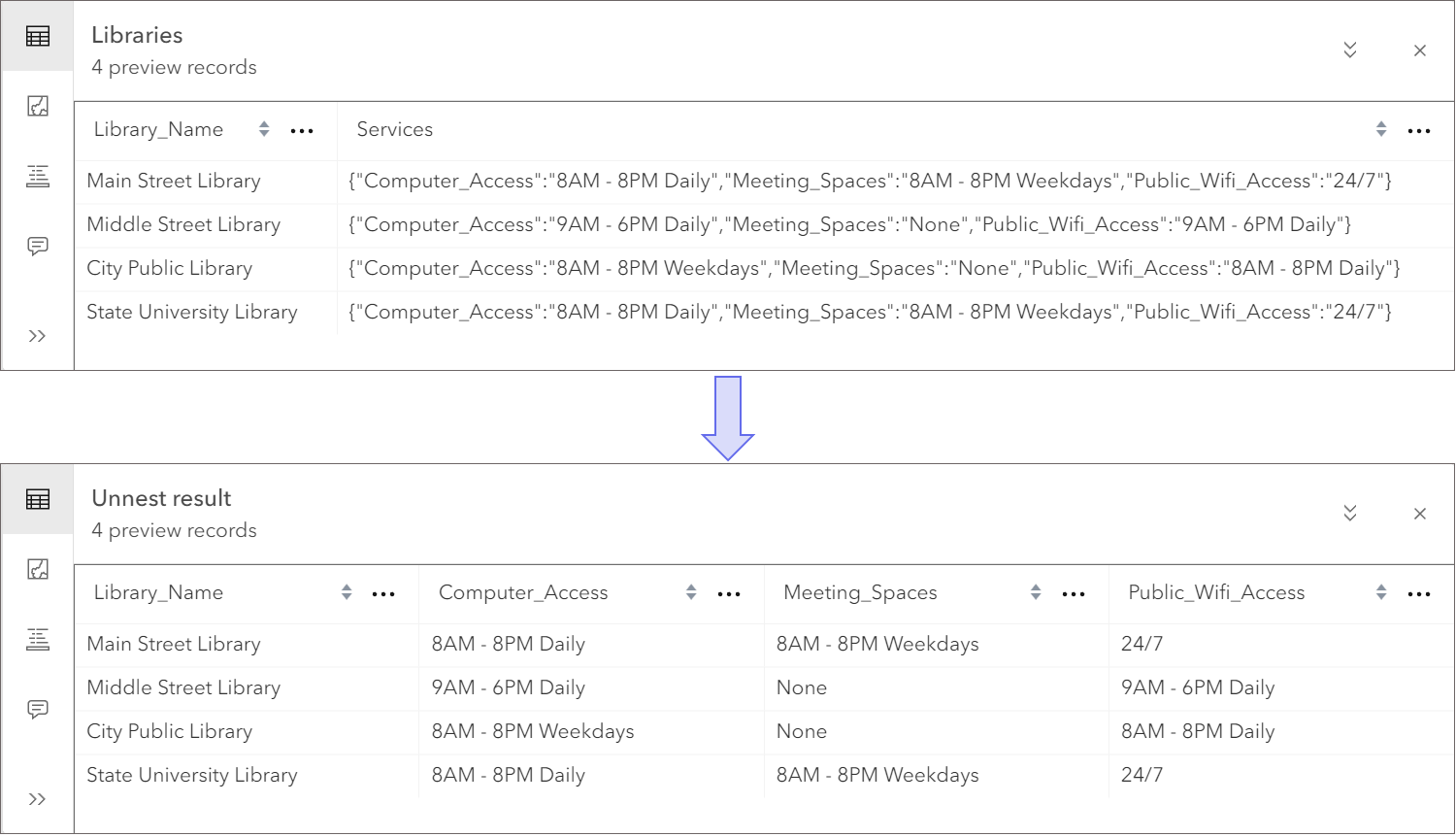

- The new Unnest field tool extracts fields and values from array, map, and struct fields. For example, let’s say that you have a dataset that represents the locations of libraries in your community. Each library record has a struct field value containing a dictionary of all the services the library offers and the hours of operation associated with each service. Use the Unnest field tool to return new fields containing the hours of operation for each service. The transformation from dictionary to new fields and values could look something like this:

- The Arcade editor, used with the Calculate field tool, now supports selecting array, map, and struct field types from the Profile variables list. Using the example above, you could extract only the computer access information using an Arcade expression such as `$record.Services["Computer_Access"]`.

- Select fields has been enhanced similarly to the Calculate field tool. Now you can browse into struct type fields and select only the values you want to keep. Again, using the example data from the image above, you could return only the `Public_Wifi_Access` field by drilling into the `Services` field using the field picker.

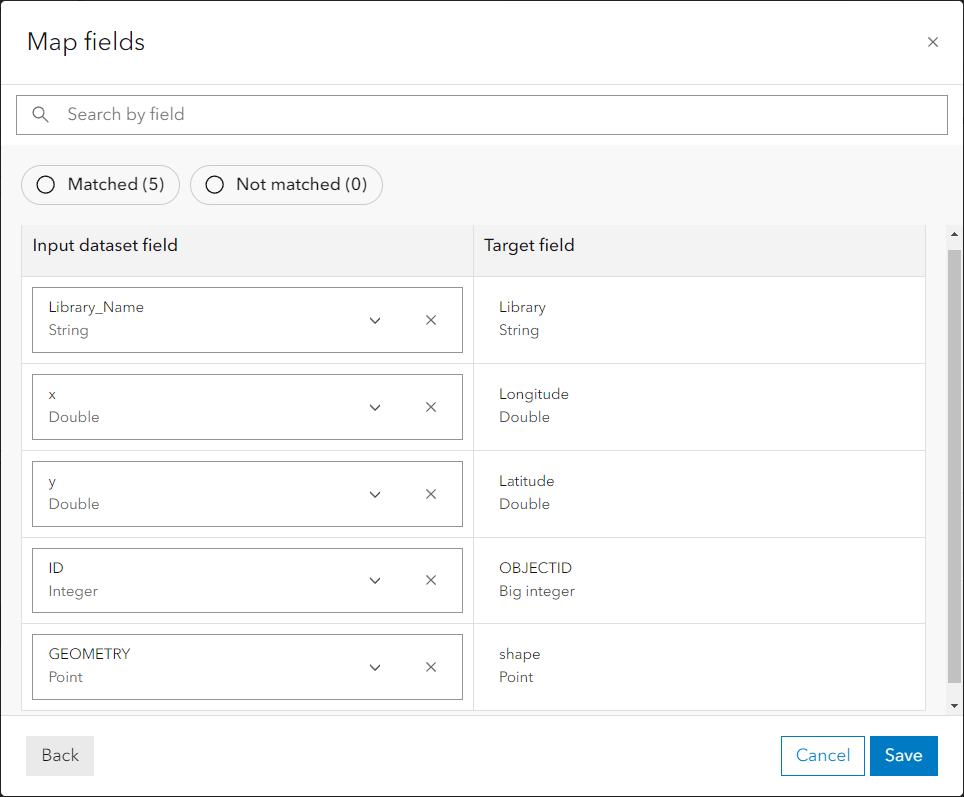
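To make the unnesting idea concrete, here is a minimal Python sketch of the same kind of transformation performed outside of Data Pipelines. It is not the tool's implementation or API. The `unnest_struct` helper, the library names, and the hours values are hypothetical; only the `Services`, `Computer_Access`, and `Public_Wifi_Access` names come from the library example above.

```python
# Hypothetical library records. "Services" plays the role of a struct field:
# a dictionary of service names and their hours of operation.
libraries = [
    {
        "Name": "Central Library",
        "Services": {
            "Computer_Access": "9:00-17:00",
            "Public_Wifi_Access": "9:00-20:00",
        },
    },
    {
        "Name": "Eastside Branch",
        "Services": {
            "Computer_Access": "10:00-16:00",
            "Public_Wifi_Access": "10:00-18:00",
        },
    },
]


def unnest_struct(record, field):
    """Promote each key of a dict-valued field to its own top-level field."""
    flat = {name: value for name, value in record.items() if name != field}
    flat.update(record.get(field) or {})
    return flat


# Comparable in spirit to Unnest field: one new field per service.
unnested = [unnest_struct(rec, "Services") for rec in libraries]

# Comparable in spirit to the Arcade expression $record.Services["Computer_Access"].
computer_access = [rec["Services"]["Computer_Access"] for rec in libraries]

# Comparable in spirit to Select fields: keep a single value from inside the struct.
wifi_only = [
    {"Name": rec["Name"], "Public_Wifi_Access": rec["Services"]["Public_Wifi_Access"]}
    for rec in libraries
]

print(unnested[0])
```

The three results correspond loosely to the three options described above: flatten everything, calculate one value, or keep only the nested value you need.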

Map fields tool

With the new Map fields tool, you can now efficiently update and standardize dataset schemas. For example, Map fields is commonly used to prepare data for use in the Merge tool, or for matching fields to the feature layer you want to update using the output Feature layer tool. See the screenshot below for an example of how input dataset fields could be mapped to target fields.

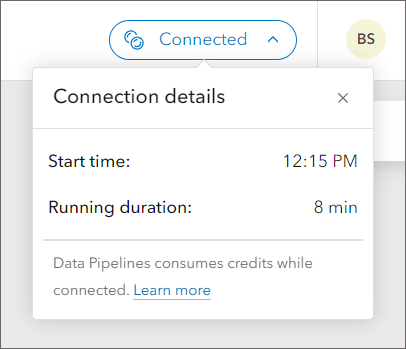
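Conceptually, mapping fields amounts to renaming input fields so that they match a target schema. The Python sketch below illustrates that idea only; it does not use or represent the Data Pipelines tool. All field names, values, and the `map_fields` helper are hypothetical, and the handling of unmapped fields is an assumption made for the sketch.

```python
# Hypothetical mapping: input field name -> target field name.
FIELD_MAP = {
    "lib_name": "Name",
    "street_addr": "Address",
    "zip": "Postal_Code",
}


def map_fields(record, field_map):
    """Rename input fields to their target names.

    Assumptions for this sketch: input fields that are not in the mapping
    are dropped, and a target field with no input value is set to None.
    """
    return {target: record.get(source) for source, target in field_map.items()}


# Two hypothetical datasets that describe the same thing with different schemas.
county_rows = [
    {"lib_name": "Central Library", "street_addr": "1 Main St", "zip": "92373", "notes": "n/a"},
]
city_rows = [
    {"Name": "Eastside Branch", "Address": "22 Oak Ave", "Postal_Code": "92374"},
]

# After mapping, both datasets share one schema and can be combined.
standardized = [map_fields(row, FIELD_MAP) for row in county_rows]
merged = standardized + city_rows

print(merged)
```

Standardizing the schema first is what makes the later combine step trivial, which is the same reason Map fields is useful ahead of the Merge tool or a feature layer update.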

Connection details dialog

Another new feature is the Connection details dialog. Use this dialog to find out when the connection was initiated and how long it has been active. The Learn more link in the dialog provides more information, including how connections work and how credits are consumed while using Data Pipelines.

More information

We want to hear from you! There is much more to come for ArcGIS Data Pipelines in the future, and we value your opinion on what new features and enhancements we can add to help you with your data preparation workflows. Share your ideas or ask us a question in the Data Pipelines community.

More details on new enhancements and improvements with the February update can be found in the What’s new documentation. To learn more about the powerful data integration capabilities of Data Pipelines, check out the Introducing Data Pipelines in ArcGIS Online (beta release) blog post, the What’s New in Data Pipelines (October 2023) blog post, and refer to the product documentation.
