Motor vehicle accidents resulted in more than 4.6 million injuries and 40,000 deaths in the United States in 2016, according to the National Safety Council (NSC), a US-based nonprofit organization.

While accidents can be traced to a variety of factors including distraction, fatigue, aggression, and impairment, the fact remains that far too many vehicular accidents occur on the roadways.

With recent advances in sensor technology installed both along roadsides and in vehicles, there is a belief that real-time alert systems will give drivers a greater awareness of accident potential and sufficient time to react.

One of the leaders in advanced driver assistance system (ADAS) technology is Mobileye, which develops camera-based products that have been embedded in millions of vehicles across the globe. The technology not only provides critical road safety capabilities; the data it collects is also being applied by municipalities to enhance their smart community initiatives.

Mobileye’s technology uses visual sensors that repeatedly scan and identify common highway features, obstacles, and conditions including lane markings, speed limits, road conditions, weather, pedestrians, accidents, obstructions, and other roadway-related information. Distances to these traffic constraints are continuously recalculated in real time, and potential dangers are conveyed to the driver with visual and audio alerts. The system employs computer vision, an application of artificial intelligence that extracts meaningful information from digital images and videos in a way that emulates how humans process and respond to visual information.
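
To make the idea of continuously recalculated distances concrete, here is a minimal Python sketch of one common way a forward collision warning can be derived: divide the current gap by the closing speed to get a time to collision, and alert when that time drops below a threshold. This is an illustration only, not Mobileye's algorithm; the function names and the 2.5-second threshold are assumptions made for the example.

```python
from typing import Optional


def time_to_collision(distance_m: float, closing_speed_mps: float) -> Optional[float]:
    """Seconds until contact if the closing speed stays constant.

    Returns None when the gap is not shrinking (closing speed <= 0).
    """
    if closing_speed_mps <= 0:
        return None
    return distance_m / closing_speed_mps


def should_warn(distance_m: float, closing_speed_mps: float,
                threshold_s: float = 2.5) -> bool:
    """Decide whether to alert the driver.

    threshold_s is a hypothetical value chosen for illustration,
    not a figure taken from any production system.
    """
    ttc = time_to_collision(distance_m, closing_speed_mps)
    return ttc is not None and ttc < threshold_s


if __name__ == "__main__":
    # A vehicle 30 m ahead, with the gap closing at 15 m/s.
    print(time_to_collision(30.0, 15.0))
    print(should_warn(30.0, 15.0))
```

In a real system this check would be repeated for every tracked object on each new camera frame, which is why the distances have to be recalculated continuously.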

The technology deployed includes several traffic monitoring capabilities. The resultant safety features include autonomous emergency braking, blind spot monitoring, lane centering, forward collision warning, intelligent speed adaptation, night vision, pedestrian detection, road sign recognition, and other functions. The extensive amount of data collected to support these features is processed on the fly using onboard technology capable of performing trillions of mathematical calculations per second.

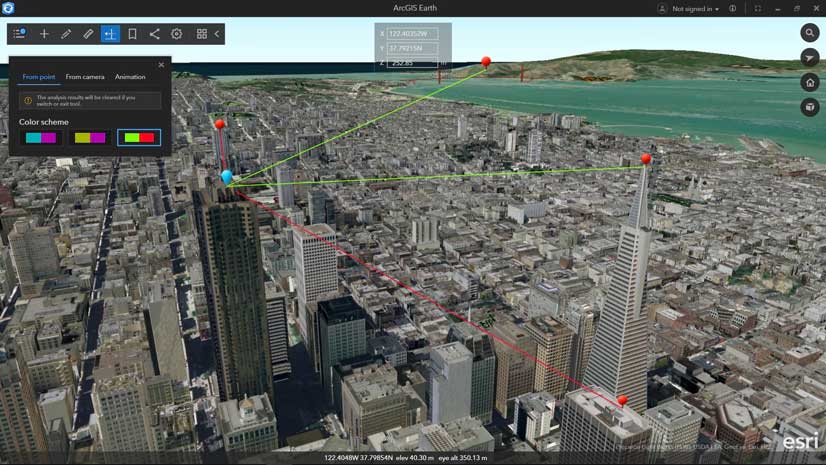

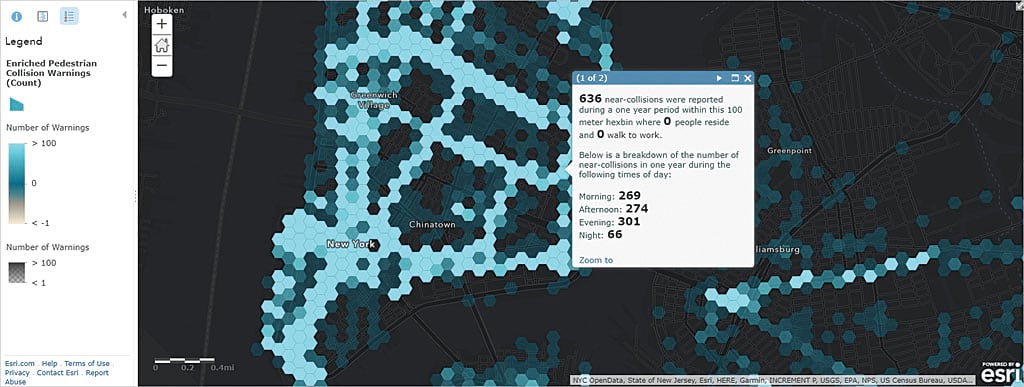

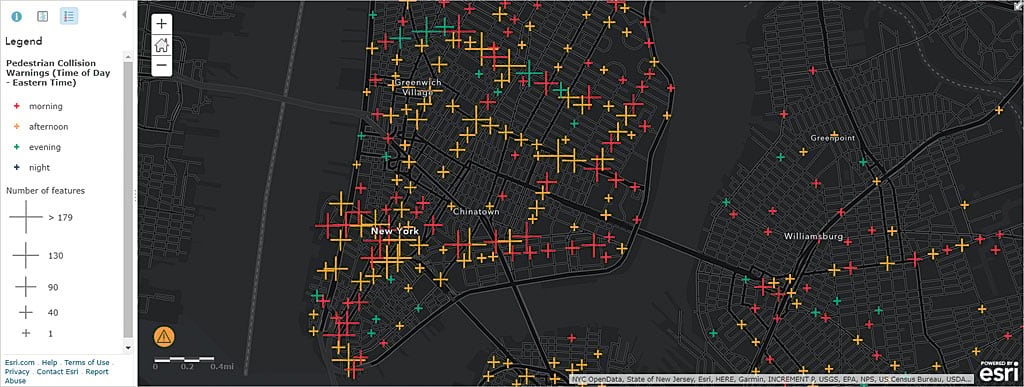

The spatial analysis capabilities in Esri’s software are applied to the data collected by Mobileye’s ADAS to expand its functionality and provide cutting-edge location intelligence, refined visualization, and enhanced mapping capabilities. By synthesizing the data from this network of sensors into a common unified map, cities can now have a type of situational awareness that was previously unavailable.

“Vehicles equipped with this technology can function together as a fleet of powerful sensors that are actively moving around the city continually collecting imagery and data,” said Jim Young, the head of business development at Esri. “The data collected provides the opportunity for the real-time monitoring needed for a number of community initiatives including public safety and emergency response. We can provide it in a dashboard, overlaid with other data layers, to city officials to support better civic engagement.”

When combined with other geospatial data maintained by the city, this information can stimulate cross-disciplinary collaboration among local traffic planners and engineers, police officers, and policy makers in support of smart community initiatives. Vision Zero is one such initiative that is gaining support in cities throughout the world. It was first implemented in Sweden in the 1990s in an effort to eliminate traffic fatalities and severe injuries while increasing safe, healthy, equitable mobility for all.

“Currently, we are developing connected ADAS systems,” said Nisso Moyal, director of Business Development & Big Data at Mobileye. “What this means is that we will be able to alert drivers not only to a potential collision that has been detected by the onboard camera itself but also to dangerous conditions that are on the roadway ahead, such as a sharp curve or an accident 500 meters up the road that has been identified by another vehicle equipped with our technology.”
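
As a rough illustration of the connected alert Moyal describes, the Python sketch below filters hazards reported by other vehicles down to those within 500 meters of a given vehicle. It is a simplified sketch under stated assumptions, not Mobileye's design: the hazard record layout, the function names, and the use of straight-line (haversine) distance are all hypothetical, and a real system would measure distance along the road in the direction of travel.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in meters


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in meters between two latitude/longitude points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def hazards_within(vehicle_lat: float, vehicle_lon: float,
                   hazards: list, radius_m: float = 500.0) -> list:
    """Return the reported hazards within radius_m of the vehicle.

    Each hazard is a dict with hypothetical keys "kind", "lat", and "lon".
    Straight-line distance is an assumption made to keep the sketch short.
    """
    return [h for h in hazards
            if haversine_m(vehicle_lat, vehicle_lon, h["lat"], h["lon"]) <= radius_m]


if __name__ == "__main__":
    reports = [
        {"kind": "accident", "lat": 0.004, "lon": 0.0},    # roughly 445 m away
        {"kind": "sharp curve", "lat": 0.010, "lon": 0.0},  # roughly 1.1 km away
    ]
    for hazard in hazards_within(0.0, 0.0, reports):
        print(hazard["kind"])
```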

Mobileye is planning to make greater use of artificial intelligence in the autonomous car system it is developing so that the cars using the system can respond more quickly and intelligently in emergency situations as well as heavy traffic conditions. By analyzing and learning from the data it collects and the decisions it makes based on that data, the technology will go beyond rule-based decision-making and develop more human-like response skills.