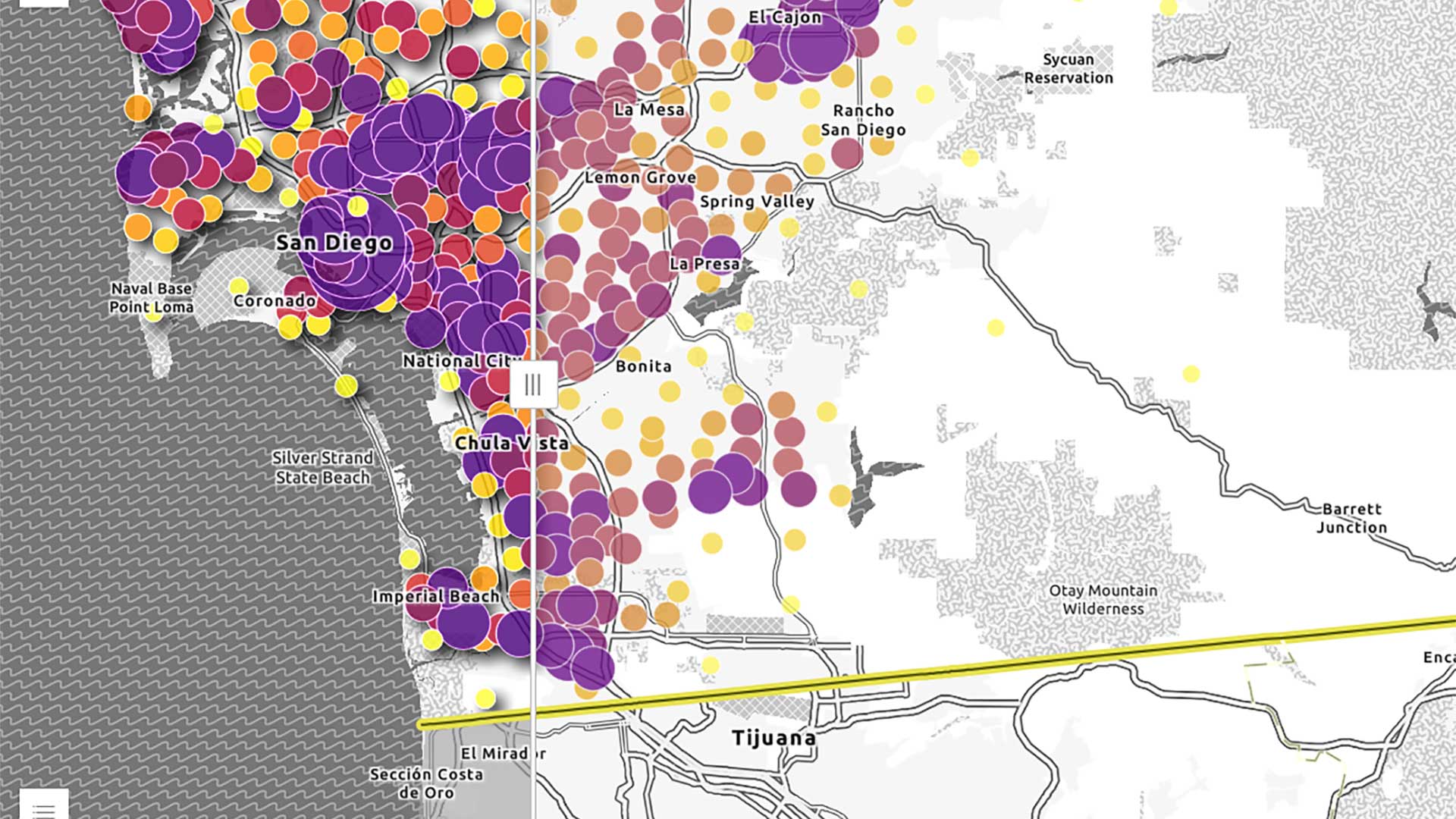

Interactive maps are highly visual. Apps can reach wider audiences when they provide additional context, such as the map’s purpose or the data it contains, for people with low or no vision. The same strategies can also help users on slower internet connections, where content takes longer to load.

A fully sighted user can see when a map has loaded on a page, but users who rely on screen readers or other assistive technologies may not know when the map has loaded. When content changes dynamically, an Accessible Rich Internet Applications (ARIA) live region can convey that change to users through their assistive technologies. ARIA live regions programmatically expose dynamic content changes, such as a list of search results updating on the fly or a discreet alert that does not require user interaction, so that assistive technologies can announce them.

Add a Live Region

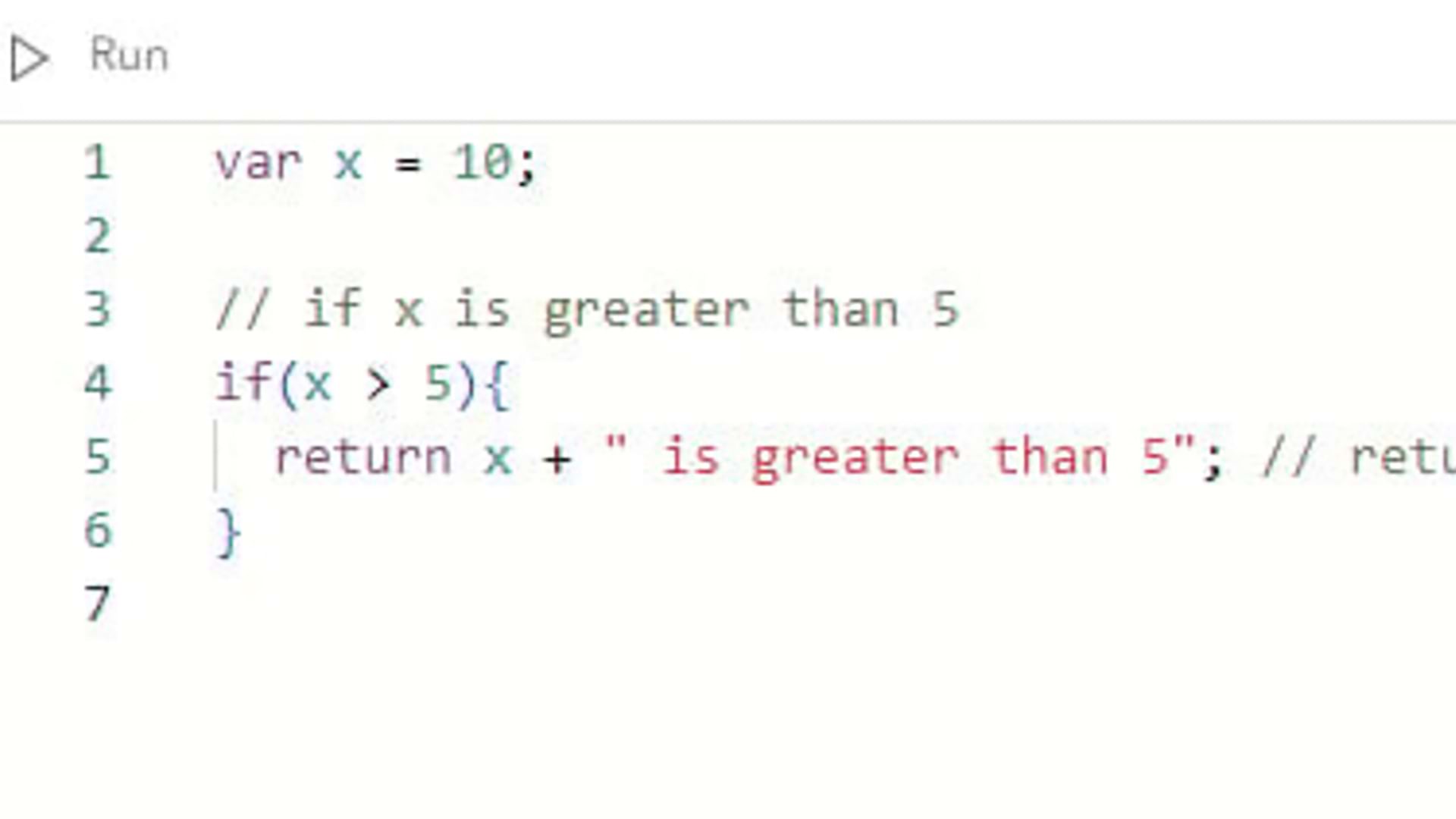

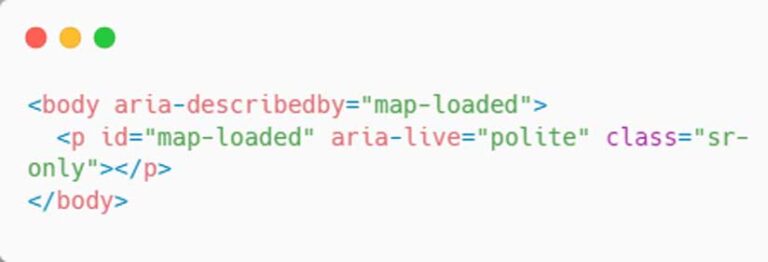

To give assistive technologies similar, on-demand context about when the map has loaded, add a live region to the page. Add an aria-describedby attribute to the parent element of the map, such as the document’s body. The aria-describedby attribute references the id of another element on the page, which holds the description. That element can also include the aria-live attribute set to polite so that assistive technologies announce updates when the user is idle. (See Listing 1.) With the aria-describedby attribute in place, people navigating the app with assistive technologies can better understand its content.
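The markup described above can also be created from script. The following is a minimal sketch using standard DOM methods, not a reproduction of Listing 1. The function name `addLiveRegion` and the default id `map-loaded-region` are hypothetical placeholders; the document and parent element are passed in as parameters so the function does not depend on a particular page.

```javascript
/**
 * Creates a polite ARIA live region and links it to a parent element
 * (for example, document.body) through aria-describedby.
 * The default id "map-loaded-region" is a hypothetical placeholder.
 */
function addLiveRegion(doc, parent, id = "map-loaded-region") {
  const region = doc.createElement("div");
  region.id = id;
  // "polite" asks assistive technologies to wait until the user is idle.
  region.setAttribute("aria-live", "polite");
  // Visually hidden; the sr-only rules are defined in the app's CSS.
  region.classList.add("sr-only");
  parent.appendChild(region);
  // The parent's aria-describedby points at the description element's id.
  parent.setAttribute("aria-describedby", id);
  return region;
}

// Browser usage (assumed): const liveRegion = addLiveRegion(document, document.body);
```

Create the region early, before the map starts loading. Some screen readers only announce changes to live regions that were already in the page when the change happened.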

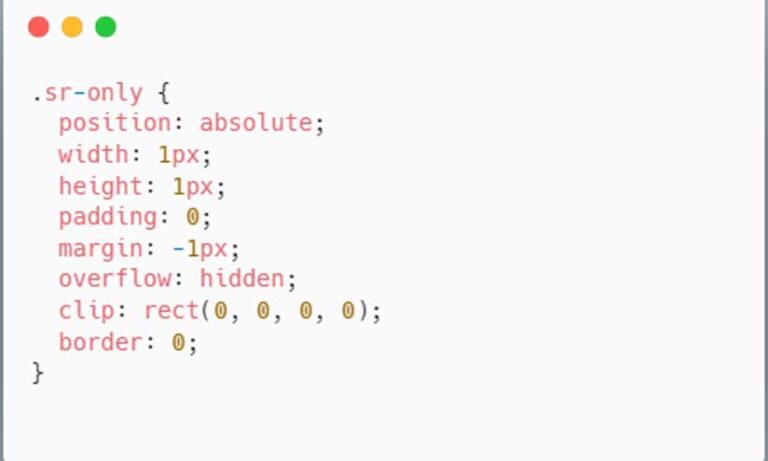

To visually hide the description, add an sr-only class to the element. The element keeps its text, so assistive technologies can still read it, but the class’s CSS hides it on screen, as shown in Listing 2.

To apply a similar strategy for users on slow internet connections, omit the CSS class shown in Listing 2 so the message is both displayed on screen and announced by assistive technologies. Sighted users then see the same loading information while they wait for the map to appear.
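One way to decide at run time whether to show the message is sketched below. It is an assumption, not part of the original approach: it reads the browser's Network Information API (`navigator.connection.effectiveType`), which is only available in some browsers, and treats anything at or below "3g" as slow. The function names `isSlowConnection` and `setMessageVisible` are hypothetical.

```javascript
/**
 * Returns true when the Network Information API reports a slow connection.
 * navigator.connection is missing in browsers without the API, so a
 * missing object is treated as "not slow". Counting "3g" as slow is an
 * assumption; adjust the list to suit the app.
 */
function isSlowConnection(connection) {
  if (!connection) return false;
  return ["slow-2g", "2g", "3g"].includes(connection.effectiveType);
}

/**
 * Shows or hides a message element by toggling the sr-only class.
 * Without the class, the text is displayed on screen and is still
 * announced by assistive technologies.
 */
function setMessageVisible(element, visible) {
  // classList.toggle(name, force) adds the class when force is true
  // and removes it when force is false.
  element.classList.toggle("sr-only", !visible);
  return element;
}

// Browser usage (assumed): setMessageVisible(liveRegion, isSlowConnection(navigator.connection));
```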

Dynamically Add the Description

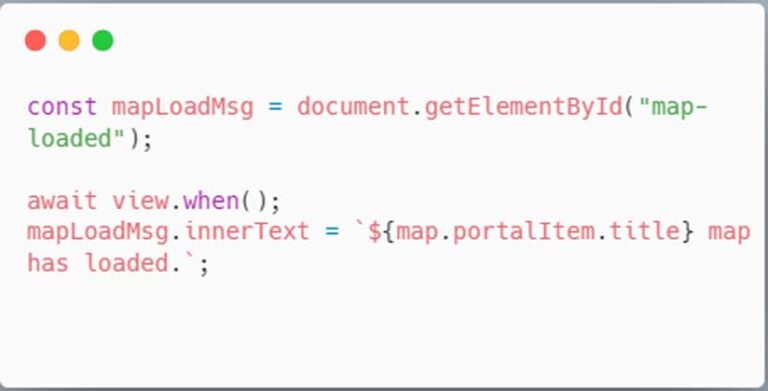

After the view is ready, update the live region with the web map’s title, as shown in Listing 3.
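A minimal sketch of this step follows, assuming the ArcGIS Maps SDK for JavaScript, where `view.when()` runs a callback once the view is ready and a web map exposes its item metadata through `portalItem.title`. The function name `announceMapLoaded` and the message wording are hypothetical; Listing 3 may phrase the announcement differently.

```javascript
/**
 * Writes a "map loaded" message into the live region.
 * Assumes `map` is a WebMap whose portalItem may be undefined
 * (for example, a map not loaded from a portal item).
 */
function announceMapLoaded(liveRegion, map) {
  const title = map?.portalItem?.title;
  const message = title ? `${title} map has loaded.` : "The map has loaded.";
  // Replacing the text of a polite live region queues an announcement.
  liveRegion.textContent = message;
  return message;
}

// Browser usage (assumed setup with an existing MapView named view):
// view.when(
//   () => announceMapLoaded(liveRegion, view.map),
//   () => { liveRegion.textContent = "The map could not be loaded."; }
// );
```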

Create a Map Description

The map can also provide a description that assistive technologies read when a user focuses on the map. As with the live region, add a new element to contain the map’s description. As shown in Listing 4, give the element the same sr-only class so the description is visually hidden.

If an app contains a web map, the web map’s snippet or description can be used to update the map-description element, which provides additional context on the map’s purpose and contents to the user.
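A sketch of choosing the text is shown below, assuming the web map's `portalItem` exposes `snippet` (a short plain-text summary) and `description` (which may contain HTML). It prefers the snippet and falls back to the description with tags removed by a simple regular expression, which is a rough approach rather than a full HTML parser. The function names and the fallback text are hypothetical.

```javascript
/**
 * Picks text for the map-description element: the snippet first,
 * then the description with HTML tags stripped, then a fallback.
 */
function getMapDescription(portalItem, fallback = "Interactive map.") {
  const snippet = portalItem?.snippet?.trim();
  if (snippet) return snippet;
  const description = portalItem?.description
    ?.replace(/<[^>]*>/g, " ") // drop HTML tags (rough, not a sanitizer)
    .replace(/\s+/g, " ")      // collapse runs of whitespace
    .trim();
  return description || fallback;
}

/** Writes the chosen text into the map-description element. */
function updateMapDescription(element, portalItem) {
  element.textContent = getMapDescription(portalItem);
  return element.textContent;
}

// Browser usage (assumed):
// view.when(() => updateMapDescription(
//   document.getElementById("map-description"), view.map.portalItem));
```

In a browser, `DOMParser` would handle HTML entities such as `&amp;` more reliably than the regular expression used here.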

To add the description to the map view’s surface node, use getElementsByClassName to return the elements that have the esri-view-surface class. There is only one such element, but the method returns an HTMLCollection, an array-like list rather than a true array. The spread (...) syntax converts the collection into an array, which can then be iterated to apply the description to the element, as shown in Listing 5.
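The pattern can be sketched as follows, assuming the description is attached through aria-describedby (as with the live region) and that the description element's id is `map-description`. The function name `describeViewSurface` is hypothetical, and the lookup root is a parameter so the function can be scoped to the whole document or to a single view's container.

```javascript
/**
 * Links every element with the esri-view-surface class inside `root`
 * to the description element through aria-describedby.
 * Returns the number of surface elements updated.
 */
function describeViewSurface(root, descriptionId = "map-description") {
  // getElementsByClassName returns a live HTMLCollection, not an array;
  // spreading it into an array makes forEach available.
  const surfaces = [...root.getElementsByClassName("esri-view-surface")];
  surfaces.forEach((surface) => surface.setAttribute("aria-describedby", descriptionId));
  return surfaces.length;
}

// Browser usage (assumed): view.when(() => describeViewSurface(document));
```

Waiting for `view.when()` before running the lookup is a precaution: the surface element is created by the view, so querying too early may return an empty collection.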

Explore the Demonstration App

The demonstration app’s code (links.esri.com/democode) and the app itself (links.esri.com/demoapp) are available on GitHub.

To test the app:

1. Enable a screen reader, such as the free NVDA screen reader (https://www.nvaccess.org/) or JAWS (links.esri.com/jaws), both for Windows, or VoiceOver, which is included with macOS.

2. Open the app in a browser window. With VoiceOver, use Safari, the browser VoiceOver supports on macOS.

3. Navigate into the app and explore the live region and map description.

Note: Not all assistive technologies support the aria-describedby attribute; Narrator, for example, does not. For full support details for aria-describedby, visit a11ysupport.io.